
Psychology has roots in both philosophy and biology. This dual relation is probably most evident in the study of sensation and perception. Philosophers have long been interested in sensation and perception because our senses put us in contact with the external world; our perceptions are our representations of that world. René Descartes believed that perception was among our innate abilities. John Locke and other empiricists, however, argued against innate abilities, suggesting instead that knowledge is based on experience obtained through the senses or by reflection on residual sensory stimulation. Locke divided the qualities we experience into primary and secondary qualities. Primary qualities are inherent in an object (e.g., size), whereas secondary qualities are produced in the perceiver by the object (e.g., color). George Berkeley disagreed with Locke’s notion of primary and secondary qualities because he felt it created a dualism between physical objects and mental perceptions. Berkeley believed only in perceptions: because everything is perceived, the physical world is not necessary, and therefore materialism is irrelevant. Berkeley, an ordained minister, further believed that objects exist permanently because God perceives the world. An important issue associated with the empiricist view is whether we perceive the world veridically. If our knowledge is based on our perceptions of the world, do our perceptions accurately represent the world, or are they subject to error? If our perceptions are subject to error, then the knowledge we obtain from our senses is also subject to error (see Gendler & Hawthorne, 2006, for an overview of traditional and current issues in the philosophy of perception).

John Stuart Mill believed in the importance of experience but also added the idea of contiguity as a mechanism for acquiring knowledge and ideas through our senses. Contiguity refers to both space and time: sensations that occur close together in space or time become associated with each other. Furthermore, associations can be formed between present stimuli and images of stimuli that are no longer present. Alexander Bain added that associations between sensations result in neurological changes, although the mental and biological operations take place in parallel.

These philosophical positions helped develop theories of how we learn, but they do not address, for example, the relation between how loud a sound is and how loud we perceive it to be. Psychophysics developed to determine the relation between a physical stimulus and the corresponding psychological experience (see Chapter 20 by Krantz in this volume). The three most influential people in the development of psychophysics were Ernst Weber, Gustav Fechner, and S. S. Stevens. Weber wrote The Sense of Touch in 1834 and proposed the concept of the just noticeable difference (JND), the smallest detectable change in a stimulus. Fechner further contributed to psychophysics by expanding the work on JNDs and by developing the method of limits, the method of adjustment, and the method of constant stimuli as tools for assessing the relation between a physical stimulus and its mental counterpart. Although Fechner believed that there was a lawful relation between a stimulus and the perception of it (he proposed that sensation grows with the logarithm of stimulus intensity), he also believed that the physical and mental worlds operate in parallel with each other and do not interact (a position known as psychophysical parallelism). Stevens disagreed with Fechner’s logarithmic law and instead argued that physical and psychological magnitudes are related by a power function (Stevens’ power law).
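The three relations described above can be compared numerically. The sketch below is illustrative only: it assumes the standard textbook forms of Weber’s law (ΔI = kI), Fechner’s law (S = k log(I / I0), where I0 is the absolute threshold), and Stevens’ power law (S = kI^a), and the Weber fraction and exponent used are hypothetical values chosen for demonstration, not measurements reported in this paper.

```python
import math


def weber_jnd(intensity: float, weber_fraction: float) -> float:
    """Weber's law: the just noticeable difference is a constant proportion of intensity."""
    return weber_fraction * intensity


def fechner_sensation(intensity: float, threshold: float, k: float = 1.0) -> float:
    """Fechner's law: sensation grows with the log of intensity relative to absolute threshold."""
    if intensity <= 0 or threshold <= 0:
        raise ValueError("intensity and threshold must be positive")
    return k * math.log(intensity / threshold)


def stevens_sensation(intensity: float, exponent: float, k: float = 1.0) -> float:
    """Stevens' power law: sensation is a power function of intensity."""
    return k * intensity ** exponent


if __name__ == "__main__":
    WEBER_FRACTION = 0.1  # hypothetical value for illustration
    EXPONENT = 0.67       # hypothetical exponent (below 1 means compressive growth)
    for intensity in (100.0, 200.0, 400.0, 800.0):
        print(
            f"I={intensity:6.0f}  JND={weber_jnd(intensity, WEBER_FRACTION):5.1f}  "
            f"Fechner={fechner_sensation(intensity, threshold=1.0):.3f}  "
            f"Stevens={stevens_sensation(intensity, EXPONENT):.3f}"
        )
```

Under Fechner’s law each doubling of intensity adds the same amount to sensation, whereas under Stevens’ law each doubling multiplies sensation by the same factor (2 raised to the exponent); this is why the two laws make different predictions for magnitude judgments.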

Wilhelm Wundt also played a significant role in establishing a scientific basis for examining perceptual processes. Although Wundt used systematic introspection, a method that was later called into question, he also used simple, discrimination, and choice reaction time tasks to examine our immediate conscious experience. Reaction times are perhaps the most widely used dependent variable in perception and cognitive research. Wundt used these methods to determine the elements of perception (elementism). Wundt also believed that our conscious experience comprised not only sensations but also affect, a belief that highlights the fact that several processes influence how we perceive the world and how we interact with it. However, Christian von Ehrenfels (1890), in a critique of Wundt, suggested that consciousness also contains Gestaltqualitäten (form qualities), aspects of experience that cannot be explained by simple associations between sensations. While at the University of Prague, von Ehrenfels influenced a young student, Max Wertheimer, who eventually developed Gestalt psychology.

Have you ever stopped at a traffic light, seen a car out of the corner of your eye, and then suddenly stepped harder on the brake pedal because you thought that you were moving? Something like this happened to Wertheimer on a train. The event made him realize that he perceived motion even though his sensory information indicated that he was stationary. Our perceptions, therefore, are based on more than simple associations of sensory information; a whole perception may be different from the sensory parts it comprises. This realization led to the Gestalt maxim “The whole is greater than the sum of its parts” and to a phenomenological approach to examining perceptual experience. Using this approach, Wertheimer proposed the Gestalt principles of perceptual organization (e.g., proximity, continuity, similarity, closure, simplicity, and figure-ground).

David Marr (1982) later proposed a computational theory of object perception. According to Marr, object recognition occurs through three stages of processing. First, a primal sketch, or abstract representation, is obtained from the retinal information, producing a blob-like image of contour locations and groupings. Additional processing of the primal sketch leads to a 2½-D sketch, which yields an abstract description of the orientation and depth of the object’s surfaces relative to the viewer. Finally, a 3-D model is constructed that represents the object as a number of volumetric primitives (simple shapes such as a cylinder) and the relationships among them. Thus, Marr believed that objects are perceived through a series of computational processes that render an image based on the relations between simple volumetric shapes. Similar to Marr’s use of volumetric shapes, Biederman (1987) proposed recognition-by-components (RBC) theory, in which simple geometric shapes, or geons (e.g., cylinder, cube, wedge), are combined to produce a mental representation of a physical object. The geons can vary along several dimensions to represent different parts of objects. For instance, if you place a medium-size snowball (sphere) on top of a large snowball, you have a snowman. If you place an object made of a long, thin cylinder connected perpendicularly to a short, wide cylinder at the snowman’s mouth, you have added a corncob pipe. A flattened cylinder (or disc) represents the button nose, and the two coal eyes can be represented the same way.
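Biederman’s idea that an object is a set of geons plus the relations among them can be expressed as a small data structure. The following Python sketch is a hypothetical illustration: the `Geon` and `ObjectModel` classes, the coarse size labels, and the relation names are invented for this example and are far simpler than the geon attributes in RBC theory itself. It encodes the snowman described above and compares label-free structural signatures, so that the same geons in a different arrangement count as a different object.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Geon:
    """A volumetric primitive (hypothetical, simplified attributes)."""
    shape: str  # e.g., "sphere", "cylinder", "disc"
    dims: str   # coarse size/proportion label, e.g., "large", "long-thin"


@dataclass
class ObjectModel:
    """A structural description: labeled parts (geons) plus relations between parts."""
    name: str
    parts: dict = field(default_factory=dict)      # label -> Geon
    relations: list = field(default_factory=list)  # (part, relation, other_part)

    def add_part(self, label: str, geon: Geon) -> None:
        self.parts[label] = geon

    def relate(self, a: str, relation: str, b: str) -> None:
        if a not in self.parts or b not in self.parts:
            raise KeyError(f"unknown part in relation: {a!r} or {b!r}")
        self.relations.append((a, relation, b))

    def signature(self) -> Counter:
        """Label-free description: which kinds of geons stand in which relations."""
        return Counter((self.parts[a], rel, self.parts[b]) for a, rel, b in self.relations)


def build_snowman(head_relation: str = "on_top_of") -> ObjectModel:
    """The snowman from the text; head_relation lets us rearrange the same parts."""
    m = ObjectModel("snowman")
    m.add_part("body", Geon("sphere", "large"))
    m.add_part("head", Geon("sphere", "medium"))
    m.add_part("pipe_stem", Geon("cylinder", "long-thin"))
    m.add_part("pipe_bowl", Geon("cylinder", "short-wide"))
    for label in ("nose", "left_eye", "right_eye"):
        m.add_part(label, Geon("disc", "small"))
    m.relate("head", head_relation, "body")
    m.relate("pipe_stem", "perpendicular_to", "pipe_bowl")
    m.relate("pipe_stem", "at_mouth_of", "head")
    for label in ("nose", "left_eye", "right_eye"):
        m.relate(label, "on_front_of", "head")
    return m


if __name__ == "__main__":
    same = build_snowman().signature() == build_snowman().signature()
    rearranged = build_snowman().signature() == build_snowman("below").signature()
    print("same parts, same relations:", same)          # True
    print("same parts, head below body:", rearranged)   # False
```

The design choice worth noting is that the signature ignores part labels: what identifies the object is which kinds of geons stand in which relations, which is the core claim of a structural-description theory.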

Both Marr’s computational theory and Biederman’s RBC theory rely on contour information obtained in early stages of visual processing. One task that researchers have used extensively to examine the features available in early visual processing is the search task, in which a participant searches for a target among a field of distractors. The number of distractors and their features are manipulated. Increasing the number of distractors may increase the time it takes to find the target, because participants might engage in a serial search, processing each item one at a time. If the number of distractors does not affect response time, however, all of the items in the search array are likely being processed at the same time (parallel search). Serial searches take time and attentional resources, whereas parallel searches are fast and require little or no focused attention. Search-task results indicate that features such as color, edges, shape, orientation, and motion are extracted preattentively from a visual scene. According to feature integration theory, each of these features is placed into a separate map that preserves the spatial location of the features. Attention is then used to combine information from the different feature maps for a particular location, and this combination of features leads to object perception. Feature integration theory highlights two important aspects of the perceptual process. First, perception takes place in stages. Second, our perceptions are influenced not only by the sensory information gathered from the environment but also by other factors (e.g., attention and memory).
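The logic of inferring serial versus parallel search from response times can be illustrated with a simulation. This Python sketch assumes a hypothetical model (a 450 ms base time, 25 ms to inspect each item in a serial search, Gaussian noise, and target-present trials only, in which a serial search stops when it reaches the target); none of these values come from the text. The diagnostic quantity is the slope of mean response time against the number of items in the display.

```python
import random
import statistics


def simulate_rt(set_size: int, search: str, rng: random.Random,
                base_ms: float = 450.0, per_item_ms: float = 25.0,
                noise_sd: float = 20.0) -> float:
    """Hypothetical response time (ms) for one target-present trial."""
    if search == "parallel":
        items_inspected = 0  # all items processed at once; set size is irrelevant
    elif search == "serial":
        # Self-terminating search: the target is equally likely to be at any position.
        items_inspected = rng.randint(1, set_size)
    else:
        raise ValueError(f"unknown search type: {search!r}")
    return base_ms + per_item_ms * items_inspected + rng.gauss(0.0, noise_sd)


def slope(xs, ys) -> float:
    """Ordinary least-squares slope of ys on xs."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den


def search_slope(search: str, set_sizes=(4, 8, 16, 32), trials: int = 2000, seed: int = 1) -> float:
    """Mean RT at each set size, then the RT-by-set-size slope in ms per item."""
    rng = random.Random(seed)
    means = [statistics.fmean(simulate_rt(n, search, rng) for _ in range(trials))
             for n in set_sizes]
    return slope(set_sizes, means)


if __name__ == "__main__":
    for kind in ("parallel", "serial"):
        print(f"{kind:8s} search: {search_slope(kind):5.1f} ms per item")
```

Because a self-terminating serial search inspects on average (n + 1)/2 of n items, the expected serial slope is half the per-item time (about 12.5 ms per item under these assumptions), whereas the parallel slope stays near zero.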

This overview of thought related to sensation and perception shows that philosophers and researchers have attempted to address one key question: How do we derive an internal, mental representation of an external, physical world? Some approaches are phenomenologically based, whereas others are computationally based. All theories of perception, however, are constrained by the type of sensory information available for processing.

Sensation and Perception

The idea that we are information processors permeates many subfields within psychology (e.g., sensation and perception, cognition, and social psychology). An information-processing approach is fairly straightforward: there is input, processing, and output; put another way, we receive information, interpret it, and respond. Consider a computer. As I type, the computer scans for key presses, and each key press is very briefly stored in the keyboard buffer. When I type “a,” however, what is received and stored in the buffer is an electrical signal represented as a binary code, not an “a.” Once the input is received, it is interpreted by the processor: the binary code 01100001 becomes an “a,” and I see “a” on the monitor in the document I am typing. Likewise, when light reaches the retina, the photopigments in the rods and cones undergo a bleaching process that ultimately produces neural impulses that travel along the optic nerve to the lateral geniculate nucleus of the thalamus. The information received at the thalamus is not yet “what I see,” so it is relayed to the visual cortex in the occipital lobe for further processing. The object is identified in the “what” pathway in the temporal lobe, a decision about what to do with the object is made in the prefrontal cortex, and a response is generated in the motor cortex of the frontal lobe. Within this information-processing account, then, sensation is the input, perception is the processing, and our response to the stimulus is the output. Sensation is therefore often described as the process by which stimulus energies from the environment are received and represented by our sense receptors, and perception as the process by which that sensory information is interpreted into meaningful objects and events.
Following this traditional separation, if you are examining the sensitivity of the ear to particular frequencies of sound, you are studying sensation; if you are examining the perceived pitch of those sounds, you are studying perception.
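The keyboard example can be checked directly. This short Python snippet simplifies in the same way the text does (a real keyboard first sends a hardware scan code that the operating system maps to a character); it only shows that the character “a” corresponds to the 8-bit binary code 01100001 (decimal 97 in ASCII) and that interpreting those bits recovers the character.

```python
code_point = ord("a")             # 97 in ASCII (and Unicode)
bits = format(code_point, "08b")  # zero-padded 8-bit binary string
print(code_point, bits)           # 97 01100001

# Interpreting the stored bits recovers the character shown on the screen.
decoded = chr(int(bits, 2))
print(decoded)                    # a
```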

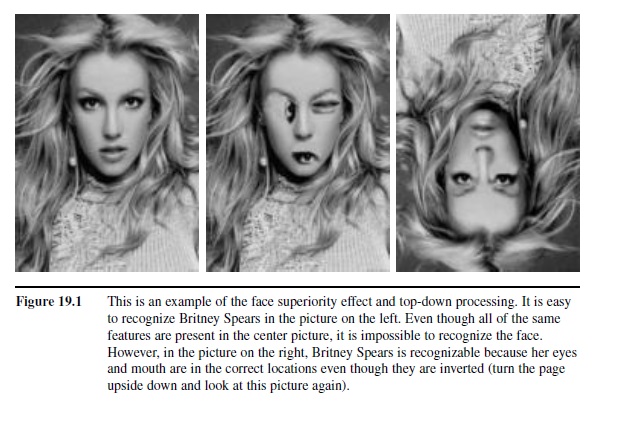

Sensory information can also be processed in a bottom-up or a top-down manner. Bottom-up processing proceeds from sensory information to higher level processing (as in the information-processing example just described). Top-down processing occurs when experience and expectations guide the interpretation of sensory information. Context effects are good illustrations of top-down processing. For instance, letters are easier to read when they make up a word than when they do not (Cattell, 1886), a phenomenon referred to as the word superiority effect. Likewise, a degraded word is recognized faster in the context of a sentence than when presented alone (sentence superiority effect; Perfetti, 1985). Similarly, objects in a line drawing are recognized more easily in an appropriate scene context than out of context (Palmer, 1975). Facial features also appear to be recognized in the context of the whole face (face superiority). For example, the picture in the left frame of Figure 19.1 is easily recognizable. The picture in the middle, on the other hand, is unrecognizable even though all of the same features are present. In the right frame, the picture is recognizable again because the eyes and mouth are in the proper locations. Now turn the page upside down: the eyes and mouth are in the proper locations but not in the proper orientations. In each of these examples, prior knowledge (anticipating the next word in a sentence, knowing which objects typically appear in a scene, or knowing the features of a face) influences how quickly the sensory information is processed.

As noted by Scharff in Chapter 27, Perception (this volume), the line between sensation and perception is sometimes fuzzy and difficult to delineate. In fact, introductory psychology texts sometimes treat sensation and perception as one continuous process (e.g., Myers, 2007). For the purposes of this research paper, however, sensation includes the reception of energy from the environment (e.g., sound waves) and the process of changing that energy into a neural impulse (i.e., transduction) that ultimately travels to the appropriate area(s) in the brain. The other chapters in this section describe sensation and perception for our various senses.

Figure 19.1 This is an example of the face superiority effect and top-down processing. It is easy to recognize Britney Spears in the picture on the left. Even though all of the same features are present in the center picture, it is impossible to recognize the face. However, in the picture on the right, Britney Spears is recognizable because her eyes and mouth are in the correct locations even though they are inverted (turn the page upside down and look at this picture again).

Multisensory Processing

Although each sensory modality is typically researched in isolation from the other senses, it is easy to think of examples in which the senses interact. For example, when you have nasal congestion, your ability to smell is impaired. You also cannot taste foods very well, because flavor depends on olfactory as well as gustatory input (e.g., Mozel, B. Smith, P. Smith, Sullivan, & Swender, 1969). Several types of sensory information interact with visual information. A common example is visual precedence. When you watch a movie, the action occurs on the screen in front of you, but the sound comes from speakers along the side walls. Nevertheless, you attribute the sound to the actors on the screen: the visual information takes precedence when attributing the source of the sound. Vision and touch interact as well. Graziano and Gross (1995) found bimodal neurons for touch and vision in the parietal lobe of monkeys. Specifically, with the monkeys’ eyes covered, particular neurons responded to the stroke of a cotton swab on the face. The same neurons also responded when the eyes were uncovered and a cotton swab moved toward the face, indicating that the neurons responded not only to touch within a tactile receptive field but also to visual stimuli associated with that receptive field. Another example involves spatial processing. Although there is a “where” pathway for processing visual spatial information, spatial information is also processed in relation to our own position in space. Head-centric processing refers to processing the locations of objects in space in relation to our head. The semicircular canals function somewhat like a gyroscope, providing information about rotation of the head. The ocular muscles that move the eyeball receive excitatory and inhibitory input from the semicircular canals (Leigh & Zee, 1999), and this input helps guide head-centric localization of objects.

Research on multisensory processing has grown in recent years. As this research continues, we will gain a better understanding of how the senses interact to produce the rich perceptual experiences we have of our environment.

Sensory Adaptation

Our sensory systems are largely designed to detect changes in the environment. Detecting change is important because a change in sensation (input) can signal the need for a change in behavior (output). For instance, imagine you are driving down the street listening to music when you suddenly hear an ambulance siren, so you pull to the side of the road. The new sound of the siren signals a change in driving behavior. Some stimuli, however, do not change. These constant sources of stimulation are usually not informative, and our sensory systems adapt to them. Specifically, sensory adaptation (also called neural adaptation) is a change over time in the responsiveness of a sensory system to a constant stimulus: the firing rate of the responding neurons decreases and remains lower when the stimulus is presented again. An afterimage is one example of sensory adaptation. You have probably experienced a color afterimage demonstration. A common version uses a flag with alternating green and black stripes and a yellow rectangular field filled with black stars in the upper left corner. When you look at this flag for approximately two minutes, your sensory system adapts to the green, black, and yellow colors. When you then look at a white background, you see alternating red and white stripes with a blue field filled with white stars in the upper left corner. This simple demonstration supports Hering’s opponent-process theory, in which color vision results from opposing responses to red-green and yellow-blue (and also black-white). Adapting to green, for instance, reduces the neural response to green, so the balance of the red-green opponent process shifts toward red. Therefore, when you look at the white background, you see red where you had previously seen green. Sensory adaptation also occurs in other aspects of vision (e.g., the motion aftereffect and adaptation to spatial frequency) and in other senses.
Researchers can therefore combine sensory adaptation with psychophysical methods to determine how the sensory system responds to physical characteristics of the environment (e.g., line orientation).
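The opponent-process account of the flag afterimage can be expressed as a toy model. In this Python sketch, which is a hypothetical illustration rather than a physiological model, each color is coded on three opponent channels (red/green, yellow/blue, white/black); adaptation builds up with viewing time according to an assumed exponential time constant (`tau`) and an assumed `gain`, and the adapted channels push the response to the white test field away from the viewed color and toward its opponent.

```python
import math

# Hypothetical coding on opponent channels:
# (red(+)/green(-), yellow(+)/blue(-), white(+)/black(-))
COLORS = {
    "red": (1.0, 0.0, 0.0),
    "green": (-1.0, 0.0, 0.0),
    "yellow": (0.0, 1.0, 0.0),
    "blue": (0.0, -1.0, 0.0),
    "white": (0.0, 0.0, 1.0),
    "black": (0.0, 0.0, -1.0),
}


def adaptation_level(seconds: float, tau: float = 20.0) -> float:
    """Fraction of full adaptation after constant viewing; tau (s) is an assumed time constant."""
    return 1.0 - math.exp(-seconds / tau)


def perceived(test, adapter, seconds: float, gain: float = 0.8):
    """Adapted channels respond less to the adapting color, so the test field is
    shifted away from that color, toward its opponent (gain is an assumption)."""
    a = gain * adaptation_level(seconds)
    return tuple(t - a * s for t, s in zip(test, adapter))


def dominant_name(vec) -> str:
    """Name the strongest chromatic channel, or white/black if neither is active."""
    rg, yb, wb = vec
    if max(abs(rg), abs(yb)) < 0.1:
        return "white" if wb >= 0 else "black"
    if abs(rg) >= abs(yb):
        return "red" if rg > 0 else "green"
    return "yellow" if yb > 0 else "blue"


if __name__ == "__main__":
    for color in ("green", "black", "yellow"):
        vec = perceived(COLORS["white"], COLORS[color], seconds=120)
        print(color, "->", dominant_name(vec))
```

Run as a script, it reports that the green, black, and yellow regions of the flag are followed by red, white, and blue afterimages, matching the demonstration described above; with zero viewing time there is no adaptation and the test field stays white.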

Sensory Memory

Miller’s (1956) well-known article “The Magical Number Seven, Plus or Minus Two” helped establish short-term memory as a fairly small memory store, and other work showed that it holds information for only a limited amount of time (Brown, 1958; L. R. Peterson & M. J. Peterson, 1959). Sperling’s (1960) use of the partial report method, however, provided evidence for a sensory memory store that could hold more information than short-term memory, but only for a brief period of time. Atkinson and Shiffrin (1968) therefore proposed a multistore model of memory that included sensory, short-term, and long-term stores. This model has been extremely influential in conceptualizing memory as an information-processing system and is still used today. As a result, sensory memory has been treated largely as an issue of memory rather than of sensation.
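The reasoning behind a partial report estimate can be written out as simple arithmetic. Because the cue indicating which row to report arrives only after the display has disappeared, performance on the cued row is treated as a sample of what was available for the whole array. The Python sketch below assumes a 3-by-4 letter array and uses hypothetical per-trial scores, not Sperling’s data; the function name is illustrative.

```python
from statistics import fmean


def partial_report_estimate(cued_row_scores, row_length: int, n_rows: int) -> float:
    """Estimate how many items were available in sensory memory:
    the proportion of the cued row reported correctly, scaled to the whole array."""
    if not cued_row_scores:
        raise ValueError("need at least one trial")
    if any(not 0 <= s <= row_length for s in cued_row_scores):
        raise ValueError("each score must be between 0 and row_length")
    proportion = fmean(cued_row_scores) / row_length
    return proportion * row_length * n_rows


if __name__ == "__main__":
    scores = [3, 4, 3, 2]  # hypothetical letters correct from the cued row on four trials
    print(partial_report_estimate(scores, row_length=4, n_rows=3))  # 9.0
```

With an average of 3 of 4 letters reported from the cued row, the estimate is 0.75 × 12 = 9 letters available, which can then be compared with how many letters the same participant reports when asked to recall the whole display (whole report).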

However, Phillips (1974) suggested that there are really two sensory-related stores. The first store lasts approximately 100 ms (for vision), has fairly high capacity, and is related to sensory processing. The second store holds information for up to several seconds, is limited in capacity, and is related to cognitive processing. In vision, this second type of store has become known as visual short-term memory (VSTM). Sensory memory is hypothesized to be necessary for integrating sensory information obtained over brief periods of time, as across saccadic eye movements; otherwise, sensory processing of the environment would start anew with each fixation, rendering a new visual scene approximately three to five times per second. Traditionally, four characteristics have distinguished sensory memory from other types of memory (Broadbent, 1958). First, sensory memories are formed without attention. Second, sensory memory is modality-specific. Third, sensory memory is a high-resolution store, meaning that a greater level of detail is stored in sensory memory than in, for example, categorical memory. Finally, information in sensory memory is lost within a brief period of time. Recent auditory research, however, has called these characteristics into question (Winkler & Cowan, 2005), leading some researchers to question the degree to which memory stores can be separated (e.g., Nairne, 2002).

Although additional research is necessary to develop a more complete picture of sensory memory and of how it interacts with and differs from other types of memory, sensory memory highlights an important point about sensory processing. As we interact with the environment, our senses provide us with information about what surrounds us. As we process that sensory information, we develop perceptions of the world. Although these perceptions develop quickly, they do take some time. Sensory memory, therefore, keeps information available for the additional processing necessary for us to understand that sensory information.

Summary

Sensory processing provides a link between the external physical world and the internal neural world of the brain. Sense receptors convert energy from the physical world into neural impulses that are processed in various cortical areas as basic components of a visual object, a sound, and so on. Sensory information is held briefly in sensory memory for additional processing, leading to our perceptions of the world. Sense receptors also reduce the amount of information we process since they are only receptive to a particular range of physical stimuli. Additionally, we can adapt to sensory information that is not informative. Finally, although sensory systems can be examined independently, our sensory systems are integrated to give us complex perceptual experiences about the world we interact with.

References:

- Atkinson, R. C., & Shiffrin, R. M. (1968). Human memory: A proposed system and its control processes. In K. W. Spence & J. T. Spence (Eds.), The psychology of learning and motivation: Advances in research and theory (Vol. 2, pp. 89-195). New York: Academic Press.

- Biederman, I. (1987). Recognition-by-components: A theory of human image understanding. Psychological Review, 94, 115-147.

- Broadbent, D. E. (1958). Perception and communication. New York: Pergamon Press.

- Brown, J. (1958). Some tests of the decay theory of immediate memory. Quarterly Journal of Experimental Psychology, 10, 12-21.

- Cattell, J. M. (1886). The influence of the intensity of the stimulus on the length of the reaction time. Brain, 9, 512-514.

- Ehrenfels, C. von. (1890). Über Gestaltqualitäten. Vierteljahrsschrift für Wissenschaftliche Philosophie, 14, 249-292.

- Gendler, T. S., & Hawthorne, J. (Eds.). (2006). Perceptual experience. New York: Oxford University Press.

- Graziano, M. S. A., & Gross, C. G. (1995). The representation of extrapersonal space: A possible role for bimodal, visual-tactile neurons. In M. S. Gazzaniga (Ed.), The cognitive neurosciences (pp. 1021-1034). Cambridge, MA: MIT Press.

- Leigh, R. J., & Zee, D. S. (1999). The neurology of eye movements (3rd ed.). New York: Oxford University Press.

- Marr, D. (1982). Vision. San Francisco: W. H. Freeman.

- Miller, G. A. (1956). The magical number seven, plus or minus two: Some limits on our capacity for processing information. Psychological Review, 63, 81-97.

- Mozel, M. M., Smith, B., Smith, P., Sullivan, R., & Swender, P. (1969). Nasal chemoreception in flavor identification. Archives of Otolaryngology, 90, 367-373.

- Myers, D. G. (2007). Psychology (8th ed.). New York: Worth.

- Nairne, J. S. (2002). Remembering over the short-term: The case against the standard model. Annual Review of Psychology, 53, 53-81.

- Palmer, S. E. (1975). The effects of contextual scenes on the identification of objects. Memory & Cognition, 3, 519-526.

- Perfetti, C. A. (1985). Reading ability. New York: Oxford University Press.

- Peterson, L. R., & Peterson, M. J. (1959). Short-term retention of individual verbal items. Journal of Experimental Psychology, 58, 193-198.

- Phillips, W. A. (1974). On the distinction between sensory storage and short-term visual memory. Perception and Psychophysics, 16, 283-290.

- Sperling, G. (1960). The information available in brief visual presentations. Psychological Monographs, 74, 1-29.

- Winkler, I., & Cowan, N. (2005). From sensory to long-term memory: Evidence from auditory memory reactivation studies. Experimental Psychology, 52, 3-20.
