Abstract

The concept of dual use refers to the misuse of civilian technology for hostile purposes. While dual use in warfare has been practiced for millennia, the global risks of an adverse biosecurity event happening today are hugely increased by the rapid advance of biotechnology research and industry. Arguably lacking in a parallel development of ethical consideration and debate, biotechnology is not only a massive benefactor to humankind but also a potential source of significant risk to human security due to its nature and scope. The possibilities for misuse arising from it not only include potential dual use applications in the form of biological and/or chemical attacks by both state and non-state groups but also, and probably more likely, unintentional outcomes arising in the form of accidental exposures. This entry looks at some examples of historical and current events and considers some ethical approaches in a bid to introduce the topic in a general sense and relate it to bioethics.

Introduction

This entry aims to lead from a description of the ethical problems of dual use towards some possible ethical responses. While dual use can refer to a range of applications in the real world, in this entry the focus is on the security understanding of the term: the appropriation of peaceful technology for hostile purposes, often military. This entry also highlights nonmilitary dual use and the potential for unintended dual use that may be employed by, or may result from actions taken by, substate-level groups (including scientists and other technologists), which must also be considered as security risks.

For the purposes of this entry, the term “technology” is used to refer to science and scientific activity as well as other forms of technology, in its broadest sense. While this entry discusses biological weapons as a focus of bioethics, the issue of chemical weapons is also included as these weapons are also a bioethical challenge. The World Health Organization (1970) defined biological agents as those that “depend for their effects on multiplication within the target organism, and are intended for use in war to cause disease or death in man, animals or plants” (WHO 1970, p. 12). It also defined chemical weapons as agents of warfare including “all substances employed for their toxic effects on man, animals or plants” (WHO 1970, p. 12).

Clearly, there is an overlap in these definitions. Ethical consideration of this convergence is increasingly important due to the difficulties experienced by both scientists and the security community in dealing with these two branches of science as separate entities.

Conceptual Clarification

As a concept, dual use is often referred to in the form of biological or chemical weapons, or even as “weapons of mass destruction.” While recognizing these as some of the most well-known applications of dual use, it is also possible to consider the use of any peaceful civilian technology that has been adapted for the purposes of aggression as an example of dual use. The term can also refer to any peaceful technological developments that are to be delivered by aggressive means (e.g., through terrorist activity) to achieve a specific desired outcome.

At its simplest level, dual use could refer to something as ordinary as the use of a kitchen knife. While its primary purpose is to cut up food, the knife may also be used to kill someone. This is not usually thought of as being “dual use,” but it is. In the same way, humans have long sought to use disease, poisons, and venom as biological and toxin weapons against enemies, even when they did not fully understand the ways in which these worked. The process also works in reverse, so a disease-causing pathogen may be turned into the basis for a vaccine. This is also a form of dual use, because the pathogen exists for one purpose (in nature, the pathogen exists to reproduce itself and to be passed on between organisms), but through scientific endeavor, it is turned to another, beneficial, use for the betterment of humankind. Problems may then emerge if the vaccine is used in order to manipulate health, of course. By denying it to certain groups, or using it as a means of controlling some aspect of wellbeing, vaccines can become an unethical tool and therefore another example of hostile or malign dual use.

Today, humanity is faced also with the possibility that dual use may be achieved unintentionally through scientific advances that slip through existing containment processes or which are advanced without sufficient ethical debate. It is also useful to consider two further sources of risk – state level and substate level activities – as posing a top-down risk (state activity) and a bottom-up risk (substate activity). Both require ethical responses applied in practice.

In security terms, the intentional hostile use of technology is viewed, at least by those not deploying such weapons, as the misuse of technology. The term “misuse” expresses a judgment. This element of judgment gives rise to some disagreements between those working in security circles and, for example, the world of science.

Several Western powers developed biochemical weapons programs in the twentieth century before abandoning them (Dando 2006, chapters 3–4). While the West is currently highly judgmental about the use of such weapons by countries which have used chemical and biological weapons since the advent of international prohibitions, this does not prevent the West from holding nuclear armaments, which can also be judged as a misuse of science by the same moral or ethical standards.

In recent years, the concept of the intentional misuse of technology, in which technology is weaponized on purpose, has been expanded to also include the unintended outcomes of technological advancement (Taylor 2006). Here, technology is rendered useful as a weapon through an unintended consequence of technological activity. This is ethically complex, as it is often difficult to decide what constitutes a genuine accident and what is just an unintended but reasonably foreseeable possible outcome. Whatever the cause of the outcome, the results of any accidental or unintentional adverse consequences of technological advancement can be of the same or similar magnitude as those arising from intentional misuse. This poses a significant ethical dilemma.

The potential for dual use, inherent in much technological advancement today, is currently something of a contested area between security and science professionals especially (Yong 2012). Significant educational efforts are being made currently to prevent or minimize the risks of such unwanted outcomes occurring (Rappert 2010), but serious concerns are expressed by scientists about the possible limitations such efforts may impose on research. How the interests of security and science are to be balanced is a key ethical issue.

There have been numerous documented and suspected cases of the use of biochemical weapons in the twentieth and twenty-first centuries, as well as earlier. Today, the risks of dual use are potentially greater than ever before. It is reasonable to assume that the development and potential use of nuclear and radiological armaments is restricted largely, if not wholly, to state-level actions due to the required infrastructure and costs of production. However, a greater range of risks is potentially to be found among those substate-level individuals or groups working in science and technology who, if so minded, could easily access the necessary equipment and materials to produce biological and chemical weapons purposely. In addition, there is always the chance of the accidental release of pathogens or information, enabling malicious individuals or groups to carry out hostile acts.

This raises the issue of security concerns associated with current scientific and industrial advances in biotechnology, which are massive and being carried out on a global scale. Many countries see research and development in biotechnology as a relatively “quick fix” to their economies, and the fields of pharmaceuticals, agriculture technology, neuroscience, and genetics, to name a few, are the sources of significant income to many developing, and developed, economies. As these activities develop and expand, the risks of unintended consequences also expand. It is to this area that focus is needed, applying an ethical lens in order to consider dual-use risks both in the present and the future.

In 2000, Matthew Meselson of Harvard University’s Department of Molecular and Cellular Biology said:

Every major technology — metallurgy, explosives, internal combustion, aviation, electronics, nuclear energy — has been intensively exploited, not only for peaceful purposes but also for hostile ones. Must this also happen with biotechnology, certain to be a dominant technology of the twenty-first century? (Meselson 2000)

Meselson went on to comment:

If this prediction is correct [citing a 1989 prediction of biotechnology as a source of security problems], biotechnology will profoundly alter the nature of weaponry and the context within which it is employed. … During the century ahead, as our ability to modify fundamental life processes continues its rapid advance, we will be able not only to devise additional ways to destroy life but will also become able to manipulate it — including the processes of cognition, development, reproduction, and inheritance. … Therein could lie unprecedented opportunities for violence, coercion, repression, or subjugation. (Meselson 2000) [Italics mine]

Such are the possibilities of dual use ahead of us. Clearly, this is an issue that needs to be addressed at the highest levels of politics and ethical debate.

History And Development Of Dual-Use Weaponry

Dual Use Prior to the Twentieth Century

Humans have used biological and toxin weapons in warfare, in one form or another, for thousands of years. Venomous snakes, scorpions, mandrake root in wine, toxic honey, diseased body parts, and the unidentified Greek fire have all been used as a means of overpowering enemies in antiquity (Mayor 2009). Perhaps the best documented example of a verifiable biological weapon attack in the historical past was carried out by the British against elements of the Native American population in North America in 1763. The British military, when under siege at Fort Pitt, gave smallpox-infected blankets to a group of Native Americans in order to pass on the disease (Dando 2006). The desired outcome was achieved.

In antiquity and into the Common Era, Jewish, Christian, and Muslim authorities have all attempted to lay down rules governing what is now known as conventional conflict between nations and groups, but the abhorrence of biochemical weapons was first addressed by international agreement in the nineteenth century.

The development of biological weapons in the twentieth century was enabled by the scientific discoveries of the previous century. Work by Lister, Pasteur, and Koch on the mechanisms of microorganisms was foundational in the elaboration of modern public health measures and the development of responses to what had, in the previous generations, wiped out millions through infection. Koch’s postulates showed how the introduction of a microorganism into a healthy host would not only result in illness developing in the host but also potentially pass on the microorganism to further hosts. This became the basis for the development and use of biological weapons.

Prior to the beginning of World War I in 1914, people were already thinking about how to use the science behind human health improvements as a means to develop biological agents as weapons. In the Brussels Declaration of 1874 and the two Hague Conventions of 1899 and 1907, codified responses to the use of poison in warfare were devised, in order to prohibit the use of such weapons. However, chemical weapons were still used in WW1 (Dando 2006, p. 16).

As a response to the use of chemical armaments in WW1, the Geneva Protocol of 1925 subsequently addressed the use not only of chemical weapons but also of “bacteriological” weapons. Unfortunately, as this Protocol only prohibited the use of such weapons, a considerable amount of work subsequently went into their research and development instead. Various countries established research into biochemical weapons during and after WW1. During and following WW2, Germany, France, Japan, Britain, the USA, and Russia, among others later, became engaged in active work to research, develop, and stockpile biochemical weapons. In so doing, they launched programs to develop their own offense/defense capabilities (Dando 2006).

By the early 1970s, international opinion around such weapons of mass destruction led to the inception of the Biological and Toxin Weapons Convention, which entered into force in 1975 (see the United Nations Office at Geneva website). This was the first international treaty to completely ban an entire class of weapons. By that time, most national weapons programs had closed, although some had not. This was followed by the Chemical Weapons Convention, which entered into force in 1997, the first treaty to ban an entire class of weapons with a verification process (see Organization for the Prohibition of Chemical Weapons, OPCW, website).

In practice, there are still substantial problems with these two classes of weapon. There is no verification process under the BTWC, which relies instead on states to report their “confidence building measures.” There is also, of course, a remaining ethical challenge in the form of those states who have chosen not to either sign or fully ratify their engagement with either the CWC or the BTWC.

Ethical Dimensions In Addressing Dual Use

Current State Of Play

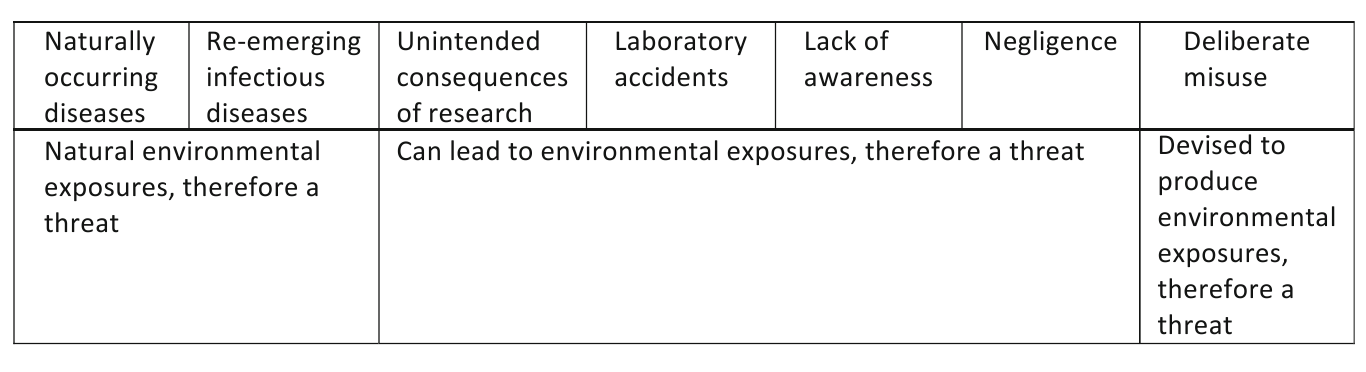

Taylor (2006) outlined the full spectrum of biological risks (Fig. 1) that must be addressed if the international community is to recognize and respond comprehensively to the problem of dual use through all aspects of the biotechnology being practiced today, often in areas of the world that are not paying as much attention to ethics as others. From left to right, this spectrum moves from naturally occurring diseases present in the environment through to deliberate human-made threats that can have the same disastrous effects.

Much bioethical debate has been going on for years about the nature of environmental threats to human health as seen on the left of the spectrum, including biosecurity in terms of disease (through public health responses), food security (agricultural biosafety responses), and environmental protection in general (environmental bioethics), among others. To date, however, there has been an insufficient practical ethical focus on the areas towards the right side of the spectrum. Society has trusted in science to work for the common good, which of course it does. However, the economic flourishing of biotechnology must be matched with an equally strong ethical response to both challenge and support it. Biosafety is a powerful tool in protecting humans from “bad” pathogens. But who is protecting pathogens from “bad”, misguided or uninformed people? This is where biosecurity and bioethics can meet.

Biosafety is built on the ethical principle of “do no harm” and on protection, through containment, of the individual, the group, and the environment from unwanted exposure to harmful pathogens. Biosecurity is built on the same ethical principles of “do no harm” and on protection, through containment (or some form of appropriate restriction, whether temporary or permanent) of information, knowledge, and communications that could result in either accidental, unintentional, or deliberate harm arising from scientific and technological advances.

Biosafety stipulates that certain activities should not be undertaken, or undertaken only under strict regulation, due to the risk of exposure or escape of pathogens or other harmful substances from containers or from the laboratory. Certain risk-mitigating questions are asked prior to work commencing and as work goes along; appropriate responses are then made in the form of containment processes and standard operating procedures. This is all ethically mediated even if it is not obvious. In the interests of doing no harm, biosafety imposes various limitations on personal and group autonomy in the pursuit of individual and group safety. Consent to this is required in order to work in the laboratory. Benefit to humankind is assumed.

Biosecurity likewise stipulates that certain activities should not be undertaken, or at least restricted, due to the risk of “exposure” or “escape” of information, knowledge, and communications from the laboratory or office of the scientist or technician. Just as a pathogen can be dangerous, so can information, knowledge, and communications be dangerous if they provide a person with hostile intent with the necessary means to effect a biological or chemical attack.

Figure 1. The full spectrum of biological threats, showing the links between natural and man-made environmental exposures (After Taylor 2006, p. 61)

However, ethical responsibility tends to be deemed to have ended once work is published or otherwise completed, as in biosafety. This is a crucial gap in the biosecurity sense of responsibility as is shown later in this entry. Biosecurity is mediated by the same ethical principles as biosafety. Unless practitioners are aware of the risks and of a practical ethical framework in which to work, no ethical recognition can occur and no risk mitigation can be put in place. In biosecurity, the scientist needs to look ahead, post-publication or post-research completion, and consider his/her responsibilities as they can be reasonably foreseen at that time. He/she can then take action to prevent or mitigate these reasonably foreseeable biosecurity risks in the most effective way possible at that time.

Thus, the ethics education of science technologists is itself an ethical issue. Effective, ethically driven biorisk management (combining both biosafety and biosecurity) is therefore one of the strongest tools in our global response to the potential threats to humankind of biological and chemical weapons as a form of dual use.

Ethics And Dual Use In Practice

There are at least three problems in addressing biosecurity in the world of practicing science and technology. Firstly, there is the problem of the ethical issues inherent in dual use itself. Secondly, there is the often unrecognized potential for unintended dual use that may develop if steps are not taken to mitigate these risks. Thirdly, there is the need for a broader understanding of practical ethics – most people associated with potential dual-use activities are not specifically trained in ethics, leading to potential weaknesses in the recognition of ethical problems and how to tackle these.

This is not saying that scientists and other professionals do not have any existing ethical values or perspectives – they clearly do – it is merely suggesting that without explicit training in applied ethics, it is more difficult to evaluate and respond to the risks in research. Taylor stated “… to realize the full humanitarian and economic benefits from the advances in the life sciences, it is essential that … risks are properly identified, understood and effectively managed” (2006, p. 61) and “… there is a need for leadership from the private and academic sectors of the life sciences to identify and manage biological risks, whatever their origin” (2006, p. 63).

The ethical perspective on dual-use biochemical weapons is already addressed in theory if not in practice. Global opinion has been expressed in the form of the two prohibition treaties, the BTWC and the CWC. Most reasonable adults agree that the use of such weapons is abhorrent. However, despite the treaties, we still see their use in practice.

The problem of the widespread lack of recognition of the potential for unintended outcomes in science and technology communities today must be addressed through education in ethics and in the practical application of ethical values to scientific and technological work within a framework combining biosafety and biosecurity as biorisk management.

The lack of specific training in ethics is complex. Most people confuse their private values with public ethics and are insulted if told they need to be “taught” ethics. Additionally, ethics is often seen as a door slamming on progress. This is often the fault of the people promoting ethics. If ethics were to be taught and promoted more effectively, there would be better “buy-in” to it and the world would be a safer place.

Identifying Ethical Standards In Dual-Use Situations: Private And Public Tensions

The various commonly recognized ethical principles, such as individual and group autonomy, informed consent, beneficence, the requirement to do no harm, and long-term responsibility for the outcomes of science, are relevant to the dual-use scenario because, by applying them, risks may be reasonably foreseen. These are fairly comprehensive principles that most would agree with. It is useful to consider all dual-use situations by reference to them.

Most people without a formal ethics education would still agree that ethics is a set of standards that govern how people should act towards one another. Views depend on the perspective and role of the person considering his/her ethical stance, whether a child, parent, student, scientist, teacher, businessman, citizen, adherent to a religion, politician, armed forces member, and so on. People hold multiple roles in their daily lives. Unfortunately, this multiple identity then leads to the private/public paradox: what is ethically acceptable at home may not be acceptable at work or in the public arena. How can these differences be balanced with the minimum of tension to the individual and of conflict for the group or wider public?

Ethical values have been traditionally associated with personal morals and religious ideals. In the current global climate in which religion is seen as an increasingly divisive concept, this is not always helpful. However, most people would probably agree that those religious ideals which focus on the common good, doing no harm and promoting health, happiness, and harmony, are worth including in the ethics spectrum.

There is also a tension here between personal ethics and community ethics. Should an individual adhere to his personal ethics, values, and morals or should he, in certain circumstances, accept that these have to take second place to the ethics, values, and morals of his group (ethnicity, nationality, professional association, and so on)? For example, if a local scientist is employed in a developing country whose biotechnology industry is growing fast and is providing her with great economic benefits, why should she worry that biosecurity is not considered adequately in her laboratory? Why should she care if dual use arises from her work as long as her family is fed? The inner conflict comes typically only when adverse outcomes have occurred. By then, it is usually too late for hand-wringing.

On a state level, by accepting biological weapons, is the scientist ethically defending himself and his family while attacking someone else and his family? Is the first use of biological weapons acceptable if it deals a lethal blow to the efforts of the scientist on “the other side,” preventing further hostilities? If scientist A responds to the first use of biochemical weapons by scientist B and, in so doing, kills or causes serious illness to enough of scientist B’s population to prevent them from further hurting scientist A’s population in response, is that acceptable?

Dual Use And Bioterrorism

Bioterrorism, even a generation ago, would have been unthinkable. Today, it is possible. One may argue that the risks of bioterrorism are exaggerated and that such attacks could only cause small-scale disruption. However, as is increasingly obvious to populations around the world, it is not the size of the terrorist event that is the principal cause of disruption; it is the governmental response to it. In advance of potential attacks, there is the implementation of preparedness through limitations on personal freedom arising from security needs. After an event, there is the further strengthening of security at the expense of more personal freedoms, which often constitutes the greater event in itself. Of course, there is also the potential for a bioterrorism event to cause harm to subsequent generations of the victims’ families. Thus bioterrorism can still cause major disruption from a potential or actual relatively small event, the perpetrators knowing that governments cannot be seen to allow danger on their own streets. Bioterrorism is therefore likely to remain a public issue.

Ethics At The Point Of Transition To Hostile Use

Somewhere behind most dual-use weapons, it is possible to identify the point at which a civilian development was turned into a weapon. For example, guns, bombs, grenades, rockets, and so on are all dual-use applications because in their original forms, they relied on gunpowder. This substance was apparently developed as an unintentional outcome of Chinese alchemy – ironically, in the search for an elixir of immortal life – which became, through dual use, one of the most influential and harmful substances devised by humankind. From its first use in fireworks, its potential for use in weaponry started when someone thought this would be a good way to advance his interests. The rest, as they say, is history.

At what practical point should, or could, the possibility of the dual use of gunpowder technology have been recognized? At the point of invention? During the transition to dual use? At the point of first hostile use? Perhaps dual-use risk was recognized, but more powerful voices won out. Is it always possible to recognize when the transition to dual use is being considered or actually being carried out? Should dual use have been stopped once it had been started? How can the ongoing repercussions of dual use be stopped? Is that even possible? All of these are ethical questions. The answers vary depending on the questioner and respondent and on their various perspectives, depending on their background, personal values, religious ideals, morals, and public ethical stance.

An Example From The Recent Past

A good example of these dilemmas is seen in a twentieth-century dual-use case that illustrates not only the difficulties of dual-use prevention that can occur in practice, but how the human repercussions and the ethical issues keep on growing over time even after dual-use implementation has been stopped.

Arthur W. Galston was a botany graduate in the USA during the 1940s. He identified a synthetic substance that improved the growth of plants. As well as emphasizing the positive aspects of his synthetic substance, Galston also reported, in his 1943 thesis, that if the substance were applied in heavy concentrations, it was toxic.

Later, during the 1960s, Galston found that the US government had used his work, and that of others, to develop powerful herbicides for warfare. He had, unknowingly, actively contributed to the development and use of Agent Orange. The US military went on to deposit over 50,000 t of this toxic material across Vietnam. Galston then engaged in protests about this, and from 1966 communicated the risks of the substance to the US government. However, the damage was done. The effects of Agent Orange are still seen today, not only in those who were directly affected by exposure but also in those of subsequent generations affected by birth defects and other impairments (King 2012). Galston said:

I used to think that one could avoid involvement in the anti-social consequences of science simply by not working on any project that might be turned to evil or destructive ends. I have learned that things are not that simple. . . . The only recourse is for a scientist to remain involved with it to the end. (Galston 1972).

Is Galston responsible for the development of Agent Orange? If not, what degree of responsibility, if any, does he hold? If he is not responsible, who is? Should he have done something to prevent his work being misused in this way? Why should he have expected anyone to misuse his work? Was the development of Agent Orange a misuse at the time? Most reasonable people today would say that it was – but is this view simply benefitting from hindsight? Even had Galston wished to protest at the misuse of his work prior to it being actually used, on what grounds could he have complained at that time, given that the use was planned as a military tactic by his own government? What are the ethical responsibilities of the US government to those who were victims of Agent Orange both before and after concerns were raised about its effects on humans? Where does the responsibility stop?

In the past, a soldier killed by a gun, maimed by a bomb, or blinded by an explosion flash could be affected only inasmuch as his own life prospects, and perhaps those of his family, were subsequently diminished by death, illness, or other incapacity (all of which are terrible but are limited to a relatively small group of people within a wider population). With the use of biological weapons, however, the direct target is not the only victim. Firstly, tens, hundreds, thousands, or more of the target’s neighbors are likely to be infected as well, going on to infect their neighbors. Secondly, in the case of some chemical weapons, the target’s children, grandchildren, and subsequent generations may also be affected as though directly, through the continuing legacy of birth defects, genetic conditions, and other physical and mental impairments that can have a severely limiting effect on their longevity and quality of life. Is this a just way to carry out war?

A paper discussing studies into the effects of Agent Orange on US and Vietnamese people (King 2012) provides a useful demonstration of the ongoing nature of the associated ethical issues (the italics in the quote below are added, as are the comments in square brackets):

In 1991 … the United States Government passed the Agent Orange Act. This act mandates the US government to pay for the medical care of any Vietnam War veteran, regardless of length of service, related to an Agent Orange disease [related to does not mean a cause-and-effect relationship, only a presumed relationship]. These recognized diseases [this term refers to the recognition of the diseases themselves, not their proven relationship to Agent Orange] include several types of cancer, Parkinson’s disease, and ischemic heart disease. In addition, The US congress passed subsequent bills to include compensation for children with … birth defects born to female veterans.

Researchers have struggled to describe the effects of Agent Orange on Vietnamese civilians. … First, the effect of dioxin [the active component in Agent Orange] on embryonic development varies by the amount of dioxin the embryo is exposed to [implies that there must be a proven cause-and-effect relationship in determining illness]. Many of those who researched Vietnamese populations studied medical records and self-reports of exposure, thus quantitative measures of exposure were rare [this is focusing on methodological weaknesses in research as a reason not to accept the effects of dioxin on the Vietnamese people in these studies]. Second, dioxin persists in soil, which can contaminate future crop yields. Dioxin can also contaminate fish, a major food source in Vietnam, one hypothesized to be the major source of dioxin exposure to Vietnamese civilians. Because dioxin can persist in the environment, researchers struggle to estimate dioxin exposure levels and to definitively state the source of that dioxin exposure [again, this relies on a lack of research proving cause-and-effect to bolster the argument that Vietnamese victims cannot be compensated.]

So, here can be seen an ethically challenging inconsistency in the way that the effects of dual use are dealt with between two groups of victims. In order to recognize the effects of Agent Orange on US veterans, solid, methodologically sound research proof in each case of a definite cause-and-effect relationship is not necessary; for Vietnamese people, it is. This twenty-first century situation arose from the misuse of a fertilizer devised in the 1940s, and it is still echoing ethically today. By failing to “do no harm,” those assuming an element of responsibility for the exposure of people to a harmful agent are trying to “make up for” their ethical failure. However, they are also failing ethically now, in that they are applying one rule for one group and another rule for another group. This could be read as a further failure to “do no harm.”

This continuing ethical narrative is further demonstrated on the US Veterans Affairs (VA) website, which states (again, italics are added):

VA assumes that certain diseases can be related to a Veteran’s qualifying military service. We call these “presumptive diseases”. VA has recognized certain cancers and other health problems as presumptive diseases associated with exposure to Agent Orange or other herbicides during military service. Veterans and their survivors may be eligible for benefits for these diseases. (US Department of Veteran Affairs 2013)

Many of the presumptive diseases are also highly associated with Western lifestyle choices. In King’s paper above, the potentially non-Agent Orange environmental exposures (e.g., dioxin lingering in the soil or in fish) suffered by Vietnamese were reasons to discount Agent Orange as a causative factor of their disease. For the US veterans of Vietnam, by contrast, known environmentally mediated diseases such as diabetes, ischemic heart disease, and prostate cancer are all willingly presumed to be due to exposure to Agent Orange and may possibly be the subject of funding. This is a massive ethical issue resulting from a dual use application. The “presumption” that certain diseases are a result of veterans’ military service in Vietnam and presumed exposure to Agent Orange is not ethically wrong in itself. Rather, it is ethically admirable: the government is willing to accept responsibility for the disease in individuals even if the presumption of cause is wrong (many veterans will have led postwar lifestyles that are highly associated with many of the presumptive diseases). However, when the same presumption of cause is not also offered to the other people exposed to Agent Orange, in this case, the Vietnamese, it is ethically questionable, if not simply wrong.

While considering this example from the USA, it is important to recognize that all major Western powers have engaged in the past in ethically dubious activities in pursuit of their own goals, and the ethical problems arising out of the use of Agent Orange could equally well be illustrated by the past activities of many of them. However, in times past, societies did not necessarily have the benefit of an effective, developed, and developing concept of bioethics. Today, ethics is a significant and growing element of public policy; in the area of bioethics, public bodies are being held to account at an ever higher level of responsibility. There are fewer and fewer excuses for this sort of policy today because of this ethical accountability, but politics tends to put up barriers to ethics in practice.

International Recognition Of The Risks Of Biotechnology And Early Responses

In the BTWC and the CWC, the world has the benefit of a top-down (although not comprehensive) approach with which to control dual-use risks. Many states have enacted national legislation to include the requirements of the BTWC and the CWC. However, there also needs to be a bottom-up approach. Biosafety is well entrenched as a norm within science and technology. Recent international activities have now begun to address the biosecurity risks of biotechnology, many of them taking an ethical approach. This is increasing the nature and scope of states’ and institutions’ effective responses to biothreats around the world today. By engaging scientists and biotechnologists of all relevant disciplines in ethics and bioethics, these communities will be better equipped to play their part in managing and minimizing the biorisks arising from dual use. Such education can strengthen the existing skills of scientists and others from the start of their careers to the end (Sture 2013).

Much international progress, based on many meetings around the world focusing on biosecurity oversight and education, has been published in the USA, in collaboration with other national partners. The first of several important reports from the US National Academy of Sciences dealing with national security and the life sciences was published in 2004, known as the Fink Report (NRC 2004). Seven recommendations were made, highlighting areas of action, including: educating the scientific community; review of plans for experiments; review at the publication stage; creation of a national science advisory board for biodefense; additional elements for protection against misuse; a role for the life sciences in efforts to prevent bioterrorism and biowarfare; and harmonized international oversight. The report also identified a range of “experiments of concern” having these characteristics: experiments that would demonstrate how to render a vaccine ineffective; experiments that would confer resistance to therapeutically useful antibiotics or antiviral agents; experiments that would enhance the virulence of a pathogen or render a nonpathogenic agent virulent; experiments that would increase the transmissibility of a pathogen; experiments that would alter the host range of a pathogen; experiments that would enable the evasion of diagnostic or detection tools; and experiments that would enable the weaponization of a biological agent or toxin.

The Fink Report was soon followed by the Lemon-Relman Report (NRC 2006), which recommended five actions: the endorsement and affirmation of policies and practices that, to the maximum extent possible, promote the free and open exchange of information in the life sciences; the adoption of a broader perspective on the threat spectrum; the strengthening and enhancement of scientific and technical expertise within and across the security communities; the adoption and promotion of a common culture of awareness and a shared sense of responsibility within the global community of life scientists; and the strengthening of public health infrastructure and existing response and recovery capabilities.

A major meeting on biosecurity ethics education was held in Warsaw in November 2009, and its recommendations were published in 2010 (NRC 2010). The meeting concluded, among other things, that: an introduction to dual-use issues should be part of the education of every life scientist; this education should usually be incorporated within broader coursework and training rather than via standalone courses; an international open-access repository of materials that can be tailored to and adapted for the local context should be set up, perhaps as a network of national or regional repositories; the repository should be under the auspices of the scientific community rather than governments, although support and resources from governments will be needed to implement the education locally; and materials should be available in a range of languages.

The USA has in recent years set up its own “watchdog” board to consider issues of biosecurity, following on from these reports. The National Science Advisory Board for Biosecurity (NSABB) considers issues of biosecurity for the US government (see the website of the National Institutes of Health’s Office for Science Policy):

NSABB has defined dual use research of concern as research that, based on current understanding, can be reasonably anticipated to provide knowledge, products, or technologies that could be directly misapplied by others to pose a threat to public health and safety, agricultural crops and other plants, animals, the environment or materiel … (NIH website, no date)

Ethics And The Technologist Today

So, how does the ethically aware scientist or technologist know that his/her work is not going to be used for hostile purposes in the future? Should there be some level of prohibition of research into new or changing pathogens if they have the potential to cause serious disease if not contained properly? Should scientists be “allowed” purposely to change the form or function of a pathogen in controlled laboratory circumstances, just in case it may happen “in nature,” thus providing an advantage should there ever be a need to produce a vaccine for it? Where should the line be drawn on any scientific or technological advance just in case dual use arises from it? Should a line be drawn at all? Interestingly, NSABB, the US biosecurity advisory board, was unable, or unwilling, to prevent the publication of two recent controversial papers describing how scientists purposely engineered new, more virulent flu pathogens. Under public and professional pressure prior to the publication of the first of these papers, NSABB was tasked to investigate whether this publication should go ahead. Despite the concerns of many, it did (National Institutes of Health 2012). This raises questions about the uses and limitations of such national committees. Controversy still surrounds this case.

Dual Use Bioethics And Education: A Range Of Approaches

A range of philosophical and ethical approaches have been and still are used in the education of scientists, although their depth and scope are variably applied, and sometimes they are not applied at all. Uptake of these depends to a large extent on the willingness of the individual to question his/her own work. There are also many scientific communities around the world that are, for their own reasons, more driven by economics than by ethics. To these communities, ethics is arguably an afterthought, if thought of at all. This is not only a bioethical issue but a politico-cultural one. Work has shown that many university science programs have little or no focus on ethics (e.g., Manchini and Revill 2008), highlighting the need to educate scientists in applied ethics as a means of acting out a biosecurity or chemical security norm.

The cost-benefit approach (always a popular response when the topic of ethics is mentioned) asks scientists to gauge the “costs” (downside) of their research against the benefits of their work. The problem here is that it is not always possible to foresee the costs. Where do you draw the line in terms of time and space when considering possible costs? Think of Galston and his fertilizer. Obviously, most scientists are hardly likely to weigh the costs of their work as greater than the benefits. When large grants, prestigious publications, promotion, TV programs, and media attention are at stake, what scientist is going to “cost” himself out of the game? Equally, it is not usually appropriate for anyone outside the scientific circle to do the cost-benefit equation on behalf of scientists – nonscientists simply don’t understand the issues as scientists do. This provides a clear recommendation for scientists to learn how to carry out sound ethical reviews of their own work themselves; and such a review has to be ongoing, not a one-off event at the beginning. It is arguably more effective to choose some other approach rather than the cost-benefit assessment (even though there is an ethical dimension in that approach) in order to avoid accusations of overcooking the benefits at the expense of the costs. There are some guidance materials available to assist scientists in ethically assessing their work (Sture 2010), and such guidance can easily be incorporated into daily work both during and after research is complete. Responsibility can no longer simply stop at the point of publication. Remember Galston, who said that “The only recourse is for a scientist to remain involved with it to the end.”

The precautionary principle is an approach that has found some favor, especially among policymakers and politicians. This is probably because it appears to be a fairly straightforward test of what not to do. It is arguably not a particularly robust approach at all, but its weaknesses make it popular in policy-making circles (those in power can be seen to be doing “something” even if it is not the “right thing”). This approach says that where a risk has been identified, but there is no scientific proof that harm will occur, then the responsibility to prove that no harm will occur rests with those taking the action that may cause the harm. Action should be taken to mitigate an unproven risk, in other words. Almost the entire global climate change policy response has been built on this relatively weak ethical code, but politically it is very attractive.

The precautionary principle makes much of the presence or lack of scientific consensus, but as is all too obvious, science is not as consensual as we would like to think. Two opposing “camps” can each claim proof that black is white based on their own work. For those outside the scientific community, with limited powers of evaluation of scientific rigor, who is to be believed? If the scientists currently working on H5N1 assure those challenging them that their work is perfectly safe and poses no risks to the public or the environment, should they be believed without question? What about the (even remote) possibility of a laboratory accident or some negligence on the part of a single bench technician (as postulated by Taylor in his spectrum of risks)? If laboratory mistakes never happen, why are there accident books? Are we absolutely certain that every staff member working in such contexts is an upstanding citizen committed to doing no harm? Is it acceptable or ethical to do personality profiling or background checks on scientists?

A further approach that can invoke ethical principles is the appeal to morals. This sounds good in theory but can quickly fall down because “morals” are notoriously hard to define and vary from person to person as much as from nation to nation. There can be little or no assumption that everyone agrees on what constitutes harm, for example. Is it possible even that, given the political and security climate in the world in the early twenty-first century, people can all agree on what is basically right and wrong? Has this yet been managed with the BTWC and CWC? States may sign up for something with one hand while still shaking the test tube with the other behind the scenes.

Talk of morals leads inevitably to religious perspectives. Religion already plays a large public role in science, with influence over research using embryonic stem cells, reproductive medicine, abortion, and end-of-life issues being reflected in many national legislations. While nonreligious people may not agree with these impositions, it is important to remember that most ethical principles (do no harm, consent, autonomy, benefit, and so on) are either derived from, or align with, religious ideals. It is arguably possible to engage scientists with bioethics through reference to religious ideals as long as religious ideals do not become the sole or explicit foundation for doing (or not doing) certain research. But not everyone agrees with this perspective.

The philosophical and ethical perspectives of teleology and deontology are also invoked by scientists in one way or another when trying to make ethical judgments. A deontological approach says that certain actions should never be taken – the BTWC/CWC outright ban on biochemical weapons is a deontological action. The problem with this is that while your enemy is busy making dual use weapons, you cannot return in kind as your principle forbids it; the threat of mutually assured biological or chemical destruction may be of some use, but is barred to you. Is this ethical price acceptable to the voting (or not-voting) public?

A teleological approach (often referred to as consequentialism) says that a “bad” or negative action may be taken if the outcomes are “good” or beneficial to someone somewhere. In this case, a dual use biological weapon could be devised and used because it is good for you in that it may kill your enemies. Its use may be mitigated by you agreeing not to engage in first use, but only in retaliation. The question must then be asked – if a person is to be killed by a retaliatory dual use weapon, is he somehow comforted by the thought that the missile whizzing towards him was ethically launched because it was not a first-use attack?

Ethical Issues In Dual Use: Looking Ahead

One way forward in support of a biosecurity or chemical security norm is that of the Responsible Conduct of Research approach. Under this banner, the publication On Being a Scientist (Institute of Medicine et al. 2009) uses the case of Galston and his fertilizer to highlight the dangers of dual use. While this introduction of dual use into the publication is admirable, the book stops with Galston’s quote about staying involved with it to the end. There is no attempt to discuss what can be done to prevent dual use occurring. Surely, this is an ethical failure in a publication that seeks to outline what makes a “good scientist”! Having said that, at least the Responsible Conduct model is actively promoting awareness of biosecurity risk, which is a major step forward in itself, making it probably one of the strongest approaches to biochemical security.

Work assessing existing levels of awareness of the risks of dual use (e.g., Manchini and Revill 2008) has shown that there is still an almost wholesale unawareness among scientists and science students as to their own responsibilities under the BTWC and the CWC, along with a lack of recognition that their own work can be misused in disastrous ways. The only way to tackle this is to facilitate a bioethical awareness, with a skill set or “toolkit” being taught, through which to apply this in the science community. While certain sections of the scientific community are already more than familiar, if not overloaded, with ethical concerns and restrictions, the growing biotechnology industry requires a greater awareness of the security risks and ethical limitations of its activities. Medical, environmental, food-based, and reproductive bioethics are now viewed as commonplace. But in biotechnology in general, it can be argued that a bioethics of dual use is simply not in place.

Conclusion

Biosafety and biosecurity are both based on containment. Both tap into existing knowledge of pathogenicity, infection, public health, and social responsibility. The gaps in biotechnology ethics education could be filled with bioethics education at all stages of a scientist’s career. The same applies to chemical security and the education of chemists. Ethics should be introduced at every level in ways that match the types of responsibilities and ethical decision-making confronting scientists at that point in their careers. Such ethics education implementation would then go some way to matching the top-down management of dual use risk that is in place through the BTWC and the CWC. Currently, the security risks from grass roots upwards are largely unrecognized and unaddressed.

Most governments, and most people, agree that the defense of the population is a key responsibility that should be the focus of significant attention. Unfortunately, the very advances that make human life easier and healthier are also potential sources of materials and know-how that can maim and kill, and, terrifyingly, even change human genetic makeup to afflict the next generation of children and then their children in turn.

While highlighting these risks, it is an unfortunate reality that in the search for ways to mitigate them, efforts can be hampered by questions that demand to know if the risks are real or imaginary. By their very nature, dual-use risks can take years to develop, can often be relatively easily hidden, and may therefore appear to be of little consequence until an event has taken place. Other apparently more pressing issues can appear to be more important. However, can science afford to ignore the warnings of Meselson, Galston, and others and not take further steps to tackle the dual-use risks that face the public in this uncertain world of terrorism and inter- and intrastate aggression?

By implementing a biochemical security norm based on informed ethical research practices, the world could be something of a safer place. In order to achieve this, the development and application of a bioethics of dual use, in the form of an informed, well-educated, and ethically aware science security norm, is needed. This can then be applied in tandem with biosafety, to form a principled, responsive biochemical risk management strategy from the grass roots up. This is arguably more important now than ever before.

Bibliography:

- Dando, M. (2006). Bioterror and biowarfare: A beginner’s guide. Oxford: Oneworld Publications.

- Galston, Arthur W. (1972). Science and Social Responsibility: A Case History. Annals of the New York Academy of Science, 196, 223.

- Institute of Medicine, National Academy of Sciences, & National Academy of Engineering. (2009). On being a scientist: A guide to responsible conduct in research (3rd ed.). Washington, DC: National Academies Press. http://www.nap.edu/catalog.php?record_id=1292. Accessed 15 Sept 2014.

- King, J. (2012). Birth defects caused by agent orange. In Embryo project encyclopedia. http://embryo.asu.edu/handle/10776/4202. Accessed 15 Sept 2014.

- Manchini, G., & Revill, J. (2008). Fostering the biosecurity norm: Biosecurity education for the next generation of life scientists. Bradford/Como: University of Bradford/Landau Network-Centro Volta (LNCV)/Bradford Disarmament Research Centre (BDRC). www.brad.ac.uk/bioethics/monographs. Accessed 15 Sept 2014.

- Mayor, A. (2009). Greek fire, poison arrows and scorpion bombs: Biological and chemical warfare in the ancient world. New York/London: Overlook Duckworth.

- Meselson, M. (2000). Averting the hostile exploitation of biotechnology. CBW Conventions Bulletin, 48, 16–19.

- National Institutes of Health. (2012). Press statement on the NSABB review of H5N1 research. http://www.nih.gov/news/health/dec2011/od-20.htm. Accessed 15 Sept 2014.

- National Research Council. (2004). Biotechnology research in an age of terrorism. Washington, DC: National Academies Press (Often referred to as the Fink Report). http://www.nap.edu/catalog.php?record_id=10827. Accessed 15 Sept 2014.

- National Research Council. (2006). Globalization, biosecurity and the future of the life sciences. Washington, DC: National Academies Press (Often referred to as the Lemon-Relman Report). http://www.nap.edu/catalog.php?record_id=11567. Accessed 15 Sept 2014.

- National Research Council. (2010). Challenges and opportunities for education about dual use issues in the life sciences. Washington, DC: National Academies Press. http://www.nap.edu/catalog.php?record_id=12958. Accessed 15 Sept 2014.

- Rappert, B. (Ed.). (2010). Education and ethics in the life sciences: Strengthening the prohibition of biological weapons. Canberra: ANU E-Press. http://press.anu.edu.au/titles/centre-for-applied-philosophy-and-publicethics-cappe/education-and-ethics-in-the-life-sciences/. Accessed 15 Sept 2014.

- Sture, J. (2010). Dual-use awareness and applied research ethics: A brief introduction to a social responsibility perspective for scientists. Research report for the Wellcome Trust project on ‘building a sustainable capacity in dual-use bioethics’. www.brad.ac.uk/bioethics/monographs. Accessed 15 Sept 2014.

- Sture, J. (2013). Moral development and ethical decision-making. In B. Rappert & M. Selgelid (Eds.), On the dual uses of science and ethics: Principles, practices, and prospects (pp. 97–120). Canberra: ANU E-Press. http://epress.anu.edu.au. Accessed 15 Sept 2014.

- Taylor, T. (2006). Safeguarding advances in the life sciences. EMBO Reports (European Molecular Biology Organization), 7(Special Issue).

- US Department of Veteran Affairs. (2013). Veterans’ diseases associated with agent orange. http://www.publichealth.va.gov/PUBLICHEALTH/exposures/agentorange/conditions/index.asp. Accessed 15 Sept 2014.

- World Health Organisation. (1970). Health aspects of chemical and biological weapons. Report of a WHO group of consultants. Geneva: WHO. http://www.who.int/csr/delibepidemics/biochem1stenglish/en. Accessed 15 Sept 2014.

- Yong, E. (2012). Influenza: Five questions on H5N1. Nature, 486, 456–458. http://www.nature.com/news/influenza-five-questions-on-h5n1-1.10874. Accessed 15 Sept 2014.

- Medicine, Conflict and Survival, 28(1). (2012). Special Issue: Preventing the hostile use of the life sciences and biotechnologies: Fostering a culture of biosecurity and dual use awareness. http://www.tandfonline.com/toc/fmcs20/28/1. Accessed 15 Sept 2014.

- Miller, S., & Selgelid, M. J. (2007). Ethical and philosophical consideration of the dual-use dilemma in the biological sciences. Science and Engineering Ethics, 13, 523–580. http://www3.nd.edu/~cpence/eewt/Miller2007.pdf. Accessed 15 Sept 2014.

- National Research Council (US). (2007). Science and security in a post 9/11 world: A report based on regional discussions between the science and security communities. Washington, DC: National Academies Press (US)/Committee on a New Government-University Partnership for Science and Security. http://nap.edu/catalog.php?record_id=12013. Accessed 15 Sept 2014.

- The website of the International Red Cross contains a library of relevant documents such as international conventions, declarations and other items of interest relating to dual use issues. https://www.icrc.org/en/war-and-law. Accessed 15 Sept 2014.
