
This research paper describes the issues associated with conducting multisite trials in criminal justice settings, including how to determine the interventions to be tested, the selection of control groups, and the potential threats to internal validity of the trial, and gives two real-world examples of trials conducted and how they were managed and analyzed.

Introduction

Researchers conducting an experiment must decide on the type of design and the number of sites to include. The latter decision is one that receives little attention. As previously argued by Weisburd and Taxman (2000), multicenter trials have many advantages, including the opportunity to test a new protocol or innovation within various settings. Single-site trials provide a starting point to test out the feasibility of a new innovation, but multisite trials have a clear advantage in testing the innovation under varied conditions. The multisite trial may offer the potential to accumulate knowledge more quickly by using each site as a laboratory for assessing the characteristics or factors that affect the question of “value-added” or improved outcomes. It creates the opportunity to simultaneously test the innovation as well as learn from the sites about the parameters that affect outcomes. Multisite trials provide insights about areas where “drift” may occur, whether it is in the innovation, the management of the study, external factors that affect the innovation, or the inability of the environment to align to the innovation.

This research paper provides insight into the complexity of a multisite trial and how different design, site preparation, and research management characteristics can affect the integrity of the experiment. Case studies of two recently completed multisite trials that involve complex innovations or innovations that involve more than one operating agency are used. In these case studies, the various types of threats to internal validity of the trial are used as a conceptual framework to illustrate the decisions that researchers must make in a study.

The Multisite Trial

While randomized control trials are the “gold standard” of research, they are often challenged by design and implementation issues. Adding diverse sites complicates matters, particularly when the goal is to develop a standardized protocol across sites and the study sites have a wide range of expertise and resources. These issues can be even more pronounced in criminal justice settings, where design considerations are often impacted by the structure and administration of local, state, and federal agencies. While there has been a push for more randomized trials in the criminal justice field (Weisburd 2003), the number of randomized studies remains relatively low compared to the fields of medicine, substance abuse, education, and other similar disciplines.

The first step of a multisite trial is to develop a study protocol detailing the intervention for the study sites and research team. The protocol should have (1) the rationale for the study, (2) a description of the intervention, (3) a list of the procedures to implement the study, (4) a copy of the instruments and key measures, and (5) human subject procedures including consent forms and certificates of confidentiality. This protocol is needed to guide implementation of the study in real-world settings. The protocol should consider the unique characteristics of the sites involved, particularly if these characteristics are likely to impact findings or influence the fidelity of the intervention. Up-front analyses of these issues, through pipeline or feasibility analyses or piloting of procedures, will increase the likelihood that the trial is uniformly conducted across study sites. The goal is to mitigate differences, which reduces the need to control for these issues during the analytic stage. The issues to be addressed in the protocol are:

Rationale for the Study. Every trial has a purpose that should be specified in study aims and hypotheses. The rationale provides a guidebook as to why the trial is necessary and what will be learned from the trial.

Theoretical Foundation. Trials are about innovations, either the introduction of a new idea or a change in existing procedure or process. The innovation should be guided by theory which explains why the new procedure or process is likely to result in improvements in the desired outcomes. It is important to ensure that each component of the procedure is theory-based to provide an explanation of why the component is needed as part of the innovation.

Defining the Study Population. Based on the study aims, the criteria for inclusion and exclusion should be specified to ensure that the innovation reaches the appropriate target population. Generally inclusion criteria relate to traits of the individual or setting. But in real-world criminal justice settings, other selection forces may be present that are a function of the process or environment. Researchers may not have access to the full base population, which can potentially bias results. Wardens or correctional officers may decide that offenders under certain security restrictions are ineligible for study participation. Selection forces can vary between study sites because of factors that are often not evident. Also, sites may operationalize selection criteria differently. A recent article on multisite trials in health services research recommended that the lead center develops selection criteria but allows each site to operationally define these criteria given the local environment (Weinberger et al. 2001).

Defining the Intervention: The innovation that is being tested should, by definition, be different than traditional practice. As discussed above, the intervention needs to be outlined in detail, including theoretical rationale, dosage and duration, procedures essential to implementation, and core components that will be tracked using fidelity measures (Bond et al. 2000). Most interventions consist of different components that are combined to produce the innovation. It is important to specify who will implement the intervention and how they will become proficient in the skills needed to deliver the intervention. Clear delineation of how the innovation will be integrated into the existing infrastructure of an organization and what potential issues are expected to occur and how these will be handled is also needed.

Defining the Control Group(s) or Practice as Usual: The main purpose of the control group is to serve as a contrast to the innovation. The control group represents practice as usual. This group is expected to have the same distribution of other factors that could potentially affect outcomes as the treatment group, which avoids the possibility of confounding. Randomization gives each eligible subject an equal chance of being assigned to either group in a two-armed design. Blinding, in conjunction with randomization, ensures equal treatment of study groups. In general, the services received by the control group should follow the principle of equipoise, meaning that there should be uncertainty about whether the control or the experimental condition is a better option (Djulbegovic et al. 2003). It is important for the hypotheses to be concerned about how alternative strategies (including treatment as usual) impact outcomes.
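The equal-chance assignment described above can be illustrated with a short sketch. It assumes a permuted-block scheme stratified by site, which is one common way to keep the two arms balanced within each site; the source does not state which randomization scheme a given trial should use, and the site names, sample sizes, block size, and seed below are hypothetical.

```python
import random


def permuted_block_schedule(n_subjects, arms=("treatment", "control"),
                            block_size=4, seed=0):
    """Return n_subjects arm labels, balanced within each shuffled block."""
    if block_size % len(arms) != 0:
        raise ValueError("block_size must be a multiple of the number of arms")
    rng = random.Random(seed)  # seeded so the schedule can be audited later
    schedule = []
    while len(schedule) < n_subjects:
        block = list(arms) * (block_size // len(arms))
        rng.shuffle(block)
        schedule.extend(block)
    return schedule[:n_subjects]


def schedules_by_site(site_sizes, seed=0, **kwargs):
    """Build an independent schedule per site (stratification by site)."""
    return {
        site: permuted_block_schedule(n, seed=seed + i, **kwargs)
        for i, (site, n) in enumerate(sorted(site_sizes.items()))
    }


# Hypothetical sites and enrollment targets.
site_sizes = {"site_A": 8, "site_B": 12}
schedules = schedules_by_site(site_sizes, seed=42)
```

Generating the schedule in advance, with a recorded seed, is a design choice that lets the lead center verify afterward that each site followed the assigned sequence.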

Measurement and Observations: Another important component of a study is how effects will be measured, that is, what instruments will be used to measure outcomes and how and when these instruments will be used. Dennis and colleagues (2000) outline four types of variables to be collected: design variables (sites, study condition, weights, etc.), other covariates (demographics, diagnoses, etc.), pre- and post-randomization intervention exposure, and pre- and post-dependent variables. Most experiments have multiple waves of data collection. Outcome measurements are subject to a number of potential biases, including recall bias (self-report), sensitivity and specificity issues (biomedical tests), and rater error (file reviews). The types of data to be collected should be based on the hypotheses to be tested and the availability and cost of data. Multiple sources of measurement are recommended for the main outcomes, such as self-report and urine testing for drug outcomes.

Quantitative Analysis: The analysis of multisite trial data is dependent on the types of measures collected and the format of the variables. While the trial is ongoing, interim analyses may be conducted, mainly for data monitoring purposes. Some trials in the justice system require oversight from a data and safety monitoring board (DSMB). A DSMB reports on a regular basis to determine if subject flow and follow-up are adequate and if treatment effects are evident in the data. These interim data runs often utilize t-tests, ANOVAs, or effect sizes (Cohen 1988). For more formal analyses, multivariate models are used to account for the repeated measures and multisite designs. With repeated measures data, hierarchical models can be used, which nest observations within person over time (Raudenbush 2001). Different types of these models are available including latent growth models (LGM), which capture individual differences in change trajectories over time (Meredith and Tisak 1990).
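As an illustration of the effect sizes mentioned for interim data runs, the sketch below computes Cohen's d with a pooled standard deviation for two arms. The outcome values are hypothetical (for example, days of drug use in a reporting window), and this is only one of several effect-size definitions a monitoring board might request.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(treatment, control):
    """Standardized mean difference using the pooled sample variance."""
    n1, n2 = len(treatment), len(control)
    pooled_var = ((n1 - 1) * variance(treatment)
                  + (n2 - 1) * variance(control)) / (n1 + n2 - 2)
    return (mean(treatment) - mean(control)) / sqrt(pooled_var)


# Hypothetical interim outcome data for the two arms.
treatment_outcomes = [2, 4, 6, 8]
control_outcomes = [4, 6, 8, 10]
d = cohens_d(treatment_outcomes, control_outcomes)
```

A negative value here means the treatment arm has the lower mean on the outcome; whether that is favorable depends on how the outcome is coded.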

A multisite trial allows for the ability to pool the overall and specific impacts of treatment within separate sites in the context of a single statistical model. The sites in a multicenter trial can be seen in this context as building blocks that can be combined in different ways by the researchers. The randomization procedures allow each center to be analyzed as a separate experiment as well as combined into an overall experimental evaluation where the researcher is able to identify direct and interaction effects in a statistically powerful experimental context.
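The "building block" idea can be sketched as follows: each site is first treated as its own experiment (a within-site difference in arm means), and the site-level effects are then combined. The records are hypothetical, and the unweighted average is a simplifying assumption; a real analysis would estimate these quantities within a single statistical model, as described in the text.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical subject-level records: (site, arm, outcome).
records = [
    ("A", "treatment", 3.0), ("A", "treatment", 5.0),
    ("A", "control", 6.0), ("A", "control", 8.0),
    ("B", "treatment", 4.0), ("B", "treatment", 4.0),
    ("B", "control", 5.0), ("B", "control", 5.0),
]


def cell_means(rows):
    """Mean outcome for every site-by-arm cell."""
    cells = defaultdict(list)
    for site, arm, outcome in rows:
        cells[(site, arm)].append(outcome)
    return {key: mean(values) for key, values in cells.items()}


def site_effects(rows):
    """Treatment-minus-control difference in means, one per site."""
    means = cell_means(rows)
    sites = sorted({site for site, _ in means})
    return {s: means[(s, "treatment")] - means[(s, "control")] for s in sites}


effects = site_effects(records)          # each site as a separate experiment
pooled = mean(list(effects.values()))    # simple unweighted combination
```

When the site-level effects differ noticeably, as they do in this toy data, that spread is what a site-by-treatment interaction term is meant to capture.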

A multisite study is not a multicenter study where there is a random sample of sites themselves. Without a random sample of sites, a number of restrictions must be placed on the generalizations that can be made and the type of statistical models that can be estimated. Site must be defined as a fixed factor in the statistical models employed, because of the possible variations in the context of the sites. It is important to consider that the generalizability of these effects is limited and cannot be applied to the larger population.

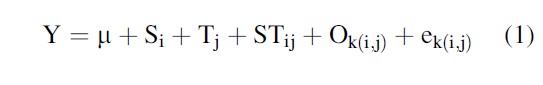

Weisburd and Taxman (2000) recommend a statistical process for using mixed-effects models in analyzing multisite studies. A model for this example is presented in Eq. 1 below.

Tj is the treatment effect, averaged across sites (for the mixed-effects model, across the larger population of centers).

Si is the average impact of a site (or center) on outcomes, averaged over treatments.

STij is the interaction effect between treatment and site, once the average impacts of site variation and treatment variation have been taken into account.

Ok(i,j) is nested within a site by treatment combination.
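Read together, the four term definitions suggest an additive model of the following form. This is a reconstruction from the definitions only, not a transcription of the published equation: the outcome symbol Y and the grand mean μ are notation assumed here, and O is read as the subject-level term for subject k.

```latex
Y_{ijk} = \mu + S_i + T_j + ST_{ij} + O_{k(i,j)}
```

Here i indexes sites, j indexes treatment conditions, and k indexes subjects within a site-by-treatment combination.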

Tools To Monitor Implementation: CONSORT Flow Charts In CJ Settings

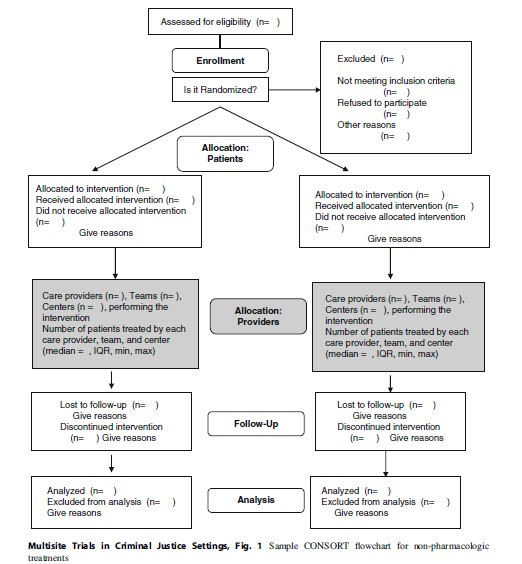

Understanding the flow of subjects through the different phases of an experiment is important to manage the quality and integrity of the experiment. In multisite trials, subject flow may be influenced by facets of the particular criminal justice system, the behavior of study subjects, and/or factors that are difficult to identify. In 2001, the Consolidated Standards of Reporting Trials (CONSORT) statement was issued as a way to improve the reporting of information from experiments in healthcare (Moher et al. 2001), and it has now become a standard that experimenters strive for in social policy areas like criminal justice. The CONSORT flow chart illustrates how to report subjects through each trial phase; it was amended in 2008 to specifically address issues related to non-pharmacologic treatments (such as different types of therapy or different modes of sanctions). A copy of the amended chart is in Fig. 1 (Boutron et al. 2008). The CONSORT chart provides documentation for each phase of the experiment and provides sufficient information to assess the quality of the implementation of the experiment. The CONSORT chart is a standardized reporting format that clarifies the steps in the study and the attention to recruitment and retention issues.

This section describes the CONSORT flow chart for non-pharmacologic treatments (Fig. 1) as it relates to implementing trials in criminal justice settings. The unique challenges presented by these settings not only include the target population as potentially vulnerable to coercion and exploitation but also reflect the difficulties of work in settings that are, by their nature, authoritarian and often not amenable to the rigors of randomized trials.

The first section concerns eligibility. Most trials involve decisions about specific inclusion and exclusion criteria that should be in place for the trial so that the results can be generalizable to the appropriate population. The identification of people that fit the desired criteria may involve a multistep process. For example, a specific protocol may require the exclusion of sex offenders. This will require the research project to define the term “sex offenders” and then develop specific criteria to meet this term. In practice, researchers will generally need to conduct a prior chart review of offenders to make a determination of which offenders might meet the eligibility criteria. This screening often occurs before the consent process and baseline research interview. Other types of eligibility criteria are offense or location specifics, age or gender, language, or other characteristics. In general, screening criteria should be geared toward obtaining a study population that would be appropriate for the intervention. The questions for the CONSORT chart are how many subjects meet the criteria and what process is used to identify the target population. This defines the intent to treat group.

Prior to randomization, subjects may be dropped from the study because they fail to meet screening criteria or refuse to participate in the study. Once they are randomized, they may either receive their allocated intervention or they may not receive it for a variety of reasons including not meeting the eligibility criteria when they are interviewed, dropping out of the study, transferring to another CJ facility, or having a change in criminal justice status. All of these are examples of post-randomization factors that may affect participation in a study. The randomization process is such that once a subject is assigned to a condition, they are eligible for follow-up even if they only receive a partial dosage of the intervention or they did not complete the period of time devoted to the intervention.

The provider allocation section of the CONSORT chart was added in 2008 and characterizes the settings where the treatment(s) takes place. The researcher needs to identify all of the potential settings that might be involved in the experiment. These could be treatment facilities, jails, prisons, probation offices, courts, or other areas. Included are the number of providers, teams, and/or centers and the number of subjects in each area. Each organization will have different characteristics, and these require documentation.

A key issue is the follow-up rates for subjects in each arm of the study. The documentation should include the number that has been followed up and the reasons for missed follow-ups. Reasons for attrition from a study are critical to ensure sufficient power for the analyses. It is important to document follow-up rates at each wave, along with reasons for exclusions of any cases.
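A minimal sketch of the follow-up documentation described above is given below. It tallies, for each arm at a single wave, how many subjects were randomized, how many were followed up, and why the others were missed. The record layout, arm labels, and reasons are hypothetical; a real trial would draw these from its tracking database and repeat the tally for every wave.

```python
from collections import Counter

# Hypothetical records: (arm, status at this wave, reason if missed).
subjects = [
    ("treatment", "followed", None),
    ("treatment", "followed", None),
    ("treatment", "missed", "reincarcerated"),
    ("treatment", "missed", "could not locate"),
    ("control", "followed", None),
    ("control", "followed", None),
    ("control", "followed", None),
    ("control", "missed", "refused"),
]


def follow_up_summary(rows):
    """Per-arm counts, follow-up rate, and reasons for missed follow-ups."""
    summary = {}
    for arm in sorted({arm for arm, _, _ in rows}):
        arm_rows = [r for r in rows if r[0] == arm]
        followed = sum(1 for r in arm_rows if r[1] == "followed")
        reasons = Counter(r[2] for r in arm_rows if r[1] == "missed")
        summary[arm] = {
            "randomized": len(arm_rows),
            "followed": followed,
            "rate": followed / len(arm_rows),
            "missed_reasons": dict(reasons),
        }
    return summary


summary = follow_up_summary(subjects)
```

Comparing the `rate` values across arms is a quick check for the differential attrition that the CONSORT chart is meant to expose.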

The last component of the CONSORT documentation is the results from the final outcome variables that relate to the study aims and the timing of all variables. The researcher is also required to document any assumptions that were made about the data.

Some criticisms have been made of the lack of detail present in the CONSORT chart, specifically the lack of information on implementation details. As our case studies demonstrate, the CONSORT chart does not capture a number of issues that can present threats to the internal validity of a multisite trial. Other scales and checklists have been developed to measure the quality of randomized trials, including the Balas scale for health services research (Balas et al. 1995) and the Downs scale for public health research (Downs and Black 1998). A review of these scales found little widespread use of them and also found that most had not been tested for construct validity or internal consistency (Olivo et al. 2008).

Case Studies Of RCTs In Criminal Justice Settings

The following section presents case studies of two RCTs that were implemented in settings covered in the Criminal Justice Drug Abuse Treatment Studies (CJDATS), a ten-center research cooperative sponsored by the National Institute on Drug Abuse from 2002 to 2008. Part of the objective of CJDATS was to use rigorous scientific methods to conduct studies in criminal justice settings. The purpose of the studies was to conduct trials at the point where offenders transition from incarceration to the community, referred to as the structured release or reentry process. The studies involved numerous agencies including prisons, probation/parole departments, prison or community treatment providers, and nonprofit organizations.

The case studies illustrate the issues regarding study design and management. The conceptual framework for this analysis is the threats to internal validity that are specified in many textbooks on conducting social experiments (Campbell and Stanley 1966). By examining these issues, as well as looking at how the investigators managed the study, researchers can learn about the issues inherent in implementing multisite trials.

Threats To Internal Validity

- Confounding: The degree to which a third variable, which is related to the manipulated variable, is detected. The presence of spurious relations might result in a rival hypothesis to the original causal hypothesis that the researcher may develop.

- Selection (bias): Both researchers and study participants bring to the experiment many different characteristics. Some of these characteristics may not have been included in the eligibility criteria but may influence who participates in an experiment. These factors may influence the observed outcomes. The subjects in both groups may not be alike in regard to independent variables.

- History: Study participants may be influenced by events outside of the experiment either at the onset of the study or between measurement periods. These events may influence attitudes and behaviors in such a way that it might be detectable.

- Maturation: During the experiment, subjects may change, and this change may affect how the study participant reacts to the dependent variable.

- Repeated testing: Conducting multiple measures over a period of time may result in bias on the part of the study participants. These impacts may occur from the failure to recall correct answers or from the prospect of being tested.

- Instrument change: An instrument used during the experimental period could be altered.

- Mortality/differential attrition: Study dropouts may be the result of the person, the experiment, or the situation. An understanding of reasons for the attrition and the differential rates of attrition can address the issue of whether the attrition is natural or due to some other factors.

- Diffusion: The experimental category should be different than traditional practice and movement from the experimental to control group or vice versa may pollute the assigned conditions.

- Compensatory rivalry/resentful demoralization: The control group behavior could be tainted by the result of being in the study.

- Experimenter bias: The researcher or research team may inadvertently affect the experiment by their actions.

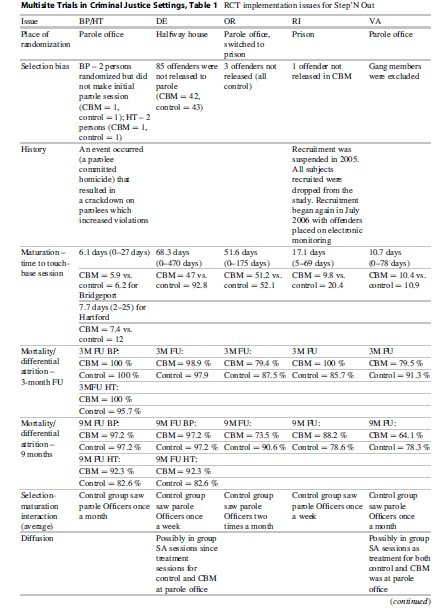

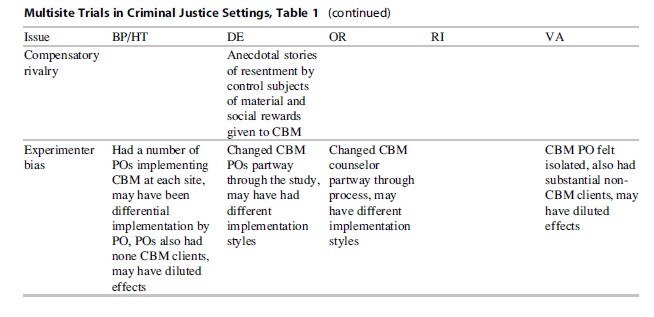

Step’N Out: An RCT At Six Parole Offices In Five States

Step’N Out tested an integrated system of establishing target behaviors for offenders on parole, known as collaborative behavioral management (CBM). CBM requires the parole officers and treatment counselors to work together with offenders over a 12-week period, using a system of graduated sanctions and incentives. A more detailed description of the study can be found elsewhere (Friedmann et al. 2008).

Theoretical Intervention. CBM has three major components. First, it articulates the roles of parole and treatment staff, as well as those of the offender, and makes explicit the expectations of each party. The clarification of expectations is covered in role induction theory, where the emphasis is on clients understanding the roles and responsibility of different functions of the process. Second, a behavioral contract defines the consequences if offenders fail to meet those expectations. The behavioral contract specifies concrete target behaviors in which the offender is expected to engage on a weekly basis; these target behaviors include requirements of supervision and formal addiction treatment and involvement in targeted behaviors that compete with drug use (e.g., getting a job, enhancing a nondrug social network). Third, it regularly monitors adherence to the behavioral contract and employs both reinforcers and sanctions to shape behavior. The motto is “Catching People Doing Things Right,” which is to say, the intervention creates the conditions to notice and reward offenders for achieving incremental pro-social steps as part of normal supervision (covered in the research literature on contingency management).

The CBM contract specifies expectations in terms of concrete target behaviors that the offender must meet before the next weekly session. These target behaviors are managed using a computer program which prints out a contract with copies for all three parties to sign and keep for their records. The CBM contract is monitored weekly to expedite identification and reinforcement of compliance and sanction of noncompliance, and then the contract is renegotiated and printed for the following week. Compliance with the contract earns points and, when preestablished milestones are reached, material and social rewards.

Control Condition. Offenders were supervised by the assigned parole officer in the normal manner that was used by that jurisdiction (“treatment as usual”). As shown in Table 1, each site had different standards for parole visits and drug testing.

Study Sites. Five CJDATS centers participated in Step’N Out – the University of Delaware (Wilmington parole office), Brown University/Lifespan Hospital (Providence parole office), UCLA (Portland, Oregon, parole office), Connecticut (Bridgeport and Hartford parole offices), and George Mason University (Richmond, Virginia, parole office). All sites except Connecticut had one parole officer and one treatment counselor assigned to the CBM condition at their sites. In Connecticut, two or three officers were trained in CBM at each site, with one counselor also trained at each office.

Site Preparation. The initial two-and-a-half-day training for the Step’N Out teams occurred in December 2004. This training brought together parole officer and addiction counselor teams and their supervisors. After an overview of the intervention, most of the training focused on having each team practice skills in case-based role-plays with reinforcement and corrective feedback. A checklist of the key elements for fidelity to the protocol guided the role-plays and feedback. The teams were encouraged to negotiate roles with regard to initiating the role induction discussion, establishing goals, and setting target behaviors, but the protocol recommends that the parole officer (PO) take primary responsibility for rewards and sanctioning and that the counselor oversee problem-solving.

Additional on-site trainings were also done due to the lag time between the initial training and the time that sites began recruitment, the addition of new sites, and staff turnover. A 2-day booster training session in September 2006 focused on enhancing both the fidelity and finesse with which teams delivered the intervention.

POs were also asked to tape selected sessions. Feedback on the sessions was to be given on a monthly basis to build the skills of the POs in using the contingency management procedure.

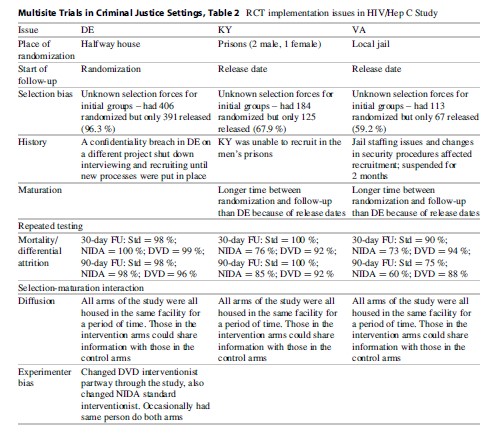

Study Implementation. Table 2 outlines the issues encountered in implementing the study across the five sites. One of the first challenges was the point of randomization. Because those who were randomized to the CBM condition had to be assigned to a specific officer (the one who had received the CBM training), randomization had to occur at a point where PO assignment had not yet happened. This point varied among sites, with some assigning POs prior to an offender’s release from prison, while others assigned the officer once the offender appeared at the parole office. While the ideal situation was to randomize at the parole office where the intervention was occurring, this was not possible in Delaware and Rhode Island. At those sites, the agency practice was to assign the officer prior to an offender’s release, so screening and randomization were done while the offender was still incarcerated; this allowed the offender who was randomized to CBM to be assigned to the CBM officer. In Oregon, randomization began at the parole office, but recruitment was very slow in the initial months of the study and it was determined that the research staff had a better working relationship at the prisons; thus, recruitment was moved to the prison after 6 months. In the end, half of the sites were randomizing in prison and half were randomizing at the parole office.

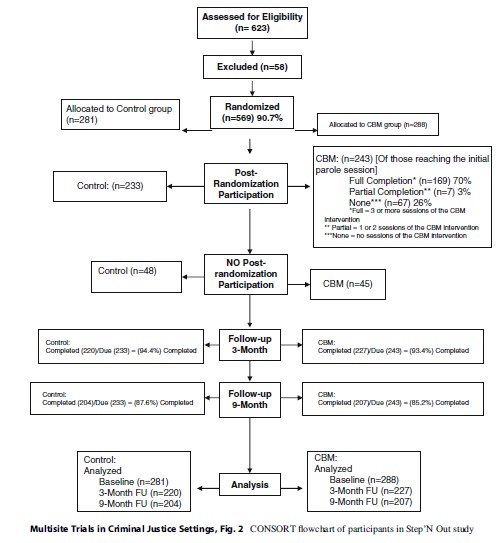

A consequence of the place of assignment is that not all randomly assigned participants were exposed to the intervention. A substantial portion of those who were randomized in prison never made it to the parole office. In Rhode Island, this was due to the fact that many offenders “flattened” in prison, or served out their parole sentences in prison, because of the unavailability of space in halfway houses where offenders were assigned upon release. In Delaware, many offenders committed violations in the halfway house that resulted in them being returned to prison before they could begin their parole sentence. This group of “post-randomization dropouts” was problematic, because they were technically part of the study population to be followed, yet they were not exposed to the intervention or any study activities. Many had no time in the community post-randomization. Rhode Island dropped their initial cases and changed their recruiting practices so that all of their cases were to be placed on electronic monitoring after release. Delaware also changed their recruiting to make their screening closer to the initial parole session. These measures drastically reduced post-randomization dropouts. The final CONSORT chart for Step’N Out is given in Fig. 2 (note that when the study was done, the revised CONSORT for non-pharmacologic interventions had not yet been published). There were a total of 93 post-randomization dropouts, with the majority (n = 85) from the Delaware site.

The “place of randomization” turned out to be a significant confounding variable in statistical analyses. Since the sites had different places of randomization (i.e., prior to release from prison, at the parole office, etc.), we were able to disentangle the impact of place of randomization on the study outcomes. As shown in Table 1, other selection forces also occurred during the study at different sites. In Virginia, gang members were never sent to the research interviewer for screening because a special program for gang members was being conducted with an assigned PO.

Another important site difference in implementation was the time it took for a person to begin the intervention after randomization. In some sites, those in the CBM group were able to begin parole services sooner than those in the control group, perhaps due to the availability of a dedicated PO for the CBM intervention who did not have the same large caseloads as other officers. In both Delaware and Rhode Island, it took about half the time for those in CBM to begin parole services as compared to those in the control group. This affects the outcomes which may be a function of the timing rather than the superiority of the CBM intervention attributes (MacKenzie et al. 1999).

The follow-up clock for Step’N Out began with the date of the first parole session (the “touch-base” session). Because the time to the touch-base session was longer for some subjects than for others, the time to the follow-up interviews also varied. In some cases, the 3-month follow-up occurred about 3 months after randomization (when randomization occurred close to the first parole session), but in other cases, there was over a year of time between randomization and the first parole session resulting in over 15 months between the baseline and the 3-month follow-up. While the follow-up was capturing the first 3 months on parole, there was a wide variation in how much time had elapsed since randomization for each subject. It was also complicated since some sites (Delaware) tended to have different timing between follow-up periods.
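The effect of starting the follow-up clock at the touch-base session can be made concrete with a small date calculation. The sketch assumes a 90-day window for the "3-month" follow-up, and both sets of dates are hypothetical, chosen only to mirror the two situations described above (randomization close to the first parole session versus more than a year before it).

```python
from datetime import date, timedelta

FOLLOW_UP_DAYS = 90  # assumed length of the "3-month" window


def follow_up_timing(randomized_on, touch_base_on, window_days=FOLLOW_UP_DAYS):
    """Due date of the follow-up and days elapsed since randomization."""
    due = touch_base_on + timedelta(days=window_days)
    return {
        "due": due,
        "days_since_randomization": (due - randomized_on).days,
    }


# Hypothetical subject randomized about a week before the first parole session.
near = follow_up_timing(date(2005, 3, 1), date(2005, 3, 8))

# Hypothetical subject randomized in prison over a year before that session.
far = follow_up_timing(date(2005, 3, 1), date(2006, 3, 15))
```

Both subjects are at their 3-month follow-up, yet one is 97 days past randomization and the other 469 days (over 15 months), which is the kind of gap that complicates comparisons across sites.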

An attrition-related issue involved missed follow-ups. The Delaware center, which had the largest number of clients in Step’N Out, had staffing issues during the project and missed the 3-month follow-up window for a number of subjects. Because the main outcomes relied on data from the 3-month interview, provisions were made to obtain these data at a later date. At the 9-month interview, a full timeline follow back was completed for these subjects which included all time back to the initial parole session. The chances for historical and maturation issues were increased by this procedure, especially with the different time frames for data collection across the various sites. This strategy allowed for the study to obtain needed outcome data, but it also created potential recall issues, where subjects were asked to self-report drug use, arrest, crime, and living situation data on a daily basis up to 18 months after it occurred. The varying lengths of the follow-up period may also have affected the integrity of the data.

An issue that was inherent to the specific criminal justice systems in each state was the status of the control group. Because this group received parole and treatment services “as usual” in each state, the services received by the control group were not uniform across all sites, as shown in Table 1. In some sites, parole officers met with subjects once a month; in others, once a week. These differences were due to standard conditions of parole, but other variations could occur because individual offenders had different conditions which required different schedules. The same issues applied to substance abuse treatment. While the screening instrument used to assess eligibility ensured that those in the study had substance abuse dependency issues, it was not required that members of the control group receive treatment, since this decision depended upon the local parole systems. Not all parole officers recommended their clients for treatment. Thus, variations occurred in the conditions under which the control group was supervised both within a site and across sites.

The HIV/Hepatitis C (Hep C) Study: A Three-Armed RCT At Three Centers

This RCT was designed to test the efficacy of a criminal justice-based brief HIV/HCV intervention administered during the reentry period. Rates of both HIV and hepatitis C are disproportionately high for those involved in the criminal justice system, and the reentry period has been found to be a particularly risky time for offenders. This study was a three-group randomized design, with participants assigned to one of the following: (1) current practice, a group of transitional releasees who saw an HIV awareness video shown as part of group sessions in the facilities in the prerelease process; (2) the NIDA Standard Version 3 HIV/Hep C prevention intervention delivered by a health educator via cue cards in a one-on-one didactic setting; and (3) the CJDATS Targeted, a near-peer facilitated intervention that used an interactive DVD format with gender/race congruent testimonials, also in a one-on-one setting. Participants in all three groups were offered HIV and HCV testing. The full study design has been described elsewhere (Inciardi et al. 2007). The study was conducted by three CJDATS centers: the University of Delaware (lead center), the University of Kentucky, and George Mason University. The main purpose of the study was to test the interactive DVD method to determine if those who were exposed to a gender and culturally specific message had greater reductions in risky behaviors than those who received the NIDA standard and current practice arms of the study. Follow-ups for this study were done at 30 and 90 days after either randomization or the date of release from prison.

Theoretically Driven Intervention. Based on focus groups with offenders, the study team developed a DVD that addressed issues related to reducing risky behaviors that involve the transmission of HIV/Hep C. The messages were then developed to be consistent with the gender and race (i.e., Caucasian, African American) of the offender. The theory was that messages delivered in a gender- and culturally specific manner would result in greater compliance. The DVD arm of the study was administered by a near-peer, usually a former addict and/or someone with HIV or HCV. There were four separate DVDs, based on race/gender combinations (black male, black female, white male, white female), and they included testimonials from former offenders and persons infected with HIV and HCV discussing their situations.

Control Conditions. In this study, two treatment-as-usual conditions were used: (1) traditional practice, where a group of offenders was shown an HIV awareness video as part of group sessions in the facilities in the prerelease process, and (2) the NIDA Standard Version 3 HIV/Hep C prevention intervention delivered by a health educator via cue cards in a one-on-one didactic setting (National Institute on Drug Abuse 2000). These represented two different conditions that reflected the “variety of practices” available in the field.

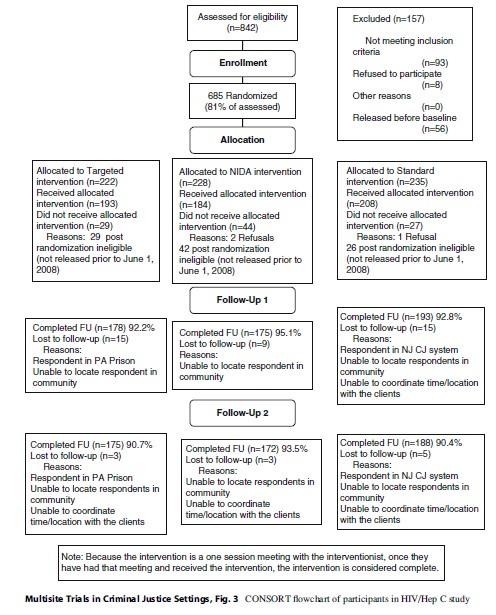

Study Recruitment. Potential participants were shown a video on HIV prevention in a group setting while still incarcerated (see section on setting by site) and were given information about the study and asked if they would like to participate. If they said yes, they were then screened individually to determine eligibility, and if eligible, they went through the informed consent process and were randomized into one of the three arms of the study. Testing for HIV and HCV was done at baseline and was voluntary.

Implementation. Table 2 presents some of the implementation issues of this study. Most of the challenges resulted from the varied settings where the intervention took place. For Delaware, the study was implemented in a halfway house facility, a place which ensured an adequate flow of, and continuous access to, participants. This site selection was convenient but in many ways altered the original intent of the study, as the offenders at this facility were already at risk in the community: they resided in the halfway house but could still spend time in the community. In Kentucky, the study was implemented at a men’s prison and a women’s prison, while in Virginia it was implemented at a local jail. Because the point of the follow-up period was to obtain information on risky behaviors in the community, the start of the follow-up clock differed by site. In Delaware, because subjects were already at risk at the time of randomization, the follow-up clock started at the randomization date. In both Kentucky and Virginia, the follow-up clock did not start until the subject was released from the institution. This situation created a number of problems, including the fact that the type of site (prison, jail, halfway house) was completely nested within state and that the time at risk was not comparable among all subjects. Those in Delaware may have had some time at risk prior to being enrolled in the study. While baseline behavior data were gathered for the period prior to the last incarceration, the follow-up data collected may not reflect the same period of initial risk for those in Delaware as for those just released from incarceration in Kentucky and Virginia.
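The site-specific follow-up clock described above can be written out as a short sketch. The Python below is illustrative only and is not taken from the study's data system: the function name, the site labels, and the example dates are hypothetical. The rule it encodes (clock starts at randomization in Delaware and at release in Kentucky and Virginia, with follow-ups at 30 and 90 days) follows the description in the text.

```python
from datetime import date, timedelta
from typing import List, Optional

# Sites where participants were already at risk in the community at
# randomization (per the text, the Delaware halfway house).
# The string labels are illustrative assumptions.
CLOCK_STARTS_AT_RANDOMIZATION = {"Delaware"}

# Follow-up points in days after the clock starts (30 and 90 days per the text).
FOLLOW_UP_DAYS = (30, 90)


def follow_up_due_dates(
    site: str,
    randomized_on: date,
    released_on: Optional[date] = None,
) -> Optional[List[date]]:
    """Return the follow-up due dates for one participant.

    Returns None when the clock never starts, i.e. the participant is at a
    release-based site and has no release date.
    """
    if site in CLOCK_STARTS_AT_RANDOMIZATION:
        clock_start = randomized_on
    else:
        clock_start = released_on
    if clock_start is None:
        return None
    return [clock_start + timedelta(days=d) for d in FOLLOW_UP_DAYS]


# Hypothetical participants (dates invented for illustration).
print(follow_up_due_dates("Delaware", date(2007, 1, 10)))
print(follow_up_due_dates("Kentucky", date(2007, 1, 10), date(2007, 3, 1)))
print(follow_up_due_dates("Virginia", date(2007, 1, 10)))  # never released
```

Writing the rule this way makes the comparability problem visible: two participants randomized on the same day can have follow-up windows that are weeks apart, or no window at all, depending only on the site.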

Another issue that may have affected implementation was the initial selection of the group of offenders to watch the video at each site. Generally, these groups were gathered by personnel at the facilities who were given the criteria for the study (offenders had to be within 60 days of release, speak English, and not have cognitive impairments). Researchers were not often privy to the decisions about the type of offenders that participated in the sessions. It may be that different types of groups were selected for inclusion at the different sites.

Since follow-up was dependent on release in both Kentucky and Virginia, a substantial percentage of those randomized were ineligible for follow-up because they were not released during the time frame of the study. While those randomized were initially scheduled for release within 60 days of recruitment, the release date often changed because of pending charges or other issues. In Kentucky, 33% of the randomized population was never released, and in Virginia, 41% of the randomized population was not released during the time frame of the study. This issue had the potential to create selection bias and differential attrition. The final CONSORT chart for this study is given in Fig. 3. A substantial number of those assessed were lost both before completing the baseline and after randomization, because of prison release issues.
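One way to examine the differential attrition concern raised above is to compare follow-up rates by arm under two denominators: everyone randomized (the intent-to-treat group) and only those actually released. The sketch below is a minimal illustration under stated assumptions. The function names, arm labels, and all counts are hypothetical and are not the study's data.

```python
def follow_up_rates(arms):
    """Compute per-arm follow-up rates.

    `arms` maps an arm name to a dict of counts with the keys
    'randomized', 'released', and 'followed' (hypothetical structure).
    """
    rates = {}
    for arm, c in arms.items():
        n_rand, n_rel, n_fol = c["randomized"], c["released"], c["followed"]
        if n_rand <= 0 or not (0 <= n_fol <= n_rel <= n_rand):
            raise ValueError(f"inconsistent counts for arm {arm!r}")
        rates[arm] = {
            # Share of all randomized participants with a completed follow-up.
            "itt": n_fol / n_rand,
            # Share of released participants with a completed follow-up.
            "released_only": n_fol / n_rel if n_rel else None,
            # Share of randomized participants who were never released.
            "never_released": 1 - n_rel / n_rand,
        }
    return rates


def max_gap(rates, key):
    """Largest difference between arms on one rate (a crude attrition flag)."""
    values = [r[key] for r in rates.values() if r[key] is not None]
    return max(values) - min(values)


# Hypothetical counts, invented purely for illustration.
hypothetical_counts = {
    "current_practice": {"randomized": 100, "released": 65, "followed": 52},
    "nida_standard": {"randomized": 100, "released": 60, "followed": 45},
    "dvd": {"randomized": 100, "released": 70, "followed": 49},
}

rates = follow_up_rates(hypothetical_counts)
for arm, r in rates.items():
    print(arm, {k: round(v, 2) for k, v in r.items()})
print("ITT gap:", round(max_gap(rates, "itt"), 2))
print("Released-only gap:", round(max_gap(rates, "released_only"), 2))
```

A large gap between arms on either rate would suggest that losses were not balanced across conditions. A formal test of the difference would be a separate step and is not shown here.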

Finally, in one site, the same interventionist, a peer counselor, administered the NIDA arm and DVD arm for a period of time. Bias should have been minimized since the interventionists could also do the testing for those in the control arm. There is a potential that the interventionists provided information to that group that was specific to their arm of the study. The interventionists were not blind to the study allocation of the subjects.

Discussion

Multisite trials are important tools to accomplish the scientific goals of (1) testing the efficacy of an innovation; (2) simultaneously “replicating” the study in multiple sites to determine the robustness of the innovation; and (3) allowing the unique characteristics of agencies, communities, and/or target populations to be concurrently examined in the test of an innovation to specify acceptable parameters. The trials represent the science of conducting field experiments but the process also reveals that there is an art in conducting randomized trials. It is the art – the crafting of the experiment, the mechanisms to conduct the experiment, and the daily decisions regarding the handling of “real-world” situations – that determines whether the experiment achieved the goal of testing the theoretically based innovation.

The analysis in this research paper presented the art in terms of issues that have been identified as threats to internal validity (Dennis et al. 2000). We used these threats to internal validity to demonstrate how experimental processes and research designs affect the integrity of, and findings from, an experiment. These two experiments allow us to draw lessons about study management to reduce the degree to which the threats affect the dependent variables:

- Confounding. An important step in reducing the influence of third variables is to map three pipeline flows before the experiment begins: (1) the research process in each participating site, (2) the core components of the innovation, outlined to determine what other factors affect the independent variable, and (3) the potential mediators or moderators that may affect the independent variables. All three of these preexperimental processes are important to minimize extraneous variables.

- Selection Bias. The inclusion and exclusion criteria of an experiment are critical in assessing potential sources of bias. But more important than simply analyzing these a priori decisions is to map these criteria to the study population to ensure that the research processes are not introducing selection bias. For example, in the Step’N Out experiment, the researchers in one study site were initially unaware that the agency did not release offenders without the use of extra controls (e.g., electronic monitoring). When this was discovered in the early stages of the experiment, it resulted in consideration of new eligibility criteria. Maps of the system would assist in this process.

The two multisite trials analyzed here had other selection biases that crept into the study such as the use of different types of recruitment sites in the HIV/Hep C study. While this variation was useful in assessing the elasticity of the innovation across different correctional settings (prison, work release, jail), it introduced new problems, including a large population of post-randomization ineligibles who were not released from the prison/jail within the study period of 12 months. This created a potential bias because it affects the intent to treat group, and the differences in releasees versus detainees must be assessed to determine the degree to which the study protocol affected study outcomes. The use of a historical control group can mitigate issues with selection bias for this part of the population and can also reduce other biases, including diffusion, compensatory rivalry, and experimenter bias. The researcher must usually rely on records to obtain data for the historical group, but this strategy is useful, especially when the experimental condition appears to be clearly superior to the treatment as usual and denying it to a portion of the current population would be unethical. Another related issue is to have a historical control group that could define the base rates for the dependent variables which can assist with understanding whether there was a Hawthorne effect with the study or whether the inclusion and exclusion criteria affect the dependent variable(s).

- History. The impact of events outside of the experiment, either at the onset of the study or between measurement periods, may influence measured outcomes or independent variables. In Step’N Out, a crackdown on parolees in Connecticut occurred during the follow-up period, resulting in a higher number of parole violations than would have otherwise occurred. While it was felt that this event did not differentially affect the study groups, it was presumed that the overall number of parole violations was higher in the study than it normally would have been. This information is important to know and to report with all analyses of the study, especially as parole violations were considered a main outcome and ended up being much lower for the CBM group than the control group.

- Maturation. During the experiment, subjects may change, and this change may affect the study participant’s outcomes on the dependent variable. In both studies, some sites had longer times between randomization and the follow-ups because of timing and release issues, and some subjects also had longer times until their follow-ups were completed. In these cases, it is difficult to determine if the intervention caused positive changes or if more time in the community and exposure to other factors could be a possible cause. Future studies could focus on ensuring that randomization and follow-up schedules are implemented so that there is little room for variance and that research interviewers are trained to understand the importance of completing follow-ups in the given time windows. For example, studies that focus on the release period could delay randomization until an offender is released to avoid the uncertainty associated with release dates.

- Mortality/Differential Attrition. Study dropouts may be the result of the person, the experiment, or the situation. In some Step’N Out sites, the CBM group had lower follow-up rates at both 3 and 9 months, indicating that offenders may have found it more difficult to complete the CBM intervention and to stay connected with the parole office. The implementation of the intervention at these sites should be explored to determine how it differed from other sites. In Oregon, for example, those participating in CBM had to attend parole sessions at a different office which may have been inconvenient for them. In another site, the CBM officer was required to also maintain a caseload of regular parole clients, and she often had scheduling conflicts with her CBM clients. These issues provide insight into how implementation can affect participation. One suggestion for future iterations of Step’N Out was to have it be an office-based, rather than officer-based intervention, where the entire parole office practiced CBM.

- Diffusion. The experimental category should be different than traditional practice, and movement from the experimental to control group or vice versa may pollute the assigned conditions. While there was no known crossover from one study condition to another, there was potential in both studies for offenders from different conditions to mix with each other and share information. In Step’N Out, one site had a control group that was not dissimilar to the CBM group, as the study site had specialized parole services where POs met with their clients weekly. These POs often used novel methods of sanctions and rewards. In these cases, it is difficult to determine the explicit effects of the intervention under study, as (1) the control group may have been influenced by the intervention and (2) the control group may not reflect current practice accurately. For multisite trials, this variation among current practice at sites is an issue that is often raised. An initial feasibility study at each site should identify potential issues and provide insights on how each site works. Each study protocol should have some flexibility so that it can be adapted to multiple sites but also must specify minimum conditions for the control group that must be met in order for the site to participate.

- Compensatory Rivalry/Resentful Demoralization. The control group behavior could be tainted by being in the study. This is more of a possibility in longer lasting interventions where the treatment under study appears as a “better” alternative to those who are targeted for treatment. In Step’N Out, there was some evidence that persons wanted to be in the CBM condition (often asking for this at randomization) and felt those in CBM were getting an “easy ride.” This could lead to resentment from the control group, especially if they were aware that the CBM group would receive material rewards for good behavior and they would not. This could create a disincentive for good behavior for those in the control group, and study results could be biased upward, with results better than they should be under normal circumstances. Researchers should also be careful in using language to describe the study conditions to ensure that the experimental condition is not seen as highly preferable to the current practice and should also work with staff at the agencies to train them not to present the intervention as an improvement or something better, as staff attitudes are usually quickly conveyed to offenders.

- Experimenter Bias. The researcher or research team may inadvertently affect the experiment by their actions. Both of the studies we examined here had interventionists on-site at justice agencies who were not blinded to the study condition assignment of the offenders. Blinding cannot be done for the interventionist in these types of studies as they must know what condition a person is in so that they can deliver the correct intervention. While a number of measures were in place to ensure fidelity (tapes in Step’N Out, checklists in HIV), there were concerns that POs may have implemented CBM differently at different sites and even at the same site, based on the person involved. The same is true for the health educator and peer interventionist in HIV, especially the peer counselor who had less of a script to work with and used more of their own experience in working with their clients. While the tapes were a good idea in Step’N Out, they were not used effectively as a feedback tool, as there was a long delay in coding and providing feedback to the POs. Because delivery of the intervention is such a key component of the study, researchers should make provisions for ongoing and timely fidelity monitoring of the intervention, with a clear plan on how fidelity will be tracked, how feedback will be provided, and how corrective actions will be taken (Carroll et al. 2007).

As a researcher considers validity threats to a multisite experiment, they should ask themselves the following five questions and carefully consider how the decisions that are made affect the experiment:

- How does the issue affect the flow of subjects on the CONSORT chart?

- How does the issue affect inclusion and exclusion (selection forces)?

- Does the issue disrupt the theory underlying the intervention? Or does it disrupt the treatment as usual?

- How might the issue affect the dependent variable(s)?

- How might the issue affect possible confounders?

Conclusion

Multisite field experiments can test an innovation as well as the environment where an innovation can be offered. In addition, the multisite field experiment requires the researcher and/or research team to be even more sensitive to the impact of design and implementation decisions on the integrity of the experiment, as well as the generalizability to the wider target population. This research paper has been designed to help researchers think about the issues related to internal validity threats and how to make decisions about the target population, eligibility processes, intervention design and processes, and instruments. Managing one study site presents sufficient challenges, while managing more than one study site requires the researchers and investigator(s) to be cognizant of how each decision at each site might affect whether the goals of the study can be attained. In both case studies, we have examples where practical realities crept into the experiment. More work will need to be done to determine the impact on the outcome variables. In the end, it is apparent that scientists must also be good research administrators to ensure the study sites uphold the experimental design and that each decision is weighed against the concern about the integrity of the design.

Bibliography:

- Balas EA, Austin SM, Ewigman BG, Brown GD, Mitchell JA (1995) Methods of randomized controlled clinical trials in health services research. Med Care 33(7):687–699

- Bond G, Evans L, Saylers M, Williams J, Kim H (2000) Measurement of fidelity in psychiatric rehabilitation. Ment Heal Serv Res 2(2):75–87

- Boutron I, Moher D, Altman DG, Schulz KF, Ravaud P (2008) Extending the CONSORT statement to randomized trials of nonpharmacologic treatment: explanation and elaboration. Ann Intern Med 148(4):295–309

- Campbell DT, Stanley JC (1966) Experimental and quasi-experimental designs for research. Houghton Mifflin, Boston

- Carroll C, Patterson M, Wood S, Booth A, Rick J, Balain S (2007) A conceptual framework for implementation fidelity. Implement Sci 2:40–48

- Cohen J (1988) Statistical power analysis for the social sciences, 2nd edn. Lawrence Erlbaum Associates, New York

- Dennis ML, Perl HI, Huebner RB, McLellan AT (2000) Twenty-five strategies for improving the design, implementation and analysis of health services research related to alcohol and other drug abuse treatment. Addiction 95:S281–S308

- Djulbegovic B, Cantor A, Clarke M (2003) The importance of the preservation of the ethical principle of equipoise in the design of clinical trials: relative impact of the methodological quality domains on the treatment effect in randomized controlled trials. Account Res 10(4):301–315

- Downs S, Black N (1998) The feasibility of creating a checklist for the assessment of the methodological quality both of randomised and non-randomised studies of health care interventions. J Epidemiol Commun Health 52(6):377–384

- Friedmann PD, Katz EC, Rhodes AG, Taxman FS, O’Connell DJ, Frisman LK, Burdon WM, Fletcher BW, Litt MO, Clarke J (2008) Collaborative behavioral management for drug-involved parolees: rationale and design of the step’n out study. J Offender Rehabil 47(3):290–318

- Inciardi JA, Surratt HL, Martin SS (2007) Developing a multimedia HIV and hepatitis intervention for drug-involved offenders reentering the community. Prison J 87(1):111–142

- MacKenzie D, Browning K, Skroban S, Smith D (1999) The impact of probation on the criminal activities of offenders. J Res Crime Delinq 36(4):423–453

- Meredith W, Tisak J (1990) Latent curve analysis. Psychometrika 47:47–67

- Moher D, Schulz KF, Altman DG (2001) The CONSORT statement: revised recommendations for improving the quality of reports of parallel-group randomised trials. Lancet 357(9263):1191

- National Institute on Drug Abuse (2000) The NIDA community-based outreach model: a manual to reduce the risk of HIV and other blood-borne infections in drug users. National Institute on Drug Abuse, Rockville, Report nr (NIH Pub. No. 00-4812)

- Olivo SA, Macedo LG, Gadotti IC, Fuentes J, Stanton T, Magee DJ (2008) Scales to assess the quality of randomized controlled trials: a systematic review. Phys Ther 88(2):156–175

- Raudenbush SW (2001) Comparing personal trajectories and drawing causal inferences from longitudinal data. Annual Reviews, Palo Alto

- Weinberger M, Oddone EZ, Henderson WG, Smith DM, Huey J, Giobbie-Hurder A, Feussner JR (2001) Multisite randomized controlled trials in health services research: scientific challenges and operational issues. Med Care 39(6):627–634

- Weisburd D (2003) Ethical practice and evaluation of interventions in crime and justice: the moral imperative for randomized trials. Eval Rev 27(3):336–354

- Weisburd D, Taxman FS (2000) Developing a multicenter randomized trial in criminology: the case of HIDTA. J Quant Criminol 16(3):315–340
