This sample Behavioral Pharmacology Research Paper is published for educational and informational purposes only.

Behavioral pharmacology is an area of scientific study that bridges the fields of psychology and pharmacology, with subject matter covering any aspect of the interaction between drugs and behavior. Most often, behavioral pharmacologists study the influence of drugs on the entire behaving organism rather than focusing on the brain or periphery. The goal of behavioral pharmacology is to identify behavioral mechanisms involved in drug action (Branch, 2006).

Born out of learning theory, the field of behavioral pharmacology emerged from studies in two interesting but quite diverse research areas: (a) laboratory studies showing that animals can seek out and administer certain drugs to themselves (called self-administration), and (b) the discovery and application of chlorpromazine as a psychoactive substance that effectively treats symptoms of schizophrenia. Scientists in behavioral pharmacology have since sought to combine the subject matter of pharmacology with the methodology and theory of operant behavior. From this strong scientific basis, behavioral pharmacologists rigorously examine the functions and effects of drugs on behavior. Indeed, they discovered the most important tenets of behavioral pharmacology early in the development of the field: Drugs can function as reinforcers and as discriminative stimuli, and they can change expressions of behavior.

Some Background On Operant Behavior

To understand the principles of behavioral pharmacology, one must first understand a bit about operant behavior. The concept of operant behavior originated in the early 1900s and was later refined by B. F. Skinner and his followers. Skinner found that most voluntary behavior has specific and predictable outcomes, or consequences, that affect its future occurrence. One type of behavior-consequence relationship, reinforcement, is the process in which behavior is strengthened, or made more likely to occur, by its consequences. For example, a common practice in operant psychology is to examine the relation between food, a common and important environmental stimulus, and behavior. In a laboratory, a slightly food-deprived rat is placed in a standard operant chamber and trained to press a lever that produces a food pellet. If the frequency of lever pressing increases when presses produce food pellets, then the food pellet serves as a reinforcer for lever pressing.

Conversely, punishment occurs when behavior produces an unpleasant stimulus, thereby reducing the likelihood that the behavior will occur again. For example, if a rat presses a lever for a food pellet, but the experimenter also arranges an additional environmental event, such as a mild shock, contingent on the lever press, lever pressing will likely decrease in frequency. The punisher (shock), then, overrides the reinforcing properties of food. In Schedules of Reinforcement, Ferster and Skinner (1957) described how arranging different types of reinforcing and punishing contingencies for behavior results in predictable changes in behavior, regardless of whether the behavior studied is a lever press, key peck, button press, or any other freely occurring behavior.

Ferster and Skinner also showed that organisms use environmental cues to predict the likely consequences of behavior. For example, in the presence of a particular stimulus, an animal may learn that certain behavior leads to a reinforcer. The relationship among the stimulus, behavior, and the result of that behavior is called the three-term contingency. It is represented by the following notation:

$$S^{D} : B \rightarrow S^{R+}$$

In this case, SD refers to a discriminative stimulus, in whose presence a particular behavior, B, leads to positive reinforcement, SR+. The discriminative stimulus can be any environmental condition that an organism can perceive. It “occasions” responding, signaling that a specific behavior (B) will result in a specific consequence, in this case the SR+, or reinforcer. Consider a pigeon in an operant chamber that has been taught to peck a plastic disc in the presence of a green light. Pecking the disc when the light is green produces food reinforcement; pecking the disc in any other situation (e.g., when the light is purple) does not. The pigeon, then, learns to peck the disc only when the green light is illuminated. This process, called discrimination, is how most animals (including humans) learn to distinguish certain environmental situations from others.
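The pigeon example can be made concrete with a toy sketch. It encodes the three-term contingency as a function (food follows a peck only under the green light) and then shows discrimination emerging from differential reinforcement. The update rule is a simple linear-operator learning rule chosen purely for illustration. It is an assumption and not Skinner’s own account, and the names `consequence`, `train`, `alpha`, and `p_start` are hypothetical.

```python
# Toy sketch of the three-term contingency S^D: B -> S^R+ and of discrimination
# emerging from differential reinforcement. The linear-operator update rule and
# its parameters are illustrative assumptions, not Skinner's own formulation.

def consequence(light, pecked):
    """A peck produces food only in the presence of the green light (the S^D)."""
    if light == "green" and pecked:
        return "food"
    return None

def train(trials, alpha=0.2, p_start=0.5):
    """Return the peck probability for each light after a sequence of trials.

    Simplification: a peck is assumed to occur on every trial, so only the
    consequence of pecking differs between the two lights.
    """
    p = {"green": p_start, "purple": p_start}
    for light in trials:
        if consequence(light, pecked=True) == "food":
            p[light] += alpha * (1.0 - p[light])  # reinforced: move toward 1
        else:
            p[light] -= alpha * p[light]          # unreinforced: move toward 0
    return p

probs = train(["green", "purple"] * 20)
print({light: round(value, 3) for light, value in probs.items()})
```

After 20 trials with each light, pecking is very likely under green and very unlikely under purple. This is the pattern the text calls discrimination.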

The remainder of this research paper describes how drugs affect behavioral processes involved with the three-term contingency. More specifically, drugs may (a) affect behavior directly, (b) function as discriminative stimuli, and (c) act as reinforcers. Behavioral pharmacologists have studied these behavioral processes rigorously in the laboratory and have applied the findings to real-world, drug-related human phenomena such as substance abuse. This research paper, then, begins by describing some principles of behavioral pharmacology discovered through basic research and ends with a discussion of how these principles apply to pharmacotherapy and behavioral drug abuse treatment.

Direct Behavioral Effects Of Drugs

Administration of a psychoactive substance often directly affects behavior. For example, a drug may increase motor activity, impair coordination, or decrease the rate of behavior. Psychoactive drugs bind at receptor sites located throughout the central or peripheral nervous system to exert pharmacological, physiological, and behavioral effects. As more of a drug is ingested, it acts at more receptor sites, and its influence on behavior changes accordingly.

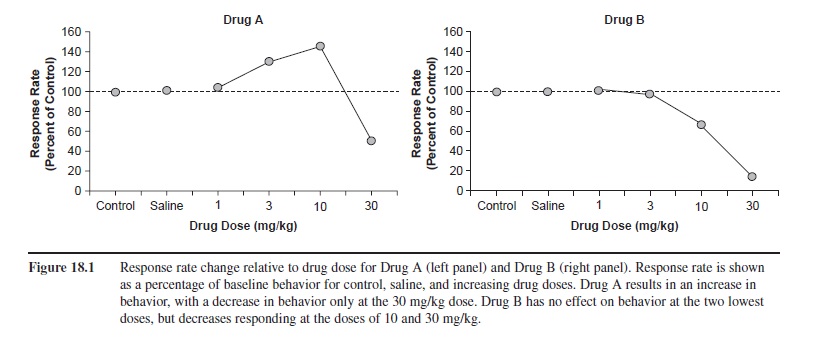

In behavioral pharmacology, a change in behavior as a function of drug dose is represented by a dose-response curve. Figure 18.1 illustrates two hypothetical dose-response curves. Drug dose is represented along the x-axis in milligrams of drug per kilogram of body weight (mg/kg). The y-axis shows response rate as a percentage of the baseline rate. Baseline rates of behavior (behavior without any drug present) are labeled “control,” and the saline (placebo) condition is labeled “saline.” Drug A has no effect at 1 mg/kg, but it increases response rates at 3 and 10 mg/kg and decreases them relative to baseline at 30 mg/kg. This rise and fall is characteristic of a biphasic (two-phase) dose-response curve. Although this example is fictitious, the curve is representative of how stimulants such as amphetamine or cocaine affect behavior. Drug B shows a different effect: Lower doses do not change behavior, but higher doses cause response rates to drop relative to baseline. This curve, then, depicts a monophasic (one-phase) dose-response curve.

Figure 18.1 Response rate change relative to drug dose for Drug A (left panel) and Drug B (right panel). Response rate is shown as a percentage of baseline behavior for control, saline, and increasing drug doses. Drug A results in an increase in behavior, with a decrease in behavior only at the 30 mg/kg dose. Drug B has no effect on behavior at the two lowest doses, but decreases responding at the doses of 10 and 30 mg/kg.
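The distinction between the two curve shapes can be expressed in a short Python sketch. It converts response rates to percentages of the control rate. It then labels a curve biphasic if responding moves above baseline and then below it (or the reverse) across increasing doses, and monophasic if responding moves in only one direction. All rates are invented to mirror the fictitious Figure 18.1. The ±10 percentage-point “no effect” band is an arbitrary assumption, not a standard criterion.

```python
# Hypothetical sketch: express response rates as percent of baseline and label a
# dose-response curve as biphasic or monophasic. All numbers are invented to
# mirror the fictitious Figure 18.1; the 10-point "no effect" band is arbitrary.

def percent_of_baseline(rates, control_rate):
    """Convert response rates to percentages of the control (no-drug) rate."""
    return [100.0 * r / control_rate for r in rates]

def classify_curve(percents, band=10.0):
    """Classify a curve by the directions in which it departs from 100 percent."""
    directions = []
    for p in percents:
        if p > 100.0 + band:
            d = "up"
        elif p < 100.0 - band:
            d = "down"
        else:
            continue  # treat small deviations as no effect
        if not directions or directions[-1] != d:
            directions.append(d)  # record each change of direction once
    if not directions:
        return "no effect"
    return "monophasic" if len(directions) == 1 else "biphasic"

doses = [1, 3, 10, 30]             # mg/kg
control = 50.0                     # hypothetical responses per minute, no drug
drug_a = [50.0, 75.0, 90.0, 20.0]  # no effect at 1, up at 3 and 10, down at 30
drug_b = [49.0, 51.0, 30.0, 10.0]  # no effect at 1 and 3, down at 10 and 30

for name, rates in [("Drug A", drug_a), ("Drug B", drug_b)]:
    pct = percent_of_baseline(rates, control)
    by_dose = dict(zip(doses, (round(x) for x in pct)))
    print(name, by_dose, classify_curve(pct))
```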


Rate Dependence

One of the most interesting discoveries in behavioral pharmacology is rate dependence: A drug’s effect on behavior depends on the baseline rate of that behavior. Dews (1955) first demonstrated this effect and, in doing so, launched the behavioral pharmacology movement. Pigeons pecked a disc under two different schedules of reinforcement: One schedule selected a high rate of disc pecking for food reinforcement; the other selected a low rate. Dews then administered pentobarbital, a depressant. The same dose of the drug had very different effects on behavior, depending on whether a high or a low rate of pecking had occurred before drug administration. When the pigeon pecked at a low rate under baseline conditions, a 1 mg/kg dose decreased response rate dramatically. When the pigeon pecked at a high rate during baseline, the same dose increased response rate substantially. Although the link is speculative, rate dependence may help explain why a stimulant such as Ritalin®, which is used to treat attention deficit disorder, may have calming effects for hyperactive individuals but stimulating effects for typical individuals, who likely have lower baseline rates of behavior.
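One conventional way to quantify rate dependence is a log-log plot. The drug-condition rate, expressed as a proportion of the control rate, is plotted on a log scale against the control rate, also on a log scale. A slope near zero means the drug changes every baseline rate by the same proportion. A negative slope means low rates are raised relative to high ones. The sketch below fits that slope by ordinary least squares. The rates are hypothetical and follow the stimulant pattern mentioned above, with low rates increased and high rates decreased. They are not Dews’s data.

```python
import math

def rate_dependency_slope(control_rates, drug_rates):
    """Least-squares slope of log10(drug/control) regressed on log10(control).

    Slope near 0: the drug changes all baseline rates by the same proportion.
    Nonzero slope: the drug's effect depends on the baseline rate.
    """
    xs = [math.log10(c) for c in control_rates]
    ys = [math.log10(d / c) for c, d in zip(control_rates, drug_rates)]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return sxy / sxx

# Hypothetical responses per minute: low baseline rates rise, high ones fall.
control = [1.0, 10.0, 100.0]
drug = [4.0, 10.0, 25.0]
print(round(rate_dependency_slope(control, drug), 2))
```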

Behavioral History

As rate dependence exemplifies, it is often incorrect to make sweeping comments like “pentobarbital depresses behavior,” “cocaine increases response rates,” “morphine disrupts behavior,” or “alcohol increases errors.” Drug-behavior interactions are dynamic, and many variables affect how one influences the other. Behavioral history, or the types of experiences one has before taking a drug, plays a substantial part in the effects that a drug will have on behavior.

A classic study in behavioral pharmacology shows that the way a discrimination is trained affects how robust it is to a drug’s effects. Terrace (1966) trained one group of pigeons to discriminate a vertical line from a horizontal line by reinforcing pecks on the horizontal-line key while allowing the pigeons to discover that pecks on the vertical-line key (called errors) would not produce reinforcement. He trained the discrimination differently with a second group of pigeons: The vertical line was introduced so gradually that the pigeons responded to it very little, if at all. (Gradually fading in a second stimulus in this way is called errorless learning.) Next, Terrace administered chlorpromazine (used to treat schizophrenia) and imipramine (an antidepressant) to both groups. Pigeons with a history of errorless training showed no signs of drug-induced disruption in their behavior: They maintained their discrimination of the vertical from the horizontal line. However, the pigeons with standard discrimination training (where errors were free to occur, and often did) showed disruption that was related to the dose of both drugs: The higher the dose, the greater the disruption.

Terrace’s (1966) study showed that drugs may affect the same behavior (discriminating line orientations) differently, depending on how the behavior was trained. Conversely, some drugs may affect different behaviors in a similar manner. For example, Urbain, Poling, Millam, and T. Thompson (1978) gave two groups of rats different reinforcement schedule histories to examine how behavioral history interacts with drug effects. They trained one group to press a lever at high rates and the other at low rates. After responding stabilized in each group, the experimenters placed both groups on a fixed-interval 15-second (FI 15) schedule, in which the first response after 15 seconds have elapsed produces a reinforcer. Both groups maintained the response rates established by their previous training (high or low), despite being under the same schedule. The experimenters then administered the stimulant d-amphetamine. Regardless of initial response rate, d-amphetamine produced responding at a constant, moderate level in both groups: It lowered the high response rates and raised the low response rates. This effect is termed “rate constancy.”
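The fixed-interval contingency can be written as a small function. The sketch makes one timing assumption: the interval is timed from session start and then from each reinforcer delivery. Only the first response after 15 s has elapsed is reinforced. Earlier responses have no programmed consequence. The function name and the response times are hypothetical.

```python
def fixed_interval(response_times, interval=15.0):
    """Return the times (s) of responses that produce a reinforcer on an FI schedule.

    Assumption: the interval is timed from session start (t = 0) and then from
    each reinforcer delivery.
    """
    reinforced = []
    interval_start = 0.0
    for t in sorted(response_times):
        if t - interval_start >= interval:
            reinforced.append(t)   # first response after the interval has elapsed
            interval_start = t     # the next interval starts at this delivery
    return reinforced

# Hypothetical response times (seconds) from a short stretch of a session.
presses = [2.0, 9.5, 14.9, 15.2, 16.0, 29.0, 31.0, 47.0]
print(fixed_interval(presses))
```

Extra responses within an interval earn nothing under this rule. The schedule itself therefore does not force either a high or a low response rate. This is consistent with both groups retaining their previously trained rates.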

As the examples in this section show, drugs can have both direct and indirect effects on behavior. Although psychoactive drugs exert their effects primarily through interactions with central nervous system neurotransmitter systems, the context of drug administration is an important determinant of how a drug affects behavior.

Drugs As Discriminative Stimuli

When people or animals ingest a psychoactive drug, physiologically perceptible events may occur. For example, ingestion of over-the-counter cold medicine containing pseudoephedrine may occasion a person to report feeling “drowsy” or “nervous.” Ingestion of a stimulant may occasion another person to report feeling “alert” or “hyper.” Researchers can train animals and humans to discriminate whether they have been exposed to different types of drugs using these internal cues (called interoreceptive stimuli), much as they can be trained to discriminate among stimuli such as colors or line orientations in the external environment.

Researchers use signal detection procedures to test discriminability along sensory dimensions (e.g., a visual stimulus dimension, such as detail or acuity, or an auditory stimulus dimension, such as volume). The psychophysicist Gustav Fechner developed procedures to study the detection of “signals,” or stimuli. He developed the concept of the “absolute threshold,” the lowest intensity at which a stimulus can be detected, by repeatedly presenting stimuli and requiring subjects to indicate whether they detected each one. Scientists can use these same procedures to test for the interoreceptive cues associated with ingestion of a drug, an approach referred to as drug discrimination. For example, one may wish to determine the lowest dose at which a drug is detectable, whether an organism perceives two different drugs (e.g., morphine and heroin) as the same drug stimulus, and how organisms perceive combinations of different drugs (e.g., whether a small dose of ephedrine plus a small dose of caffeine feels like a large dose of amphetamine).
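Fechner’s repeated-presentation logic can be sketched as a threshold estimate. The text defines the absolute threshold only as the lowest detectable intensity. This sketch assumes the common operational convention of treating the threshold as the intensity detected on 50 percent of trials. It interpolates linearly between the two tested intensities that bracket that criterion, which is a simplification. Applied to drug discrimination, “intensity” becomes dose and “detected” becomes a report (or response) that the drug was present. The doses and proportions are hypothetical.

```python
def absolute_threshold(intensities, p_detected, criterion=0.5):
    """Interpolate the intensity at which detection first reaches `criterion`.

    `intensities` must be increasing; `p_detected[i]` is the proportion of trials
    on which intensities[i] was reported as detected. Returns None if detection
    never reaches the criterion.
    """
    points = list(zip(intensities, p_detected))
    if points and points[0][1] >= criterion:
        return points[0][0]  # already at criterion at the lowest intensity tested
    for (x0, p0), (x1, p1) in zip(points, points[1:]):
        if p0 < criterion <= p1:
            # Linear interpolation between the two bracketing intensities.
            return x0 + (criterion - p0) * (x1 - x0) / (p1 - p0)
    return None

# Hypothetical doses (mg/kg) and proportion of trials reported as "drug".
doses = [0.3, 1.0, 3.0, 10.0]
p_drug = [0.05, 0.30, 0.70, 0.95]
print(absolute_threshold(doses, p_drug))
```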

In one study designed to determine which drugs subjectively “feel” like morphine, Massey, McMillan, and Wessinger (1992) taught pigeons to discriminate a 5 mg/kg dose of morphine from a placebo (saline) by pecking one of two discs to produce food reinforcement. Pecking one disc (called the “saline disc”) produced access to grain only when saline had been administered before the session. Pecking the other disc (called the “morphine disc”) produced access to grain only when morphine had been administered before the session. After the pigeons learned to discriminate the 5 mg/kg dose of morphine from saline, the experimenters administered other doses of morphine and doses of other drugs (d-amphetamine, pentobarbital, fentanyl, and MK-801) before experimental sessions. At very low doses of morphine, pigeons responded on the saline disc, indicating that they could not detect morphine at these doses; at higher doses, they could detect it. Additionally, by administering drugs from classes other than that of morphine (an opiate), researchers can determine whether an animal perceives differences among drugs. Fentanyl, also an opiate, occasioned responding on the morphine disc, but all of the other drugs resulted in responding on the saline disc. Thus, the pigeons perceived fentanyl and morphine as similar but perceived the other drugs either as different from morphine or as not perceptible at all.
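Generalization tests like these are usually summarized as the percentage of responses made on the drug-appropriate disc or lever. The sketch below computes that percentage and labels each test. It assumes an 80 percent cutoff for full substitution and a 20 percent cutoff for saline-like responding. These cutoffs are a convention assumed here for illustration and are not values reported by Massey et al. The test names and peck counts are hypothetical.

```python
def percent_drug_disc(drug_disc_pecks, saline_disc_pecks):
    """Percentage of all pecks in a test session made on the drug-appropriate disc."""
    total = drug_disc_pecks + saline_disc_pecks
    if total == 0:
        raise ValueError("no responses in this session")
    return 100.0 * drug_disc_pecks / total

def classify_test(pct, full=80.0, saline_cut=20.0):
    """Label a test result using assumed (hypothetical) substitution cutoffs."""
    if pct >= full:
        return "substitutes for training drug"
    if pct <= saline_cut:
        return "saline-like"
    return "partial"

# Hypothetical test sessions: (pecks on morphine disc, pecks on saline disc).
tests = {
    "very low dose of training drug": (5, 95),
    "training dose": (92, 8),
    "test drug X": (88, 12),
    "test drug Y": (10, 90),
}
for name, (drug, saline) in tests.items():
    pct = percent_drug_disc(drug, saline)
    print(f"{name}: {pct:.0f}% -> {classify_test(pct)}")
```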

Humans can also discriminate opiates (Preston, Bigelow, Bickel, & Liebson, 1989). Former heroin addicts volunteered, for monetary payment, to discriminate three conditions from one another: saline, hydromorphone, and pentazocine (the latter two are opioid agonists), labeled “Drug A,” “Drug B,” and “Drug C,” respectively. After training, the experimenters varied the doses of the substances to assess discriminability at different doses. Finally, Preston et al. administered drugs that partially mimic and partially block the effects of opioid agonists (butorphanol, nalbuphine, and buprenorphine) to test for generalization to hydromorphone and pentazocine. Not surprisingly, both hydromorphone and pentazocine were discriminated more accurately at doses higher than the training doses. The subjects also identified butorphanol as pentazocine at higher doses. Nalbuphine, however, was identified as neither opioid. Interestingly, the participants sometimes identified buprenorphine as pentazocine and sometimes as hydromorphone, which suggests that buprenorphine shares some properties with both drugs. Thus, drugs with similar neurotransmitter actions can be discriminated from one another (e.g., hydromorphone vs. pentazocine), but some drugs are discriminated as the same drug (e.g., pentazocine and butorphanol).

Branch (2006) pointed out that discrimination procedures are useful for categorizing novel drugs and for testing whether a novel drug has abuse liability. If a trained organism responds to a novel drug as it does to a known drug of abuse, then further study of the novel drug’s abuse liability is warranted.

Drugs As Reinforcers

A breakthrough for early behavioral pharmacology research was the discovery that drugs could function as reinforcers. For example, in an early study, Spragg (1940) used food reinforcement to train a chimp to lie across a researcher’s lap to receive a morphine injection. After regular morphine administrations, however, the chimp demonstrated that it “wanted” the injection by guiding the researcher to the location where injections were given, handing him the needle, and then bending over his knee. Later, Spragg gave the chimp two sticks that opened different boxes containing morphine or food. When deprived of either food or morphine, the chimp chose the corresponding box. Spragg’s research produced the first evidence that a nonhuman animal would work to obtain a drug. Until this time, drug use and abuse were viewed as uniquely human phenomena and as a defect of personal character, not as physiological or behavioral phenomena.

Animal Research on Drug Self-Administration

Years after Spragg’s findings, researchers began conducting laboratory studies to determine whether drug self-administration could be studied in animals and whether the findings would generalize to humans. Laboratory studies of animal self-administration are important because they allow researchers to manipulate variables and determine cause-and-effect relationships related to substance abuse in ways that would not be ethical with humans.

Headlee, Coppock, and Nichols (1955) devised one of the first controlled operant procedures for drug administration in animals. The experimenters pretreated rats with morphine, codeine, or saline for two weeks, and during this time also recorded the head movements of restrained rats. Then, they injected one of the drugs, but only if the rat held its head to a nonpreferred side longer than the preferred side during a 10-minute period. The rats shifted their head positioning to the nonpreferred side when receiving morphine or codeine, but not saline. These findings suggest that morphine and codeine were reinforcers for head movement.

Weeks (1962) simplified self-administration procedures by creating an automated apparatus and methodology for delivering a drug contingent on lever pressing in an operant chamber. First, he established physical dependence by repeatedly administering escalating doses of morphine. He then fitted each rat with a saddle that held in place a cannula through which a 10 mg/kg dose of morphine could be injected directly into the heart, immediately contingent on programmed lever-press response requirements. Under this arrangement, lever pressing increased, which indicated that morphine functioned as a reinforcer. When the dose of morphine was reduced to 3.2 mg/kg, response rates increased. Moreover, when morphine injections were discontinued, responding gradually declined and ceased after three hours, suggesting extinction of drug-reinforced responding.

In a second experiment, Weeks (1962) increased the response requirements for morphine, and the animals in turn increased their responding to meet them. When the animals were pretreated with nalorphine, a morphine antagonist (which diminishes or blocks the effects of morphine), drug self-administration decreased dramatically. This study is important for several reasons: (a) it confirmed that morphine functioned as a reinforcer; (b) it showed predictable changes in behavior as a function of the type of reinforcement delivery; and (c) it showed that pretreatment with a morphine antagonist decreased the reinforcing efficacy of morphine, as evidenced by a decrease in morphine-maintained responding. In addition, the apparatus and procedures made self-administration easy to arrange in rats, which set the stage for the study of drug self-administration today.

One drawback of Weeks’ (1962) study as an animal model of drug use is that the experimenter initially created physical dependence on morphine by repeatedly administering morphine independently of behavior. Humans typically initiate drug use without physical dependence. Making physical dependence a precondition for self-administration could limit how well the data from this particular study generalize to the problem of drug abuse; recall that behavioral history is an important factor that can change the effects of drugs on behavior. Studies since then, however, show that dependence is neither a necessary nor a sufficient condition for drug self-administration.

For example, to determine whether dependence was necessary for self-administration, Pickens and T. Thompson (1968, as cited in Young & Herling, 1986) examined the reinforcing potential of cocaine without preexposure, while ruling out the possibility that changes in behavior were due to other physiological effects of cocaine, such as effects on motor function. Because cocaine is a stimulant, it could produce increases in behavior that reflect increases in general motor activity rather than motivation for cocaine reinforcement. An effective way to determine whether response increases are motivational in nature is to deliver the reinforcer without the lever-press contingency. If lever pressing continues when cocaine is “free,” then motivation for cocaine is presumably not the reason for lever pressing. The experimenters initially delivered 0.5 ml of cocaine for lever pressing, and responding occurred at a steady rate. When the experimenters delivered cocaine freely (independently of lever pressing), lever pressing decreased dramatically. In another condition, responding ceased when the researchers substituted saline for cocaine. This study convincingly linked drug self-administration in animals to its counterpart, human drug abuse. The animals initiated and maintained drug self-administration, and their self-administration varied as environmental conditions changed. Moreover, physical dependence was not a necessary condition for cocaine self-administration to occur.
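The logic of the response-independent (“free”) delivery control can be summarized as a comparison of response rates across phases. If responding stays high when the drug no longer depends on lever pressing, a general motor-stimulant effect is the simpler explanation. If responding falls, the drug was functioning as a reinforcer. In this sketch, the phase names, the rates, and the 50 percent drop used as a decision rule are all hypothetical assumptions. The study is described only qualitatively in the text.

```python
# Hypothetical sketch of the logic of a response-independent ("free") delivery
# control. Phase names, rates, and the 50 percent criterion are assumptions.

def supports_reinforcement(rates, drop=0.5):
    """True if responding falls well below the contingent-drug rate both when the
    drug is delivered freely and when saline replaces the drug.

    If the drug merely stimulated motor activity, responding would stay high
    under free delivery and this check would return False.
    """
    cutoff = (1.0 - drop) * rates["contingent drug"]
    return rates["free drug"] < cutoff and rates["contingent saline"] < cutoff

# Hypothetical mean lever presses per minute in each phase.
reinforcer_pattern = {"contingent drug": 12.0, "free drug": 1.5, "contingent saline": 0.4}
motor_pattern = {"contingent drug": 12.0, "free drug": 11.0, "contingent saline": 0.4}
print(supports_reinforcement(reinforcer_pattern), supports_reinforcement(motor_pattern))
```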

More generally, self-administration patterns in nonhumans often reflect self-administration patterns in humans. Deneau, Yanagita, and Seevers (1969) found that drug-naive monkeys self-administered morphine, cocaine, d-amphetamine, pentobarbital, caffeine, and ethanol, all of which are drugs that humans self-administer. Interestingly, monkeys self-administered both morphine and cocaine continually until they died of overdose. They self-administered caffeine, but not consistently, and periods of abstinence occurred. Ethanol was not a reinforcer for all monkeys, and one monkey stopped self-administering it after a month of consistent intake. Most of the monkeys did not self-administer nalorphine, chlorpromazine, mescaline, or saline. The results from nonhuman animal research (see also Perry, Larson, German, Madden, & Carroll, 2005) are consistent with general trends in human drug abuse: Humans abuse the same drugs that animals self-administer.

The observation that an animal self-administers a drug does not always predict the magnitude of that substance’s abuse potential. Moreover, the rate of responding for a drug is not a reliable measure of abuse potential, because drugs differ in their time course of action: Pauses between self-administrations presumably vary with the drug’s action, its half-life, and the dose available per administration. Thus, an additional method for quantifying the “addiction potential” of each substance is necessary.

Progressive-ratio schedules, in which the response requirement increases for each successive reinforcer, provide a measure of response strength that is not directly related to rate of responding. The response requirement on a progressive-ratio schedule starts very small, often with the first response producing the reinforcer. The requirement for the next reinforcer increases in a systematic fashion (for example, from one lever press to five presses), and each subsequent requirement increases further until it is too high (in some cases, hundreds or thousands of responses for one reinforcer) to maintain responding (i.e., the animal no longer completes the response requirement for reinforcement). The highest completed ratio is designated the “break point.” Young and Herling (1986) reviewed studies of break points for drug reinforcers and concluded that cocaine maintains higher break points than amphetamines, nicotine, methylphenidate, secobarbital, codeine, heroin, and pentazocine.
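The break-point calculation takes only a few lines. The sketch generates a progression of ratio requirements and then reports the highest ratio completed before responding broke down. The additive progression (1, 5, 9, ...) extends the text’s “one lever press to five presses” example by assuming a constant step of 4. Published studies use a variety of progressions. The response counts are hypothetical.

```python
def pr_requirements(n_steps, start=1, step=4):
    """Hypothetical additive progression: 1, 5, 9, 13, ... responses per reinforcer."""
    return [start + step * i for i in range(n_steps)]

def break_point(requirements, responses_emitted):
    """Highest ratio completed before responding broke down.

    responses_emitted[i] is the number of responses made toward the i-th ratio;
    responding is treated as broken down at the first ratio not completed.
    """
    highest = 0
    for required, emitted in zip(requirements, responses_emitted):
        if emitted < required:
            break               # ratio not completed: the session effectively ends
        highest = required
    return highest

reqs = pr_requirements(8)       # [1, 5, 9, 13, 17, 21, 25, 29]
# Hypothetical session: ratios 1 through 17 completed, then only 6 of 21 responses.
emitted = [1, 5, 9, 13, 17, 6]
print(break_point(reqs, emitted))
```

Comparing drugs such as cocaine and heroin then amounts to comparing the break points they maintain under the same progression.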

Drug Self-Administration as a Choice

Because there is strong evidence that drugs function as reinforcers, researchers have attempted to understand the choice to self-administer drugs over engaging in nondrug-related behaviors, such as eating, or behaviors involved with “clean living.” In the basic operant literature, choice behavior was first studied with the concurrent schedule of reinforcement. Ferster and Skinner (1957) described a concurrent schedule as two or more schedules of reinforcement that operate independently of each other at the same time. Each schedule may be associated with different reinforcers (e.g., food vs. drug), amounts of reinforcement (e.g., low dose vs. high dose of a drug), responses (e.g., lever pressing vs. wheel running), rates of reinforcement (e.g., high vs. low), and delays to reinforcement (e.g., immediate vs. delayed). Consider the most commonly studied independent variable, rate of reinforcement. Different schedules may yield different reinforcement rates under controlled conditions. For example, if responding on one lever produces five reinforcers per minute and responding on a second lever produces one reinforcer per minute, lever pressing will show “matching”: The allocation of behavior between the schedules closely tracks the ratio of reinforcement available from each (e.g., Herrnstein, 1961). In this case, the organism will allocate about five times as much behavior to the “rich” lever as to the “lean” lever. The study of concurrently available consequences for different behaviors closely approximates “real-world” behavior; rarely, if ever, is someone faced with a circumstance in which only one behavior for one contingency can be emitted. The use of concurrent schedules is therefore an ideal method for examining drug preferences.
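Herrnstein’s (1961) matching relation states that the proportion of behavior allocated to an alternative equals the proportion of reinforcement obtained from it: B1/(B1 + B2) = R1/(R1 + R2). The sketch below applies that strict form to the text’s five-versus-one example. The function name is hypothetical. Observed allocation often deviates somewhat from strict matching.

```python
def matching_allocation(reinforcement_rates):
    """Predicted share of behavior for each alternative under strict matching.

    Each alternative's share of responding equals its share of the total
    reinforcement rate: B_i / sum(B) = R_i / sum(R).
    """
    total = sum(reinforcement_rates)
    return [r / total for r in reinforcement_rates]

rich, lean = 5.0, 1.0                     # reinforcers per minute on each lever
shares = matching_allocation([rich, lean])
print([round(s, 3) for s in shares])      # share of lever presses on each lever
print(round(shares[0] / shares[1], 2))    # rich-to-lean ratio of responding
```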

One drug-related principle uncovered with concurrent schedules is that higher doses of drugs are generally preferred over lower doses. Using concurrent schedules, Iglauer and Woods (1974) examined the effect of cocaine reinforcer magnitude on behavior allocation in rhesus monkeys. When the experimenters programmed large differences in cocaine doses, the animals preferred the larger dose, regardless of how many deliveries were available on each lever. However, when doses were equivalent, matching occurred based on the relative number of cocaine deliveries available on each lever.

Griffiths, Wurster, and Brady (1981) employed a different sort of task, a discrete-trial choice arrangement, to study choice between different amounts of food and different doses of heroin. Every three hours, baboons had an opportunity to respond for either an injection of heroin or a food reinforcer. During baseline conditions, baboons chose heroin injections on 30 to 40 percent of the trials. The baboons often alternated between food and heroin choices, rarely choosing heroin on two consecutive trials. Increasing the heroin dose, however, shifted preference away from the food and toward the drug.

One observation that arose from Griffiths et al.’s (1981) study and others like it is that self-administration of a drug depends not only on the magnitude of the drug reinforcer but also on the value of nondrug alternatives. Carroll (1985) provided evidence that a nondrug alternative can indeed alter drug self-administration. Saccharin and phencyclidine (PCP) were each available under a concurrent fixed-ratio schedule, conc FR 16 FR 16: Rats had to press one lever 16 times to produce access to the reinforcer on that lever, and the same contingency operated on the other lever. The experimenters tested three concentrations of saccharin and eight doses of PCP to assess preference. Increasing concentrations of saccharin shifted preference toward the saccharin lever and away from the drug lever, whereas increasing doses of PCP resulted in more drug choices and fewer saccharin choices. Campbell, S. S. Thompson, and Carroll (1998) later showed that preference shifted from PCP to saccharin when the response requirement for saccharin was low and the response requirement for PCP was high. This research provided important evidence that the availability of alternative reinforcers and their respective magnitudes can alter the relative efficacy of a drug reinforcer.
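The conc FR 16 FR 16 arrangement can be sketched as two independent response counters, one per lever. Each counter delivers its own reinforcer and resets after 16 presses. The class name, lever labels, and press sequence are hypothetical. Procedural details the text does not describe, such as any timeout after a delivery, are omitted.

```python
class ConcurrentFR:
    """Two (or more) independent fixed-ratio schedules operating at the same time."""

    def __init__(self, ratios):
        self.ratios = dict(ratios)                  # lever -> presses per reinforcer
        self.counts = {lever: 0 for lever in self.ratios}
        self.earned = {lever: 0 for lever in self.ratios}

    def press(self, lever):
        """Register one press; return True if it completes that lever's ratio."""
        self.counts[lever] += 1
        if self.counts[lever] == self.ratios[lever]:
            self.counts[lever] = 0                  # each lever's count resets independently
            self.earned[lever] += 1
            return True
        return False

# conc FR 16 FR 16 with hypothetical lever labels.
session = ConcurrentFR({"saccharin": 16, "PCP": 16})
presses = ["PCP"] * 40 + ["saccharin"] * 20        # hypothetical sequence of presses
for lever in presses:
    session.press(lever)
print(session.earned, session.counts)
```

Raising the requirement on one lever only, as in the Campbell et al. (1998) manipulation, corresponds to changing that lever’s entry in `ratios`.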

The discovery that drugs act as reinforcers has important implications for understanding the conditions under which drugs are “abused.” Changing environmental conditions can lead to changes in drug self-administration and drug preference. Under certain arrangements, competing reinforcers can reduce drug use, a finding of central importance for the treatment of drug abuse. Drawing on the basic behavioral pharmacology literature, researchers are developing behavioral treatments to control problem drug-related behaviors.

Treatment Implications Of Behavioral Pharmacology

It is useful to frame drug abuse as a problem of frequent drug self-administration. Studies throughout the history of behavioral pharmacology show that drug self-administration can be reduced by lowering the rate of reinforcement (how frequently the drug reinforcer is delivered), delaying delivery of the drug reinforcer, lowering the magnitude of the drug reinforcer, arranging punishment for drug self-administration (also called costs of self-administration), increasing the response requirement for the drug reinforcer, increasing the availability of alternative nondrug reinforcers, and reinforcing behavior that is incompatible with drug self-administration (Bigelow & Silverman, 1999). Two types of treatment for drug abuse rely heavily on findings from behavioral pharmacology: pharmacotherapy and contingency management.

Pharmacotherapy

A drug that blocks the effects of a drug of abuse can often attenuate the reinforcing properties of the drug of abuse. For many drug classes, there are drugs that block the class’s effects at a particular neurotransmitter system. Consider opiates. Naltrexone acts as an antagonist at opioid receptors and can block the effects of opioid agonists such as heroin or morphine. When administered together, agonists and antagonists work against each other, and the resulting behavioral and physiological effects are a function of the potency and dose of each drug administered (Sullivan, Comer, & Nunes, 2006). Naltrexone can therefore be used as a treatment for opiate abuse because it blocks, among other things, the reinforcing properties of opiates (e.g., Preston et al., 1999). Naloxone, an antagonist similar to naltrexone, can also be used to reverse opioid overdose rapidly.

Disulfiram (Antabuse) interferes with alcohol metabolism by inhibiting the enzyme acetaldehyde dehydrogenase. The resulting buildup of acetaldehyde in the blood produces unpleasant, hangover-like effects such as nausea and vomiting. Pretreatment with disulfiram can be an effective treatment for alcohol abuse because drinking alcohol after a dose makes the individual ill; the aversive consequences of drinking now override its reinforcing properties (e.g., Higgins, Budney, Bickel, Hughes, & Foerg, 1993). Because compliance with disulfiram regimens is low, however, it is not commonly used today as a treatment for alcohol abuse.

Contingency Management

The field of applied behavior analysis, also based on the tenets of operant psychology, developed in the late 1960s. Its central goal is to apply the principles discovered through basic behavioral research to real-world human behavior. Researchers taking this approach to behavior change explored a wide range of problems, including the treatment of alcoholism.

Basic research on drug discrimination has also been applied to humans. Lovibond and Caddy (1970) trained alcoholics to discriminate blood alcohol concentrations (BACs) from 0 to 0.08 percent by describing to them what each level should feel like. When the subjects estimated their BACs, Breathalyzer readings served as feedback. (Breath alcohol concentration, which a Breathalyzer measures, closely approximates BAC.) The experimenters attached shock electrodes to the face and neck of 31 volunteers, gave them free access to alcohol, and told them that they could drink with “impunity” as long as their BAC stayed below 0.065 percent. Participants whose BAC exceeded this limit received a shock.

Across phases of the experiment, the experimenters varied the frequency of shock-punished trials and the duration, intensity, and delay of shocks. They often encouraged family members to attend the conditioning sessions and instructed them to provide social reinforcement to the alcoholic for low levels of drinking. After several days of conditioning, the researchers encouraged participants to resume their everyday lives. Twenty-one subjects had a positive outcome, drinking in a controlled fashion and only rarely exceeding 0.07 percent BAC in normal settings. Three subjects improved considerably, exceeding 0.07 percent BAC only about once or twice a week. Four subjects showed only slight improvement, and the rest showed no change in drinking behavior. The authors reported that subjects did not develop aversive reactions to alcohol but did lose the desire to drink after a few glasses.

In another study, conducted in a specially equipped bar, 13 alcoholic volunteers learned to drink socially under a concurrent-schedule arrangement in which finger shocks followed behavior typical of an alcoholic, such as gulping large amounts of alcohol or drinking straight liquor (Mills, Sobell, & Schaefer, 1971). The experimenters did not punish behavior associated with social drinking, such as drinking weaker drinks or taking small sips. The type of drink ordered, the amount of alcohol consumed per drinking instance, and the progressive ordering of drinks determined the shock contingencies. Once shock was implemented, ordering and consuming straight drinks decreased to virtually zero, the number of mixed drinks ordered remained fairly constant, and the total number of drinks consumed decreased. Sipping increased, and gulping decreased. The study did not test whether social drinking generalized to or was maintained in other settings, such as a real bar, but it did show that alcoholics could modify their drinking behavior without becoming abstinent.

Many researchers abandoned shock studies decades ago in favor of procedures that use positive reinforcement for moderate drinking or abstinence, and positive reinforcement techniques are as effective in modifying drinking behavior as the aversive conditioning techniques described above. In one study (Bigelow, Cohen, Liebson, & Faillace, 1972), patients referred for alcoholism treatment from a city hospital's emergency room lived on a research ward run as a token economy and participated in a variety of experiments examining their alcohol consumption. On control days, alcohol consumption was not restricted. On treatment days, subjects chose between moderate drinking with access to an enriched environment (television, a pool table, interaction with other people, group therapy, reading materials, visitors, and a regular diet) and drinking more than 5 ounces of alcohol with access only to an impoverished environment (confinement alone to a hospital bedroom with pureed food). On 76 percent of the treatment days on which this choice was in effect, subjects chose to drink moderately and gain access to the enriched environment. They did, however, consume the maximum amount of alcohol allowed under the enriched contingency.

Miller (1975) sought to reduce alcohol consumption in a population with high rates of public drunkenness offenses, unstable housing, and unstable employment. Participants in the experimental group received goods and services such as housing, meals, employment, and donations, but lost access to them after any observed drunkenness or a Breathalyzer result indicating alcohol consumption. The control group had access to the same services without having to refrain from drinking. Miller reported that arrests decreased and employment or days worked increased. Blood alcohol levels were lower in the group with abstinence contingencies.

In another study, researchers used a behavioral contract to reduce drinking in one alcoholic, with the alcoholic’s spouse helping to enforce the contingencies (Miller & Hersen, 1972). During baseline, the man consumed about seven to eight drinks a day. The husband and wife agreed to a contract under which he could consume zero to three drinks a day without consequence, but if his drinking exceeded this level, he had to pay a $20 “fine” to his wife. The researchers also instructed the wife to refrain from negative verbal or nonverbal responses to her husband’s drinking, and she was to pay him the same $20 fine for any violation of that agreement. After about three weeks, the husband’s drinking consistently remained at an acceptable level, and it stayed there through a six-month follow-up. (Interestingly, the wife’s nagging also decreased.)

Although the drinking behavior of alcoholics can be modified with behavioral techniques, the general therapeutic community, most likely influenced by the early 20th-century temperance movement, regarded this proposition as absurd. Many people believe that alcoholics must abstain from alcohol completely and that their drinking can never be stabilized at moderate levels. So deeply ingrained is this belief in required abstinence that researchers have been slow to develop and popularize behavior modification techniques for training moderate drinking (Bigelow & Silverman, 1999; Marlatt & Witkiewitz, 2002). Fortunately, however, therapists who treated addiction to substances other than alcohol embraced the same nonabstinence methods that had proved useful in decreasing drinking.

One of the first descriptions of contingency management applied to the behavior of drug abusers did not directly reinforce drug abstinence; instead, it reinforced behaviors deemed important to therapeutic progress, such as getting out of bed before eight o’clock in the morning, attending meetings, performing work, and attending physical therapy (Melin & Gunnar, 1973). Drug abusers earned points for completing these activities; points moved them progressively from detoxification to rehabilitation phases and gave access to desired privileges such as a private bedroom, visitors, and short excursions away from the ward. All point-earning behaviors increased in frequency, and the researchers concluded that access to privileges served as a reinforcer for this population.

Contingency management has also been successful in treating heroin use among outpatients receiving methadone maintenance. Hall, James, Burmaster, and Polk (1977) devised contracts with six heroin addicts, each targeting a specific problem behavior. Reinforcers included take-home methadone (a long-acting opioid used to treat heroin dependence), tickets to events, food, bus tokens, lunches, time off probation, less frequent visits to probation officers, and access to special privileges. One subject increased his percentage of opiate-free urine samples (a marker of opiate abstinence) from 33 percent to 75 percent, an improvement that persisted up to six months after treatment. When rewarded for arriving at work on time, a truck driver increased his on-time arrival from 66 percent to 100 percent. One client habitually tried to avoid treatment by arriving at the methadone clinic at odd hours to “swindle” her methadone from a nurse not directly involved in her treatment. When rewarded for coming to the clinic during regular hours and receiving methadone from a specific staff member, her arrival during regular hours increased from 13 percent to 100 percent. Hall et al.’s (1977) study provided one of the first indications that contingency management could successfully promote compliance with substance abuse treatment goals among outpatients.

This early evidence of the utility of reinforcement procedures shaped the systematic development of modern contingency management. The specific contingencies vary somewhat across drugs of abuse, but some features are common to all treatments. Nearly all researchers use urinalysis to measure abstinence and deliver reinforcers for negative urine samples. The reinforcers themselves, however, vary with the population and the individual, the substance of abuse, and the resources available.

Urinalysis is the standard tool for detecting drug use because drug taking is often covert, and researchers cannot expect patients to report their own drug use reliably. Testing must also occur often enough to detect any instance of drug use. It need not occur every day for every drug, although daily testing may be necessary for cocaine, codeine, or heroin because a small amount of these drugs used on one day may be undetectable two days later. Conversely, with too short a testing interval, a researcher may record more than one positive result from a single instance of drug use; under these conditions, two to five days of abstinence may be necessary before a negative urine sample is obtained (Katz et al., 2002).

Drug abstinence is not the only goal in the treatment of substance abuse. Contingency management is often supplemented with counseling, work therapies, self-help groups, relapse prevention strategies, and medical care, and compliance with these program components is itself often placed under contingencies of reinforcement.

Experimenters use many different reinforcers to control behavior in these interventions, such as vouchers that can be exchanged for goods and services. Money, methadone, and services are also common reinforcers. Studies have repeatedly shown that money competes favorably with drugs as a reinforcer (e.g., DeGrandpre, Bickel, Higgins, & Hughes, 1994): as the value of the monetary reward increases, drug preference weakens. As the delay to monetary reinforcement increases, however, preference for drug self-administration becomes more likely (e.g., Bickel & Madden, 1999). These discrete-choice studies show that larger amounts of money have greater reinforcing value than smaller amounts, paralleling the reinforcer-magnitude effects found in earlier animal studies.

Money may be the most useful reinforcer for drug abstinence because its magnitude is not limited, it carries a low risk of satiation, and it is effective for most people. Using money has a potential drawback, however: it can be spent on future drug purchases (Silverman, Chutuape, Bigelow, & Stitzer, 1999). To avoid this problem, researchers often use vouchers instead.

Vouchers are exchangeable for many types of goods and services in most treatment programs. The value of a voucher often starts quite low, for example, about $2. Researchers then increase the value systematically with each successive negative urine sample or act of treatment compliance. This arrangement is useful because each successive reinforcer requires a longer period of continuous abstinence: the first voucher requires only a short abstinence period, whereas the next requires that period plus the time until the next urine test. As animal research has shown, greater reinforcer magnitudes maintain responding that requires more “work.” A response-cost contingency is also embedded in this progressive schedule. Individuals who use drugs lose not only the voucher for that test but also the difference between the escalated value of the next voucher and the original value to which it resets. Resetting the value of the reinforcer thus punishes drug taking. The progressive voucher system, then, is essentially a schedule of reinforcement for drug abstinence with a built-in response-cost contingency for drug use.
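The escalating-voucher arrangement with reset described above can be sketched in a few lines of Python. This is a minimal illustration, not a protocol from any of the cited studies: the $2 starting value echoes the example in the text, but the $1 increment per consecutive negative sample and the function name `voucher_earnings` are hypothetical choices made for the sketch.

```python
from typing import List


def voucher_earnings(negative_tests: List[bool],
                     start: float = 2.0,
                     step: float = 1.0) -> List[float]:
    """Voucher value earned at each urine test under an escalating schedule with reset.

    negative_tests[i] is True when test i is drug-free. The $2 start follows the
    example in the text; the $1 step is a hypothetical assumption.
    """
    earned = []
    value = start
    for negative in negative_tests:
        if negative:
            earned.append(value)  # abstinence is reinforced at the current value
            value += step         # the next voucher is worth more
        else:
            earned.append(0.0)    # drug use forfeits this test's voucher...
            value = start         # ...and resets the schedule (response cost)
    return earned


steady = voucher_earnings([True] * 5)
lapse = voucher_earnings([True, True, True, False, True])
print(steady, sum(steady))  # [2.0, 3.0, 4.0, 5.0, 6.0] 20.0
print(lapse, sum(lapse))    # [2.0, 3.0, 4.0, 0.0, 2.0] 11.0
```

Under these assumed values, the single positive test costs 9 dollars in total: the 5-dollar voucher forfeited at the fourth test plus the 4-dollar difference at the fifth test between the escalated value (6) and the reset value (2). That second component is the response-cost contingency built into the progressive schedule.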

Progressive reinforcement schedules can also maintain abstinence from cigarette smoking. For example, Roll, Higgins, and Badger (1996) gave one group of smokers a $3 voucher for the first smoking-abstinent breath sample, with increments of 50 cents for each additional negative sample, plus a $10 bonus for every third negative sample. A second group received $9.80 for each negative sample. A third group received amounts equal to those earned by a paired individual in the progressive group (termed “yoking”), except that these individuals did not need to be abstinent to receive the money. Both groups receiving contingent reinforcement showed more abstinence than the yoked control group, and the progressive group showed the most abstinence of the three.
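The arithmetic of the two contingent schedules in Roll, Higgins, and Badger (1996) can be compared with a short Python sketch. The dollar amounts come from the description above; the assumptions, which are ours rather than the study's, are that the samples form one unbroken run of negatives, that the $10 bonus falls on every third sample in that run, and that no reset occurs. Amounts are kept in integer cents to avoid floating-point rounding, and the function names are hypothetical.

```python
from typing import Tuple


def progressive_cents(k: int) -> int:
    """Voucher for the k-th consecutive negative sample (k >= 1), in cents."""
    value = 300 + 50 * (k - 1)  # $3.00 for the first negative, +$0.50 for each additional one
    if k % 3 == 0:
        value += 1000           # $10.00 bonus on every third negative sample
    return value


FIXED_CENTS = 980  # $9.80 for each negative sample in the fixed-value group


def cumulative_cents(n: int) -> Tuple[int, int]:
    """Total earnings (progressive, fixed) after n consecutive negative samples."""
    progressive = sum(progressive_cents(k) for k in range(1, n + 1))
    return progressive, FIXED_CENTS * n


for n in (3, 9, 15):
    prog, fixed = cumulative_cents(n)
    print(f"{n:2d} negatives: progressive ${prog / 100:.2f}, fixed ${fixed / 100:.2f}")
```

Under these assumptions, the progressive schedule pays less than the fixed schedule early in a run of abstinence ($20.50 versus $29.40 after three negatives) and first overtakes it at the 15th consecutive negative sample ($147.50 versus $147.00), so its larger payoffs depend on sustained abstinence.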

Contingency management studies apply the fundamental principles of operant psychology to substance abuse by treating drug taking as just one of many choice behaviors that have reinforcing value. As with other reinforcers, the reinforcing efficacy of drugs is not constant; a variety of factors influence it, including the consequences for abstinence and the availability of alternatives.

Summary

The field of behavioral pharmacology is more than 50 years old and has contributed immensely to our understanding of how drugs and behavior interact, especially in the area of substance abuse. Part of the reason for its success is the careful manner in which researchers characterize behavior-environment relations, studying them first in the laboratory and then applying them to socially relevant problems. Researchers are better able to understand complex drug-related behavior, such as drug use and abuse, when they consider it from the perspective of the three-term contingency. This framework allows researchers to characterize drugs in terms of their discriminative and reinforcing properties and their capacity to modify behavior. When scientists understand these behavioral mechanisms, the stage is set for effective treatments.

References:

- Bickel, W. K., & Madden, G. J. (1999). A comparison of measures of relative reinforcing efficacy and behavioral economics: Cigarettes and money in smokers. Behavioural Pharmacology, 10, 627-637.

- Bickel, W., & Vuchinich, R. E. (2000). Reframing health: Behavioral change with behavioral economics. Mahwah, NJ: Lawrence Erlbaum Associates.

- Bigelow, G., Cohen, M., Liebson, I., & Faillace, L. A. (1972). Abstinence or moderation? Choice by alcoholics. Behaviour Research and Therapy, 10, 209-214.

- Bigelow, G., & Silverman, K. (1999). Theoretical and empirical foundations of contingency management treatments for drug abuse. In S. T. Higgins & K. Silverman (Eds.), Motivating behavior change among illicit-drug abusers: Research on contingency management interventions (pp. 15-31). Washington, DC: American Psychological Association.

- Branch, M. N. (2006). How behavioral pharmacology informs behavioral science. Journal of the Experimental Analysis of Behavior, 85, 407-423.

- Campbell, U. C., Thompson, S. S., & Carroll, M. E. (1998). Acquisition of oral phencyclidine (PCP) self-administration in rhesus monkeys: Effects of dose and an alternative non-drug reinforcer. Psychopharmacology, 137, 132-138.

- Carroll, M. E. (1985). Concurrent phencyclidine and saccharin access: Presentation of an alternative reinforcer reduces drug intake. Journal of the Experimental Analysis of Behavior, 43, 131-144.

- DeGrandpre, R. J., Bickel, W. K., Higgins, S. T., & Hughes, J. R. (1994). A behavioral economic analysis of concurrently available money and cigarettes. Journal of the Experimental Analysis of Behavior, 61, 191-201.

- Deneau, G., Yanagita, T., & Seevers, M. H. (1969). Self-administration of psychoactive drugs by the monkey: A measure of psychological dependence. Psychopharmacologia, 16, 30-48.

- Dews, P. B. (1955). Studies on behavior I. Differential sensitivity to pentobarbital on pecking performance in pigeons depending on the schedule of reward. Journal of Pharmacology and Experimental Therapeutics, 113, 393-401.

- Ferster, C. B., & Skinner, B. F. (1957). Schedules of reinforcement. New York: Appleton-Century-Crofts.

- Griffiths, R. R., Wurster, R. M., & Brady, J. V. (1981). Choice between food and heroin: Effects of morphine, naloxone, and secobarbital. Journal of the Experimental Analysis of Behavior, 35, 335-351.

- Hall, S., James, L., Burmaster, S., & Polk, A. (1977). Contingency contracting as a therapeutic tool with methadone maintenance clients: Six single subject studies. Behaviour Research and Therapy, 15, 438-441.

- Headlee, C. P. C., Coppock, H. W., & Nichols, J. R. (1955). Apparatus and technique involved in a laboratory method of detecting the addictiveness of drugs. Journal of the American Pharmaceutical Association Scientific Edition, 44, 229-231.

- Herrnstein, R. J. (1961). Relative and absolute strength of response as a function of frequency of reinforcement. Journal of the Experimental Analysis of Behavior, 4, 267-272.

- Higgins, S. T., Budney, A. J., Bickel, W. K., Hughes, J. R., & Foerg, F. (1993). Disulfiram therapy in patients abusing cocaine and alcohol. American Journal of Psychiatry, 150, 675-676.

- Higgins, S. T., Heil, S. H., & Lussier, J. P. (2004). Clinical implications of reinforcement as a determinant of substance use disorders. Annual Review of Psychology, 55, 431-461.

- Higgins, S. T., & Silverman, K. (1999). Motivating behavior change among illicit-drug abusers: Research on contingency management interventions. Washington, DC: American Psychological Association.

- Iglauer, C., & Woods, J. H. (1974). Concurrent performances: Reinforcement by different doses of intravenous cocaine in rhesus monkeys. Journal of the Experimental Analysis of Behavior, 22, 179-196.

- Johnson, M. W., & Bickel, W. K. (2002). Within-subject comparison of real and hypothetical money rewards in delay discounting. Journal of the Experimental Analysis of Behavior, 77, 129-146.

- Katz, E. C., Robles-Sotelo, E., Correia, C. J., Silverman, K., Stitzer, M. L., & Bigelow, G. (2002). The brief abstinence test: Effects of continued incentive availability on cocaine abstinence. Experimental and Clinical Psychopharmacology, 10, 10-17.

- Lovibond, S. H., & Caddy, G. (1970). Discriminated aversive control in the moderation of alcoholics’ drinking behavior. Behavior Therapy, 1, 437-444.

- Marlatt, G. A., & Witkiewitz, K. (2002). Harm reduction approaches to alcohol use: health promotion, prevention, and treatment. Addictive Behaviors, 27, 867-886.

- Massey, B. W., McMillan, D. E., & Wessinger, W. D. (1992). Discriminative-stimulus control by morphine in the pigeon under a fixed-interval schedule of reinforcement. Behavioural Pharmacology, 3, 475-488.

- Melin, G. L., & Gunnar, K. (1973). A contingency management program on a drug-free unit for intravenous amphetamine addicts. Journal of Behavior Therapy & Experimental Psychiatry, 4, 331-337.

- Miller, P. M. (1975). A behavioral intervention program for chronic public drunkenness offenders. Archives of General Psychiatry, 32, 915-918.

- Miller, P. M., & Hersen, M. (1972). Quantitative changes in alcohol consumption as a function of electrical aversive conditioning. Journal of Clinical Psychology, 28, 590-593.

- Mills, K. C., Sobell, M. B., & Schaefer, H. H. (1971). Training social drinking as an alternative to abstinence for alcoholics. Behavior Therapy, 2, 18-27.

- Peele, S. (1992). Alcoholism, politics, and bureaucracy: The consensus against controlled-drinking therapy in America. Addictive Behaviors, 17, 49-61.

- Perry, J. L., Larson, E. B., German, J. P., Madden, G. J., & Carroll, M. E. (2005). Impulsivity (delay discounting) as a predictor of acquisition of IV cocaine self-administration in female rats. Psychopharmacology, 178, 193-201.

- Preston, K. L., Bigelow, G. E., Bickel, W. K., & Liebson, I. A. (1989). Drug discrimination in human postaddicts: Agonist-antagonist opioids. Journal of Pharmacology and Experimental Therapeutics, 250, 184-196.

- Preston, K. L., Silverman, K., Umbricht, A., DeJesus, A., Montoya, I. D., & Schuster, C. R. (1999). Improvement in naltrexone treatment compliance with contingency management. Drug and Alcohol Dependence, 54, 127-135.

- Roll, J. M., Higgins, S. T., & Badger, G. J. (1996). An experimental comparison of three different schedules of reinforcement of drug abstinence using cigarette smoking as an exemplar. Journal of Applied Behavior Analysis, 29, 495-504.

- Silverman, K., Chutuape, M. A., Bigelow, G. E., & Stitzer, M. L. (1999). Voucher-based reinforcement of cocaine abstinence in treatment-resistant methadone patients: effects of reinforcement magnitude. Psychopharmacology, 146, 128-138.

- Spragg, S. D. S. (1940). Morphine addiction in chimpanzees. Comparative Psychology Monographs, 115, 1-132.

- Sullivan, M. A., Comer, S. D., & Nunes, E. V. (2006). Pharmacology and clinical use of naltrexone. In E. Strain & M. Stitzer (Eds.), The treatment of opioid dependence (pp. 295-322). Baltimore: Johns Hopkins University Press.

- Terrace, H. S. (1966). Errorless discrimination learning in the pigeon: Effects of chlorpromazine and imipramine. Science, 140, 318-319.

- Urbain, C., Poling, J., Millam, J. D., & Thompson, T. (1978). d-amphetamine and fixed interval performance: Effects of operant history. Journal of the Experimental Analysis of Behavior, 29, 385-392.

- Weeks, J. R. (1962). Experimental morphine addiction: Method for automatic intravenous injections in unrestrained rats. Science, 138, 143-144.

- Young, A. M., & Herling, S. (1986). Drugs as reinforcers: Studies in laboratory animals. In S. R. Goldberg & I. P. Stolerman (Eds.), Behavioral analysis of drug dependence (pp. 9-67). Orlando, FL: Academic Press.
