Imagine that you and a friend are taking a walk through a wooded area. As you walk, you become aware of the many stimuli in your surrounding environment: the sound of old leaves shuffling under your feet and birds singing high in the trees, the sight of branches that hang in front of your path, the varied textures of tree bark when you lean on the trees for support as you go down a steep incline, the sharp pain in your finger as you move a briar vine and accidentally grasp a thorn, the earthy smell of damp ground as you walk along a stream, and the taste of tangy wild blackberries you find growing in a sunny break in the trees. These and many other perceptions of your surroundings will flow continuously through your awareness, rapidly and effortlessly, as you interact with the environment. An even greater number of sensory neural responses to your environment will occur without your ever becoming consciously aware of them. The same will be true for your friend, although what each of you becomes aware of may differ based on your individual interests and expectations during the walk.

By perceiving stimuli in the environment, humans and other species increase their likelihood of survival. Acquiring edible food, avoiding dangerous situations, and reproducing all become more likely if an organism can quickly perceive aspects of its current situation. Perceptions of our surroundings usually happen so rapidly and seem so complete that most people give them little thought. However, the multiple, simultaneous chains of events that occur in order for us to perceive our surroundings are extremely complex and still not completely understood. Further, what we perceive is not a perfect representation of the environment; it is simply one that is good enough in most ways most of the time for survival. Curiosity about perceptual processes has motivated philosophical discourse and scientific research for hundreds of years.

In addition to being explored simply to understand how it occurs (a pure, or basic, research approach), perception is also studied for many practical, or applied, reasons. Perceptual processes can be damaged, or can fail to develop properly, in many ways. The goal of many current researchers is to understand these processes and eventually develop ways to help individuals with perceptual problems. Another application of perception research involves human factors: the use of knowledge about how humans function (physically, perceptually, cognitively) to design safer and more efficient interactions between humans and objects such as machines or computers.

This research paper presents an overview of many aspects of perception. Historically and currently, how does the study of perception fit with other areas of psychology, especially those of sensation and cognition? What are some basic principles of perception? What are some of the challenges for and theories of perception? What have special populations of participants allowed us to learn about perception?

How is perception distinguished from sensation (see Chapter 19)? There are mixed views on the issue. One simple distinction is that sensation is the neural response to a stimulus, and perception is our conscious awareness, organization, or recognition of that stimulus. Following this line of distinction, cognition is then the active, conscious manipulation of perceptions to encode them in memory, apply labels, and plan response actions. For much of the twentieth century, such distinctions allowed researchers to compartmentalize the topics of their research areas. Researchers who strictly followed such an approach often did not attempt to link their research questions and results to those from a different area.

Although in many ways this compartmentalization of topics made sense during several decades of the twentieth century, these areas of research have not always been separated, and most current researchers no longer draw hard lines between them. For example, some very early and influential perceptual researchers, such as Helmholtz, studied the anatomy of the senses, measured neural responses (of easily accessible touch neurons), studied conscious perceptions, and attempted to understand how these were all integrated.

So, why would distinctions between sensation, perception, and cognition make sense? First, when trying to understand any complex set of processes, a good strategy is to break them into basic components and study the components individually before investigating how they all interact. Many decades of sensation, perception, and cognition research built a foundation of basic knowledge within each of these areas.

Second, until relatively recently, the available methodological techniques limited the questions that researchers studied, so different techniques and different types of participants were used in the different areas. Traditional techniques for measuring the neural processes of sensation and the anatomical connections between neurons were invasive, so the majority of basic sensation research was performed on animals. (See Chapter 15 for a more complete description of neural recording methods.) Although animals can be studied invasively (within many ethical constraints, of course), they cannot describe their perceptions to researchers. Thus, when humans participated in perception research, different methodological techniques were necessary (e.g., Fechner’s psychophysical methods, forced-choice procedures, and multidimensional scaling; see Chapter 20 for more details). As a result, much of the basic sensation and perception research used different populations of participants and different procedures. Linking the two sets of data is often difficult because the differences between human and animal sensory mechanisms and brains increase as the focus moves from lower to higher levels of processing. Hochberg (1978) illustrates the distinction between perception and sensation research: he covers classic perception research thoroughly but mentions possible underlying physiological mechanisms only minimally. Where he does mention them, he explicitly notes that they are not well understood and that it is not clear that they actually contribute to the perceptual experiences measured in humans.

As a more concrete example of the traditional distinctions between sensation and perception research, imagine that you want to pick up this book so you can read it. Several things would have to happen for you to process its location in depth so that you could reach out and grab it accurately and efficiently. There are two types of depth cues you might use: monocular cues (e.g., occlusion, linear perspective, familiar size, motion parallax, and accommodation) or binocular cues (e.g., disparity and convergence). The binocular cue of disparity is essentially a measure of the differences in the relative locations of items in the two eyes’ views, and it is used for the process of stereopsis. Stereopsis is what allows people to see 3-D in the popular Magic Eye pictures or in random-dot stereograms (first created by Bela Julesz, 1961).
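To make the geometry of disparity more concrete, the following sketch computes the relative disparity between a fixated object (the book) and a second, hypothetical object at a slightly different depth. It is a simplified illustration under stated assumptions, not a model taken from the studies cited here: both objects are assumed to lie straight ahead of the viewer, the eyes are assumed to converge symmetrically, and the interocular distance of 0.063 m is an assumed typical adult value. The function names are hypothetical. The sketch compares the exact difference in vergence angles with the common small-angle approximation, disparity ≈ I(d_f − d_o)/(d_f · d_o), where I is the interocular distance, d_f the fixation distance, and d_o the other object's distance.

```python
import math

# Assumed typical adult interocular distance, in meters (illustrative value only).
INTEROCULAR_M = 0.063
ARCMIN_PER_RADIAN = 180.0 * 60.0 / math.pi


def vergence_angle(distance_m: float, interocular_m: float = INTEROCULAR_M) -> float:
    """Angle (radians) between the two lines of sight when both eyes fixate a
    point straight ahead at distance_m (symmetric-convergence assumption)."""
    return 2.0 * math.atan(interocular_m / (2.0 * distance_m))


def relative_disparity(fixated_m: float, other_m: float,
                       interocular_m: float = INTEROCULAR_M) -> float:
    """Relative disparity (radians) of an object at other_m while fixating an
    object at fixated_m. Positive: nearer than fixation (crossed disparity).
    Negative: farther than fixation (uncrossed). Zero: same depth as fixation."""
    return vergence_angle(other_m, interocular_m) - vergence_angle(fixated_m, interocular_m)


def small_angle_disparity(fixated_m: float, other_m: float,
                          interocular_m: float = INTEROCULAR_M) -> float:
    """Small-angle approximation of the same quantity: I * (d_f - d_o) / (d_f * d_o)."""
    return interocular_m * (fixated_m - other_m) / (fixated_m * other_m)


if __name__ == "__main__":
    book_m = 0.50    # hypothetical: the fixated book is 50 cm away
    other_m = 0.45   # hypothetical: a second object 5 cm nearer
    exact = relative_disparity(book_m, other_m)
    approx = small_angle_disparity(book_m, other_m)
    print(f"exact disparity:       {exact * ARCMIN_PER_RADIAN:.1f} arcmin")
    print(f"small-angle estimate:  {approx * ARCMIN_PER_RADIAN:.1f} arcmin")
```

With these hypothetical distances the two estimates agree closely, and the sign convention mirrors the distinction between objects in front of and behind the point of fixation.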

Of interest to sensation researchers, as you focused on the book to determine its depth, specialized cells in your primary visual cortex would modify their neural signals in response to the book’s disparity. Some cells would be best activated when there is zero disparity (the object of interest is at the same depth as the point of focus), whereas others would prefer differing amounts of disparity either in front of or behind the point of fixation. Such signals have been studied in animals (e.g., Barlow, Blakemore, & Pettigrew, 1967) by using microelectrode recordings as a stimulus was systematically varied in depth.

Taking a perceptual rather than a sensation research focus, as you look at the book you might also become aware of whether it is nearer or farther than some other object, say your notebook, which is also on the table. If the two objects were relatively close to each other in depth, you would be able to fuse the images from your two eyes into a single 3-D image of both objects simultaneously. If they were too far apart in depth, only one object’s two images could be fused into a single image; the other object would be diplopic and appear double. (If you pay close attention, you can notice diplopia of nonfused objects; often our brains simply suppress the double images so that we don’t notice them.) Ogle (1950) and Julesz (1971) systematically studied such relations by having humans report their subjective perceptions of fusion and diplopia as characteristics of the stimulus were systematically manipulated. Both wrote comprehensive books summarizing binocular vision, but neither attempted to link physiological and psychophysical data. This omission is quite reasonable for Ogle, given that binocular neurons had not yet been discovered. However, even though such neurons had been described by the time Julesz wrote, it was quite accepted that he mentioned the physiological findings only briefly and cautiously and focused his writings on the perceptual aspects of binocular vision.

These examples of relatively pure sensation-oriented and perception-oriented research illustrate how important fundamental knowledge could be gained without requiring direct links between sensation and perception. There are a few counterexamples, where the level of processing was basic enough that even many decades ago researchers were able to fairly definitively link animal sensation data to human perceptual data. The most compelling of these examples is the almost perfect match between a human’s measured sensitivity to different wavelengths of light under scotopic conditions (dim lighting when only rod receptors respond to light) and the light absorption of rhodopsin (the pigment in rods that responds to photons of light) across the different wavelengths. In most cases, however, differences in anatomy and how and what was measured made it difficult to precisely link the animal and human data. Despite these difficulties, as researchers gained understanding of the fundamentals of sensation and perception, and as technology allowed us to broaden the range of testable questions, more and more researchers have actively tried to understand perceptual processes in terms of underlying neural mechanisms. Clearer links between sensation and perception data have developed in several ways.

Because animals are unable to directly communicate their perceptions of a stimulus, researchers have limited ability to use animals to study how sensations (neural responses) are directly linked to perceptions, especially human perceptions. However, although they cannot talk, animals can behaviorally indicate some of their perceptions, at least for some tasks. For example, researchers can train some animals to make a behavioral response to indicate their perception of nearer versus farther objects. Then, as the researchers manipulate the location of the target object relative to other objects, they can not only measure the animal’s neural responses but also record its behavioral responses and then attempt to link the two. Until the 1970s, such linking was done in two steps because animals had to be completely sedated for accurate neural recordings to be made. Newer techniques, however, allow microelectrode recordings in alert, behaving animals, so the two types of information can be measured simultaneously. Further, in many cases humans can be “trained” to make the same behavioral responses as the animals. Researchers can then compare the animal and human responses and make more accurate inferences about the neural responses in humans based on measures made in animals.

Another major approach that researchers now use to infer the neural mechanisms of human perception (and of other higher-level processes such as reading, memory, and emotions) is noninvasive recording of brain activity. Several noninvasive brain activity technologies have been developed (fMRI, PET, MEG, etc.; see Chapter 16). When using these technologies, participants perform a task while different measures of their brain activity are recorded, depending on the technology (oxygen use, glucose use, and the magnetic fields produced by electrical activity, respectively). The areas of the brain used to process a stimulus or perform a task show relatively increased use of oxygen and glucose, and they also show changes in the level and timing of their electrical activity. Both relatively basic and more complex perceptual processes have been studied. For example, these technologies have been used to study the neuronal basis of human contrast sensitivity (Boynton, Demb, Glover, & Heeger, 1999), to localize relatively distinct areas of the inferotemporal (IT) cortex that are used to process faces versus other objects (Kanwisher, McDermott, & Chun, 1997), and to localize different areas of the brain used to process different aspects of music perception (Levitin, 2006).

These noninvasive technologies all have relatively poor spatial resolution compared with microelectrode recordings: at best, researchers can localize a type of activity only to within roughly a millimeter of brain tissue, whereas invasive microelectrode recordings attribute the measured activity to a single, specific neuron. Thus, these two approaches (human brain activity measures and microelectrode recordings in animals) are still often difficult to link precisely. However, technology continuously brings animal (invasive) and human (noninvasive) methods closer together. For example, advances in microelectrode recording technology allow researchers to record from arrays of neurons rather than from a single neuron at a time. By recording from many neurons, researchers can better understand how the activity in one neuron influences other neurons, and they can build a better picture of how the system functions as a whole. Multiple-electrode recordings are also beginning to be used with humans in special cases, for example, individuals who are undergoing intracranial monitoring to define their epileptogenic region (Ulbert, Halgren, Heit, & Karmos, 2001). Meanwhile, the spatial resolution of the noninvasive technologies is also improving. Eventually, researchers may be able to explain perception far more completely in terms of the activity of neural mechanisms, and the simple distinction between sensation and perception will become even less clear.

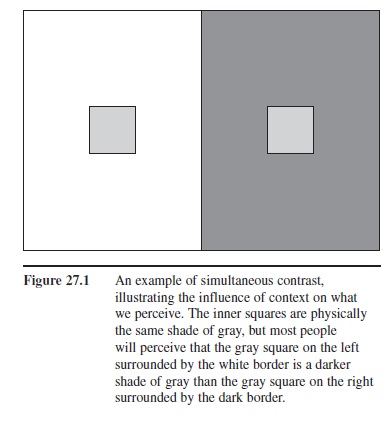

Figure 27.1 An example of simultaneous contrast, illustrating the influence of context on what we perceive. The inner squares are physically the same shade of gray, but most people will perceive that the gray square on the left surrounded by the white border is a darker shade of gray than the gray square on the right surrounded by the dark border.

The division between perception and cognition is also blurring. For example, additional factors would influence your reaching for a book, and they illustrate higher-level perceptual processes that interact with memory, which has traditionally been a research topic for cognitive psychologists. Although perceptual psychologists have long recognized that prior experience with stimuli can influence perceptions, they traditionally have not studied how memories are formed, stored, or recalled. For example, based on past experience, you would have some memory of the size and weight of the book you want to pick up. When you determine its location in depth, your memory of these characteristics will influence your judgment. Many perception researchers have investigated such interactions between stimulus-based characteristics and experience-based memories, but they have traditionally focused on the perceptual aspects rather than the cognitive ones. Related to the book example above, Ittelson and Kilpatrick (1952, as cited in Hochberg, 1978) manipulated the physical size of familiar playing cards and showed that participants mislocated them in depth (as long as no other depth or object-comparison cues were available). The famous Ames room manipulates depth cues and relies on participants’ previous experience with normal rooms (four walls that intersect at right angles). In the Ames room the walls are not perpendicular, but the walls, windows, and other objects in the room have been constructed in perspective so that the room appears “normal” when viewed through a monocular peephole. Viewers are fooled into perceiving well-known objects (e.g., a friend or family member) as growing or shrinking as they move through the room. As soon as the room is perceived correctly (by using a large viewing slot so that both eyes can see the room and correctly interpret the unusual configuration of the walls and objects), the illusion is destroyed.

Just as new technologies have begun to better link sensation and perception, they are also allowing more links with topics traditionally placed within the cognitive domain of research. Processes such as reading (typically considered a language-related process and within the domain of cognition) rely on perceptual input as well as language knowledge. Visual perception researchers have studied how factors such as the contrast, size, polarity, background texture, and spatial frequency of letters influence their readability (e.g., Legge, Rubin, & Luebker, 1987; Scharff & Ahumada, 2002; Solomon & Pelli, 1994). Others have focused on understanding dyslexia and concluded that subtle changes in the magnocellular and parvocellular visual-processing streams may contribute to dyslexia (e.g., Demb, Boynton, & Heeger, 1998). Greater understanding of memory processes (also traditionally within the cognitive domain) has indicated that memories for different characteristics of stimuli (visual, auditory, olfactory, etc.) rely on the brain areas originally used to process them. For example, if you visualize your room from memory, the observed pattern of brain activity will involve the visual-processing areas and closely resemble the pattern that would be observed if you were actually viewing your room (Kosslyn et al., 1993). Similarly, when individuals imagine hearing a song, the pattern of brain activity is strikingly similar to the pattern observed when they actually hear it (Levitin, 2006).

The blurring of the distinctions between sensation, perception, and cognition challenges current researchers, because they must now learn at least the basics of all these traditional areas. Although some fundamental questions remain to be answered within each separate domain, answering what many consider the more interesting, complex questions will require understanding interactive processes across multiple domains.

Some Basic Principles Of Perception

Although the process of perception usually appears simple, complete, and accurate, it is not. This is true for each of the senses, as the following principles and examples illustrate. The principles are listed as if they were independent, but in reality they are interrelated.

- Regardless of the sense, sensory receptors convert only a restricted range of the possible stimuli into neural signals. Thus, we do not perceive many stimuli or stimulus characteristics that exist in the environment. Further, because the design of the sensory receptors (and later perceptual systems) varies across species, different species perceive the environment differently. For example, humans can perceive only electromagnetic energy with wavelengths between roughly 400 nm and 700 nm (the range we refer to as “light”). We cannot perceive infrared or ultraviolet light, although some other species can (e.g., some reptiles [infrared] and many birds, bees, and fish [ultraviolet]). Different species can perceive different ranges of auditory frequencies, with larger animals typically perceiving lower ranges of frequency than smaller animals. Thus, humans cannot hear “dog whistles,” but dogs (and other small animals) can. We can build technologies that allow us to perceive things that our systems don’t naturally process. For example, a radio allows us to listen to our favorite song because the song has been encoded into radio waves and transmitted to our location. But even though the signal is physically present in the room, we can’t hear it until we turn on the radio. It’s important to note, however, that these technologies allow us to perceive these other stimuli only by converting them into stimuli that we can perceive naturally.

- The stimuli that are transformed into neural signals are modified by later neural processes. Therefore, our perceptions of the stimuli that are encoded are not exactly accurate representations of the physical stimuli. We often enjoy such departures from reality in demonstrations using illusions. The shifts from reality can be due to genetically predisposed neural wiring (e.g., center-surround receptive fields that enhance the perception of edges for both vision and touch, or the motion detectors that allow us to perceive apparent movement, such as that seen in the phi phenomenon or in movies, which are a series of still frames). They can also be due to neural wiring configurations that have been modified by experience (e.g., color perceptions can be shifted for up to two weeks after participants wear color filters for several days; Neitz, Carroll, & Yamauchi, 2002).

- What we perceive is highly dependent upon the surrounding context. The physical stimulus doesn’t change, just our perception of it. For example, after being in a hot tub, warm water feels cool, but after soaking an injured ankle in ice water, warm water feels hot. The color of a shirt looks different depending upon the color of the jacket surrounding it. Similarly, the inner squares in Figure 27.1 appear to be different shades of gray, but really they are identical. The taste of orange juice shifts dramatically (and unpleasantly) after brushing one’s teeth. Many visual illusions rely on context manipulation, and by studying them we can better understand how stimuli interact and how our perceptual systems process information.

- Perception is more likely for changing stimuli. For example, we tend to notice the sounds from a baby monitor only when the regular breathing is replaced by a cough or snort; we will be continually annoyed by the loose thread on our sleeve but not really notice the constant pressure of our watch; and we will finally notice a bird in a tree when it flies to a new branch. In all these cases, we could have heard, felt, or seen the unchanging stimulus if we had paid attention to it. Information about each of them was entering our sensory systems, but we didn’t perceive it. Why? Sensory systems continuously process huge volumes of information. Presumably, we are prone to perceive the stimuli that are most useful for choosing good responses and actions that promote survival. When a stimulus first appears, it will tend to be perceived because it represents a change from the previous configuration of the environment; it could be a threat or something that should be acknowledged, such as a friend. However, once it has been noticed, if it does not change, the system reduces its neural response to that stimulus (the process of neural adaptation). This is a reasonable conservation of energy and metabolic resources, and it allows us to focus our perceptions on items of greater interest.

- Perception can be altered by attention. The principle regarding the influence of change alludes to such selective attention mechanisms. Attention can be drawn to stimuli by exogenous influences (such as the changes in stimuli noted previously, or any difference in color, loudness, pressure, etc., between stimuli), or attention can be placed on a stimulus because of endogenous factors (such as an individual’s personal interests or expectations). When attention is focused on a specific stimulus, the neural response to that stimulus increases even though nothing else about the stimulus has changed (e.g., Moran & Desimone, 1985). Two types of perceptual demonstrations illustrate the influence of attention on perception particularly well.

Change blindness demonstrations test our ability to perceive specific stimulus changes across different views or perspectives of a scene. The crucial factor is that the different perspectives are not temporally contiguous; there is some short break in our viewing of the scene (which can last less than a tenth of a second), so we have to hold the previous perspective in memory in order to compare it with the following one. This frequently happens in real life, such as when a large truck passes us and blocks our view of a scene, or when we turn our heads to look at something else and then turn back. Rensink (2002) systematically studied change blindness by using two views of a scene that alternated on a computer screen, with a blank screen interspersed between them. There was one obvious difference between the two views (e.g., the color of an object, the deletion of an object, or a shift in an object’s placement). He found that unless participants were directly attending to the changing object, they had great difficulty perceiving the change. Filmmakers often rely on change blindness; across cuts there might be obvious changes in the objects in a room or in an actor’s clothing, but unless you happen to be paying attention to that particular item, you are unlikely ever to notice the change.

Inattentional blindness demonstrations illustrate that we are often not aware of obvious, changing stimuli in the center of our field of view. For example, Simons and Chabris (1999) showed participants film clips of two teams playing basketball and instructed them to attend to the ball and count how many times one of the teams passed it. The basketball clip was combined with a second clip of a person in a gorilla suit walking across the court. While attending to the ball, many participants did not report seeing the gorilla walking through the scene. You can view examples of this clip, as well as other examples of change blindness and inattentional blindness, on the Visual Cognition Lab of the University of Illinois Web site (http://viscog.beckman.uiuc.edu/djs_lab/demos.html).

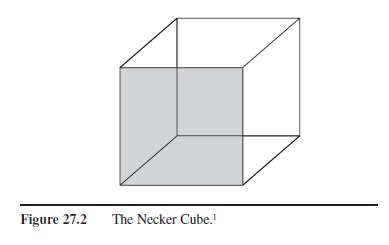

Figure 27.2 The Necker Cube.

This stimulus illustrates qualitative ambiguity because a viewer’s interpretation of it can alternate between two different plausible configurations. In this case, both configurations are that of a cube, but the gray-shaded panel can be either the front-most surface or the rear-most surface of the cube.

- Perception is the result of both bottom-up and top-down processes. Essentially all the information individuals acquire about the world comes in through their senses (often referred to as bottom-up processing). This type of processing is what most people think about when they reflect on what they perceive: “I perceive my dog because my eyes see my dog” or “I feel the smooth texture of the glass because my finger is moving smoothly across it.” Indeed, unless you are dreaming or hallucinating (or are part of The Matrix), your perceptions are dominated by external stimuli. However, the incoming sensory information is not the only influence on what you perceive. Of the millions of sensory signals activated by environmental stimuli, only a small subset (those attended to) will enter conscious perception. Of those, only a few are processed deeply enough to permanently change the brain (i.e., be encoded as a memory). These previously encoded experiences can influence your expectations and motivations and, in turn, influence the bottom-up sensory signals and the likelihood that they will achieve conscious perception (often referred to as top-down processing). An increasing body of research has demonstrated top-down influences on the neural responses of relatively low-level sensory and perceptual processing areas of the brain (e.g., Delorme, Rousselet, Mace, & Fabre-Thorpe, 2004).

There are innumerable examples of top-down influences. If we are walking in the woods and I tell you that there are a lot of spiders around, you might then perceive any soft touch on your skin as a spider, at least for a second. Once you attend to it carefully and gather more bottom-up information that rules out the spider interpretation, you will correctly perceive that it was just a leaf on a nearby bush. If I had not told you about spiders in the first place, you might not have even perceived the soft touch.

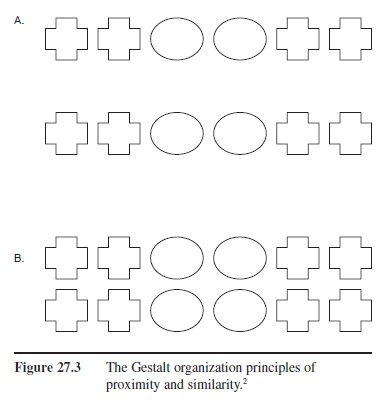

Figure 27.3 The Gestalt organization principles of proximity and similarity.

In (A), most people tend to describe the stimuli as two rows made up of the shapes: plus, plus, oval, oval, plus, plus. The spacing between the two rows causes the shapes to group by row rather than by shape. In (B), the two rows are positioned so that the vertical space between the shapes equals the horizontal space between them. In this case, most people tend to describe the stimuli by grouping them by shape, i.e., a set of four pluses, then a set of four ovals, and then another set of four pluses.

This example shows how your perception can be fooled by top-down expectations. However, top-down influences are very useful. They can prime you to perceive something so that you can react to it more quickly. They can also help you perceive incomplete stimuli or interpret ambiguous stimuli, both of which happen in daily life (e.g., top-down processing helps us find objects in cluttered drawers or interpret a friend’s handwriting). Top-down processes often lead two people to have different interpretations of the same event.

The above principles may imply that our perceptual systems are not very good: there are things we cannot perceive, what we do perceive may not be exactly accurate, and our perceptions may change based on context, attention, or other top-down processes. However, our perceptual systems are for the most part amazingly accurate and have evolved to maximize processing efficiency and promote our survival. If we perceived everything, we would be overwhelmed with information, most of which is not necessary for the task at hand. In the natural environment, the mismatches to reality (e.g., enhanced edges) promote survival more than they threaten it, and the ability to use top-down as well as bottom-up processes allows for more flexible responses as a function of experience.
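The edge enhancement mentioned above, produced by the center-surround receptive fields described among the basic principles, is often explained with a simplified lateral-inhibition model. The following sketch is such a toy model, not a physiological simulation: each hypothetical receptor's response is its own input minus a fraction k of the average input to its immediate neighbors. The function name, the inhibition strength k = 0.2, and the one-receptor neighborhood are arbitrary illustrative assumptions. Applied to a step from a dark region to a light region, the model produces a dip just on the dark side of the edge and a peak just on the light side, exaggerating the edge in the way the Mach band illusion is usually described.

```python
from typing import List, Sequence


def lateral_inhibition(inputs: Sequence[float], k: float = 0.2, radius: int = 1) -> List[float]:
    """Toy center-surround model (hypothetical): each unit's response is its own
    input minus k times the mean input of its neighbors within `radius` positions."""
    n = len(inputs)
    responses = []
    for i in range(n):
        # Average only the neighbors that exist, so units at the ends of the
        # array are not treated as if missing neighbors had zero input.
        neighbors = [inputs[j]
                     for j in range(i - radius, i + radius + 1)
                     if j != i and 0 <= j < n]
        surround = sum(neighbors) / len(neighbors) if neighbors else 0.0
        responses.append(inputs[i] - k * surround)
    return responses


if __name__ == "__main__":
    # Hypothetical luminance profile: a dark region (10) next to a light region (20).
    step_edge = [10, 10, 10, 10, 20, 20, 20, 20]
    for position, (lum, resp) in enumerate(zip(step_edge, lateral_inhibition(step_edge))):
        print(f"position {position}: input {lum:>4} -> response {resp:5.1f}")
```

Running it shows the response just left of the edge falling below the rest of the dark region and the response just right of the edge rising above the rest of the light region, even though the input within each region is constant.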

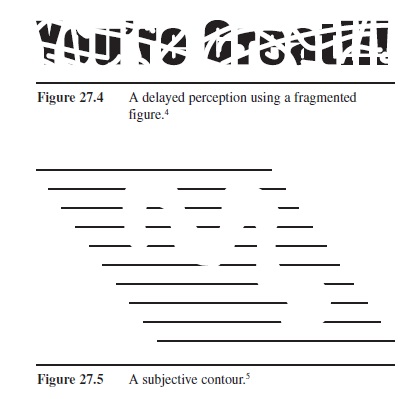

Figure 27.4 A delayed perception using a fragmented figure.

After several seconds of viewing, most people are able to decipher the phrase. (Give it a try. If you’re stuck, read the following backwards: !!taerG er’uoY) After perceiving the “completed” figure, viewers will typically be able to perceive it more rapidly the next time it is viewed, even if a large amount of time has elapsed.

Figure 27.5 A subjective contour.

5 – Your perceptual system creates the impression of the white, worm-shaped figure that appears to be lying across the background of black horizontal lines. In reality, there is no continuous shape, only black lines that have strategic parts of them missing.

In fact, our perceptual systems are able to process complex environmental surroundings more quickly and accurately than any computer. The stimulus information that activates our sensory receptors is often ambiguous and incomplete; however, our systems are still able to rapidly produce richly detailed and largely accurate perceptions. Do we learn to do this as we experience interactions with the environment, or are perceptual abilities controlled by innate mechanisms? The next subsection discusses some of the theories related to this question, along with some challenges a perceptual system must overcome when making the translation from stimulus energy to perception.

Challenges For Perceptual Systems And Theories Of Perception

There are many theories of perception, several of which have roots in age-old philosophical questions. (See Chapters 1 and 2 for additional summaries of some of the historical and philosophical influences in psychology.)

Regardless of the theory of perception, however, there are several challenges that any perceptual system or theory must handle in order to be considered complete. As already mentioned, our systems tend, for the most part, to provide us quickly with amazingly accurate perceptions. Thus, researchers did not appreciate many of the challenges outlined below until they attempted to study perceptual processes or to create artificial perceptual systems. The following list of challenges is drawn from Irvin Rock's (1983) book, The Logic of Perception. These challenges and examples tend to focus on vision, but they also hold true for the other senses (see, e.g., Levitin, 2006, for multiple examples using music and other auditory stimuli).

- Ambiguity and Preference. Rock recognized two types of ambiguity, dimensional and qualitative. Dimensional ambiguity refers to the fact that a single external stimulus (distal stimulus) can lead to multiple retinal images (proximal stimuli), depending on where the distal stimulus is placed in our 3-D environment. For example, the retinal image of a business card could be a perfect rectangle (front view), a trapezoid (an angled view in which one side of the card is closer to the eye, so that its retinal image is longer than that of the far side), or a line (edge view). However, we will almost always perceive the business card as a perfect rectangle. (If the viewing perspective is an uncommon one, the object may occasionally not be recognized accurately.) Qualitative ambiguity refers to the fact that two different distal stimuli can lead to the same proximal stimulus. In both cases we do not usually have any problem perceiving the correct distal stimulus because, somehow, we prefer a single, usually correct interpretation of the ambiguous proximal stimulus. There are exceptions to preference, but these generally occur for artificial stimuli such as the Necker Cube (see Figure 27.2) or other 2-D reversible figures.

- Constancy and Veridicality. Even though proximal stimuli vary widely across different environmental conditions (lighting, placement, etc.), we tend to perceive a distal object as having color constancy, shape constancy, size constancy, and so on. In other words, we perceive the “true” distal stimulus, not one that varies as conditions change. Thus, somehow, perception becomes correlated with the distal rather than the proximal stimulus, even though the only direct information the perceptual system receives from the distal stimulus is the changing proximal stimulus.

- Contextual Effects. As already explained, perceptions of distal stimuli are affected by neighboring stimuli, both in space and in time.

- Organization. Our perceptions tend to be of discrete objects; however, the information in a proximal stimulus is simply a pattern of varying amounts of electromagnetic energy at certain wavelengths, so it often does not clearly delineate object borders. For example, some objects may be partially occluded, and some "edges" may really be shadows or patterns on the surface of an object. With respect to organization, Rock highlights the Gestalt principles of organization, such as figure/ground, proximity, similarity, and continuation. Figure 27.3 (A and B) shows how proximity and similarity can influence the perception of a group of objects.

- Enrichment and Completion. As the Gestaltists also recognized, sometimes what we perceive is more than what is actually available in the proximal stimulus. As their famous saying goes, "The whole is greater than the sum of its parts." This can happen because distal objects are partially occluded by other objects. Further, once an object is recognized, the perception becomes more than just the visual image of the object because attributes such as functionality are included. Rock suggests that these completion and enrichment abilities are due to prior experience with the object being perceived. A second class of enrichment/completion occurs when we perceive something that is not present at all, such as subjective contours (see Figure 27.5) and apparent motion. Experience is not necessary to perceive this second class of enrichment/completion.

- Delayed Perceptions. In most cases, perception occurs rapidly; however, in some special cases, a final perception can occur many seconds (or longer) after processing of the proximal stimulus has begun. Examples include stereopsis of random-dot stereograms (or the Magic-Eye pictures) and the processing of some fragmented pictures (see Figure 27.4). Usually, once a specific example of either type of stimulus is perceived, subsequent perceptions of that same stimulus are much more rapid due to top-down processing based on memories of the earlier experience.

- Perceptual Interdependences. Perceptions can change depending upon the sequence of stimuli, with a previous stimulus altering the perception of a subsequent stimulus. This is basically a temporal context effect, and it illustrates that it is not just information included in the proximal stimulus that influences how that stimulus is perceived.

In addition to the challenges outlined by Rock (1983), there seems to be at least one additional challenge that is receiving growing research attention: integration, or binding. How do we end up with cohesive perceptions of stimuli? Even within a single sense, different aspects of a stimulus are processed by different populations of neurons. Further, many stimuli activate more than one sense. For example, as we eat, we experience the texture, the taste, and the smell of the food. When we vacuum a room, we see and hear the vacuum cleaner, and we can hear the sound change as we see and feel it move across different surfaces. How are those distinct perceptions united?

Now that the challenges for perception and perceptual theories have been outlined, what are some of the theories of perception that have been proposed? Regarding the question "How do our perceptual abilities come to exist?" there have been two major theoretical perspectives, nativism and empiricism. Nativism proposes that perceptual abilities are innate rather than learned. Empiricism proposes that perceptual abilities are learned through meaningful interactions with the environment. As summarized by Gordon and Slater (1998), these two perspectives are rooted in the writings of several famous philosophers: John Locke and George Berkeley (supporting empiricist viewpoints), and Plato, Descartes, and Immanuel Kant (supporting nativist viewpoints). There is evidence supporting both perspectives. The nativist perspective is supported both by ethological studies (studies of animals in their natural environments) and by some observations of newborn humans. For example, newly hatched herring gulls will peck the red spot on their parent's beak in order to obtain food (Tinbergen & Perdeck, 1950, as cited in Gordon & Slater, 1998), and newborn humans will preferentially turn their heads toward their mothers' breast odor (Porter & Winberg, 1999). In contrast, the empiricist perspective is supported by observations that restricted sensory experience in young individuals alters later perception. Kittens exposed to only vertical or horizontal orientations during the first several weeks after their eyes opened were impaired in their ability to perceive the nonexperienced orientation. If young human children have crossed eyes or a weak eye, their stereoscopic vision will not develop normally (Moseley, Neufeld, & Fielder, 1998). Given the evidence for both perspectives, only a relatively small group of researchers have exclusively promoted one over the other. (See Gordon and Slater for a summary of both the philosophical background and the early works in the development of the nativist and empiricist perspectives.)

Under each of these broad perspectives, many theories of perception exist (not necessarily exclusively nativist or empiricist). For example, Rock (1983) outlined two categories of perceptual theories: stimulus theories and constructivist theories. Stimulus theories are bottom-up and require a near-perfect correlation between the distal and proximal stimuli. Rock pointed out that this is not possible for low-level features of a stimulus (due to ambiguity, etc.), but that higher-level features could show a better correlation. He used J. J. Gibson's (1972) Theory of Direct Perception as an example of such a high-level-feature stimulus theory. Gordon and Slater (1998) categorize Gibson's theory as an example of nativism because Gibson assumed that invariants existed in the natural, richly complex environment and that additional, constructive processes were not necessary for perception. According to Rock, although perception is obviously driven largely by the stimulus, pure stimulus theories should not be considered complete because they do not take into account the influence of experience, attention, and other top-down processes.

Constructivist theories suggest that the perceptual systems act upon the incoming bottom-up signal and alter the ultimate perception. Rock (1983) distinguishes two types of constructivist theories: the Spontaneous Interaction Theory and cognitive theories. According to the Spontaneous Interaction Theory, as the stimulus is processed in the system there are interactions among components of the stimulus, between multiple stimuli, or between stimuli and more central representations. Rock credits the Gestalt psychologists with the development of the Spontaneous Interaction Theory (although they did not call it that). The spontaneous interactions are assumed to be part of bottom-up neural processes (although the specific neural processes have largely remained unspecified). Because the Gestalt theory does not include many known influences of prior experience and memory, Rock also considered it incomplete. Gordon and Slater (1998) point out that the Gestalt psychologist Wolfgang Köhler used the Minimum Principle from physics to explain the Gestalt principles of organization; in turn, he supported an extreme nativist position (whereas other Gestalt psychologists, such as Koffka and Wertheimer, did not).

A cognitive theory, according to Rock (1983), incorporates rules, memories, and schemata in order to arrive at an interpretation of the perceived stimulus, although these interpretive processes are nonconscious. Rock believed that this complex constructive process explains why perception is sometimes delayed, and that the cognitive theory also handles the other challenges for perception that he outlined. For example, constancy (the perception that objects have the same shape, size, and color under differing perspectives and conditions) requires access to memory, which is not acknowledged in either the stimulus or the spontaneous interaction theories.

Given its reliance on memory and prior experience, Rock's (1983) cognitive theory may seem to fit with an empiricist perspective, but he personally believed that there were meaningful distinctions between a cognitive theory and the empiricist perspective, at least as described by Helmholtz. According to Rock, prior experience alone does not account for the use of logic, which can allow for inferences not directly based on prior experience. Similar to Rock's cognitive theory is Richard Gregory's (1980, as cited in Noe & Thompson, 2002) theory that perceptions are hypotheses, which proposes that perceptions are the result of a scientific inference process based on the interaction between incoming sensory signals and prior knowledge. However, according to Gordon and Slater (1998), Gregory clearly supported the empiricist perspective.

Given the evidence for both innate and learned aspects of perception, it is not surprising that more recent researchers have proposed theories that acknowledge both. For example, Gordon and Slater (1998) summarize Fodor's modularity approach, which includes perceptual input systems (largely innate in their processing) and more central systems that rely on learned experiences. Gordon and Slater also summarize Karmiloff-Smith's approach, which moves beyond simple modularity but still recognizes both innately predisposed perceptual processes and perceptual processes that rely on experience.

The above overview of theories of perception further illustrates the complexity of perception: if it were a simple process, there would be little debate about how it comes to exist. The benefit of having a theory about any scientific topic is that it provides a structure for framing new hypotheses and, in turn, promotes new research studies. With multiple theories, the same problem or question is approached from different perspectives, often leading to a broader understanding.

Using Special Populations To Study Perception

The previous discussion of participants in perception studies distinguishes some relative benefits of using animals (fewer ethical constraints, which allows invasive measures) and humans (they have a human brain and can directly report their perceptual experiences). However, there are special populations of humans that have made important contributions to our understanding of perceptual processes. Persons with damage to perceptual processing areas of the brain and young humans (infancy through early childhood) are two of these special populations. Each group presents unique challenges with respect to its use, but each has also provided valuable insight into perceptual processes that could not have been ethically obtained using normal adult humans.

The study of brain-damaged individuals parallels the use of lesion work in animals, but humans can give subjective reports of the changes in their perceptual abilities and can perform high-level tasks that are difficult or impossible for animals. The major limitation of using brain-damaged humans is that the area and extent of damage are not precisely controlled. In most cases, strokes or head trauma will damage multiple brain areas, or will not completely damage an area of interest. Thus, it is difficult to determine precisely which damaged brain structures account for the loss of specific perceptual (or other) abilities. However, by comparing several individuals with similar patterns of damage and by taking into account the results of precise lesion work in animals, researchers can draw some reasonably firm conclusions.

Of special interest to perception researchers are studies of individuals with agnosia (the inability to remember or recognize some selective aspect of perception). Generally, an agnosia is limited to the processing of information from one sense, so the other senses can still process that aspect of perception. For example, a person with prosopagnosia (inability to process faces) can still recognize another individual from the person's voice or by feeling the person's face. Additional documented visual agnosias include color agnosia (cerebral achromatopsia), motion agnosia (cerebral akinetopsia), and different forms of object agnosia. Some individuals can recognize objects but are not able to localize them in space or interact with them meaningfully using visual information. Agnosias affecting the sense of touch include astereognosia (inability to recognize objects by touch) and asomatognosia (failure to recognize parts of one's own body). Careful study of such individuals has supported a modular perspective of perceptual processing, in that damage to specific populations or groups of neurons leads to specific deficits in perception.

The study of young humans is challenging in that, like animals, they cannot directly (through language) communicate their perceptual experiences, and they are limited in the range of tasks they are able to perform. However, through technology (e.g., noninvasive event-related potentials) and the development of many special techniques (e.g., preferential looking, the conditioned head turn, and the high-amplitude sucking procedure), major advances have been made in our understanding of perceptual development.

The use of human infant participants has been key to examining nativism versus empiricism. Aslin's (1981, as cited in Gordon & Slater, 1998) model of perceptual development summarizes many perceptual development studies and provides support for both philosophical perspectives. This model proposes that, at birth, a visual ability may be in one of three states: undeveloped (it can then be induced or remain undeveloped, depending on postnatal experience), partially developed (it can be facilitated, maintained, or lost, depending on postnatal experience), or fully developed (it can be maintained or lost, depending on postnatal experience).

Perceptual development research has also advanced our understanding of, and ability to help, individuals with perceptual development disorders. As with brain-damage research, perceptual development researchers have used animal as well as human participants because some controlled manipulations of development would not be ethical to perform on humans. For example, some human babies are born with strabismus (eye misalignment), which can lead to amblyopia (the loss of visual acuity in an ophthalmologically normal eye). The condition can be induced in animals, allowing investigation of its impact on brain development (measured using invasive techniques) and allowing researchers to test possible treatments before using them with humans. However, of more direct interest to parents of such babies are studies of other strabismic babies before and after treatment (measuring the impact of treatment on observable behaviors and through other noninvasive measures).

Summary

Perception is a complex process, and although researchers have produced volumes of work in the endeavor to understand it, there is still much to be learned. Historically, research on visual perception has outweighed research on the other senses, due both to the greater accessibility of the sensory and neural structures of vision and to humans' tendency to place greater value on that sense. However, auditory research is also well advanced, and research on the other senses is progressing rapidly. Perception research is also interfacing with areas such as artificial intelligence and the study of consciousness. Greater understanding of the processes of perception will continue to affect daily living through the development of devices to aid individuals with perceptual problems (e.g., computer chips to replace damaged brain areas), the development of new technology (e.g., artificial noses to detect bombs or illegal drugs; multisensory virtual reality), and the design of everyday items (e.g., more readable highway signs and Web pages).

References:

- Barlow, H. B., Blakemore, C., & Pettigrew, J. D. (1967). The neural mechanism of binocular depth discrimination. Journal of Physiology, 193, 327-342.

- Boynton, G. M., Demb, J. B., Glover, G. H., & Heeger, D. J. (1999). Neuronal basis of contrast discrimination. Vision Research, 39, 257-269.

- Delorme, A., Rousselet, G. A., Macé, M., & Fabre-Thorpe, M. (2004). Interaction of top-down and bottom-up processing in the fast visual analysis of natural scenes. Cognitive Brain Research, 19, 103-113.

- Demb, J. B., Boynton, G. M., & Heeger, D. J. (1998). Functional magnetic resonance imaging of early visual pathways in dyslexia. The Journal of Neuroscience, 18(17), 6939-6951.

- Enns, J. (2004). The thinking eye, the seeing brain: Explorations in visual cognition. New York: W. W. Norton & Company.

- Gibson, J. J. (2002). A theory of direct visual perception. In A. Noe & E. Thompson (Eds.), Vision and mind: Selected readings in the philosophy of perception (pp. 77-89). Cambridge, MA: MIT Press.

- Gordon, I., & Slater, A. (1998). Nativism and empiricism: The history of two ideas. In A. Slater (Ed.), Perceptual development: Visual, auditory, and speech perception in infancy (pp. 73-103). East Sussex, UK: Psychology Press Ltd.

- Hochberg, J. (1978). Perception (2nd ed.). Englewood Cliffs, NJ: Prentice Hall.

- Humphreys, G. W. (Ed.). (1999). Case studies in the neuropsychology of vision. East Sussex, UK: Psychology Press Ltd.

- Julesz, B. (1961). Binocular depth perception of computer generated patterns. Bell System Technical Journal, 39, 1125-1162.

- Julesz, B. (1971). Foundations of cyclopean perception. Chicago: The University of Chicago Press.

- Kanwisher, N., McDermott, J., & Chun, M. (1997). The fusiform face area: A module in human extrastriate cortex specialized for face perception. The Journal of Neuroscience, 17(11), 4302-4311.

- Kosslyn, S. M., Alpert, N. M., Thompson, W. L., Maljkovic, V., Weise, S. B., Chabris, C. F., et al. (1993). Visual mental imagery activates topographically organized visual cortex: PET investigations. Journal of Cognitive Neuroscience, 5, 263-287.

- Legge, G., Rubin, G., & Luebker, A. (1987). Psychophysics of reading. V. The role of contrast in normal vision. Vision Research, 27, 1165-1177.

- Levitin, D. (2006). This is your brain on music: The science of a human obsession. New York: Dutton.

- Moran, J., & Desimone, R. (1985). Selective attention gates visual processing in the extrastriate cortex. Science, 229, 782-784.

- Moseley, M. J., Neufeld, M., & Fielder, A. R. (1998). Abnormal visual development. In A. Slater (Ed.), Perceptual development: Visual, auditory, and speech perception in infancy (pp. 51-65). East Sussex, UK: Psychology Press Ltd.

- Neitz, J., Carroll, J., & Yamauchi, Y. (2002). Color perception is mediated by a plastic neural mechanism that is adjustable in adults. Neuron, 35, 783-792.

- Noe, A., & Thompson, E. (Eds.). (2002). Vision and mind: Selected readings in the philosophy of perception ("Perceptions as hypotheses," pp. 111-133). Cambridge, MA: MIT Press.

- Ogle, K. (1950). Researches in binocular vision. Philadelphia: W. B. Saunders Company.

- Porter, R., & Winberg, J. (1999). Unique salience of maternal breast odors for newborn infants. Neuroscience and Biobehavioral Reviews, 23, 439-449.

- Rensink, R. A. (2002). Change detection. Annual Review of Psychology, 53, 245-277.

- Rock, I. (1983). The logic of perception. Cambridge, MA: MIT Press.

- Scharff, L. F. V., & Ahumada, A. J., Jr. (2002). Predicting the readability of transparent text [Electronic version]. Journal of Vision, 2(9), 653-666.

- Simons, D. J., & Chabris, C. F. (1999). Gorillas in our midst: Sustained inattentional blindness for dynamic events. Perception, 28, 1059-1074.

- Solomon, J. A., & Pelli, D. G. (1994). The visual filter mediating letter identification. Nature, 369, 395-397.

- Ulbert, I., Halgren, E., Heit, G., & Karmos, G. (2001). Multiple microelectrode-recording system for human intracortical applications. Journal of Neuroscience Methods, 106, 69-79.
