Evidence-Based Policy In Crime And Justice

Overview

Evidence-based policy in crime and justice is defined here as a conscientious approach to intentionally use research to make decisions about programs, practices, and larger scale policies. Evidence-based policies, by and large, are those found to be effective in prior rigorous studies to reduce crime or improve justice. This research paper first summarizes the rationale for an evidence-based approach and the different types of evidence needed to respond to different policy questions.

Exactly how much influence evidence should have on policy is then considered, and key mechanisms in the approach are illuminated. After providing a brisk history of evidence-based policy, the article concludes with some important by-products and limitations to the approach.

Introduction

In recent years, the term “evidence-based” has become the catch phrase to describe the intentional use of research evidence to make policy and practice decisions. The cornerstone of evidence-based policy would be a conscientious attempt by government and other decision-makers to adopt policies, practices, and programs (hereafter, policies) that have been shown to be effective in prior evaluation studies, usually rigorous randomized controlled trials or high-quality quasi-experiments. There are a few who would argue that policies are “evidence-based” if they have sound theoretical and conceptual footing based on prior research, even if the actual policy has not been previously tested. A commitment to evidence-based policy would be demonstrated, among other things, by decision-makers determining what prior research indicates about their current policies and whether those policies should be continued or revised, and what prior evidence exists on any new policies they are considering adopting.

Why Evidence-Based Policy In Crime And Justice?

Why has evidence-based policy become “all the rage” (Tilley and Laycock 2000), in the USA and elsewhere? The pursuit of evidence-based policy has several drivers. First, policies may have a range of effects, from strong positive impact (helping the situation) to no effect (basically a zero impact in the setting) to a large negative effect (hurting the situation). Policy makers who wish to maximize the possibility of having positive impacts with the future policies they implement may do so by adopting policies shown to be effective in prior research.

Second, governments are under pressure to make choices and commitments while simultaneously dealing with shrinking resources. Dollars spent and resources expended toward ineffective strategies are costly and hard to recoup. Allocating those resources to policies that, at least, have been demonstrably effective somewhere else is seen as a “safer bet.”

Third, evidence is viewed as a more rational input for making decisions about policies than factors such as the special case or anecdote, ideology, politics, pressures from special interests, and tradition. Policies implemented on the basis of such input are sometimes criticized for not being based on rational consideration of the problem and potential solutions.

Finally, it is important to recognize that some policy makers and decision-makers do indeed want to make improvements in their venue and collaborate with others to help generate the evidence on what works and what does not. Indeed, some of these individuals are recognized annually through awards, made by George Mason University’s Center for Evidence-Based Crime Policy, for instance, to police chiefs and others who have contributed notably to improvement through the generation and use of evidence.

What Evidence Should Answer What Question?

Evidence-based policy in crime and justice invites attention to the kinds of dependable evidence that exists, or can be generated, so as to inform decisions at the policy level. Five major evaluation questions invite efforts to generate dependable evidence.

The first is “What is the nature and severity of the problem? And what is the evidence?” No consideration of crime and justice policy can proceed without a good understanding of where the problems are. Sherman (1998), in his seminal paper for the Police Foundation, argued that evidence-based policing would come to depend on large-scale monitoring databases that would help to identify empirically the problems requiring law enforcement intervention. In recent years, such dependable evidence has been embodied in information sources such as CompStat, police administrative data (often used to identify trouble spots in a jurisdiction, known as hot spots), and other sources in local jurisdictions. At the national level, answers have depended on sources such as the National Crime Victimization Survey (NCVS) and Federal Bureau of Investigation (FBI) police-captured crime reports. There are many standards for these kinds of data. The most critical and best articulated concern the statistical quality of the sample, the data’s reliability currently and over time, and the data’s validity in capturing what the researchers and policy or practice partners intend to measure.

The second set of questions falls under the general umbrella of etiology or causation, such as “Where did the problem come from?” and “What is the evidence?” There are many approaches to identifying potential causes of crime or other problems related to justice. However, longitudinal cohort studies are often recommended as a powerful form of evidence to answer questions about the etiology of criminal behavior. This is because such studies usually include repeated measurements on individuals over substantial time periods. For example, longitudinal studies that include measurements on individuals from a very young age to adulthood can better identify factors that preceded the start or onset of delinquency or crime than cross-sectional studies, which include only one measurement at a single point in time.

The third set of questions regarding evidence-based crime and justice policy is “What programs are deployed to address the problem, and how well are they deployed, and what is the evidence?” Before implementing new policies and programs to address a problem, it is useful to inventory what is currently being done about it. These data can be elusive, but funding agencies, organizations providing services, and schools or communities receiving services may be sources for learning what is going on. Evidence standards for such data are not as well thought out, but would likely include how comprehensive and reliable the data are, including how current practice was sampled. Some organizations will conduct “policy scans” to identify what is going on; this strategy may be simple, as in documenting state laws for sentencing sex offenders, or it may be more complex, as in documenting violence prevention strategies employed by schools, police, and community groups in a city. In any case, the quality of the policy or program’s implementation is usually a crucial consideration, whether the activity is ongoing or new and whether the program is tested in a controlled trial or not.

A fourth set of questions has to do with “What works and could work better?” Further, what is the evidence? This is often the crux of research contributions to evidence-based policy. As mentioned earlier, the initial policy idea may seem to be a good one, but it may go belly up after implementation. There may be unforeseen negative or backfire effects. Evaluations provide the evidence on answering questions about whether a policy has worked or not. But such impact evaluations are not all “created equal,” and some types of studies have higher internal validity, i.e., they do a better job of convincing the skeptic that reasons for the outcome other than the program have been satisfactorily controlled.

In the context of this fourth set of questions, the randomized controlled trial is viewed by most researchers as providing the least equivocal evidence on what works. The aim of the randomized trial is to generate fair and unbiased evidence about whether one or another approach works better. The key theme is fair comparison. Randomized controlled trials, including place-based ones, have been mounted since the 1960s on hot spot policing, mandatory arrest for domestic violence, Scared Straight programs, and other interventions.
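The fair-comparison logic of random assignment can be illustrated with a short sketch. This is not drawn from any particular trial: the unit labels, the `randomize` and `difference_in_means` helpers, and the equal split into two groups are all assumptions made for illustration, and a real place-based trial would typically add blocking and a formal significance test.

```python
import random
from statistics import mean


def randomize(units, seed=0):
    """Randomly split units into a treatment group and a control group.

    A seeded generator makes the assignment reproducible, so it can be audited.
    With an odd number of units, the control group receives the extra unit.
    """
    rng = random.Random(seed)
    shuffled = list(units)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def difference_in_means(outcomes, treatment, control):
    """Estimated effect: mean outcome under treatment minus mean under control."""
    treated_mean = mean(outcomes[u] for u in treatment)
    control_mean = mean(outcomes[u] for u in control)
    return treated_mean - control_mean


# Hypothetical example: eight hot spots with made-up labels.
hot_spots = [f"spot_{i}" for i in range(8)]
treatment, control = randomize(hot_spots, seed=42)
```

Because chance alone decides which places receive the intervention, systematic differences between the two groups other than the intervention itself are unlikely, which is what makes the comparison of outcomes fair.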

Because policies in crime and justice are often administered at the federal or state level, they may be difficult to evaluate through randomized experimental design. Another class of statistical designs for impact evaluations that is viewed by some researchers as convincing (and as having high internal validity) is the quasi-experiment. These study designs do not use randomization to assign entities in the study to conditions. Instead, they use matching or other techniques to assess the impact of policies so as to approximate the results of a randomized controlled trial.
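As a rough illustration of matching, the sketch below pairs each unit that received a policy with the most similar unit that did not, using a single pre-policy covariate. The jurisdiction labels, the covariate (prior-year crime counts), and the outcome figures are invented for the example, and nearest-neighbor matching on one variable is only one simple option; applied studies usually match on many covariates or on a propensity score.

```python
from statistics import mean


def match_nearest(treated, comparison, covariate):
    """Pair each treated unit with the closest unused comparison unit.

    Closeness is the absolute difference on one covariate. Matching is done
    without replacement, so each comparison unit is used at most once.
    """
    available = list(comparison)
    pairs = []
    for t in treated:
        if not available:
            break
        best = min(available, key=lambda c: abs(covariate[c] - covariate[t]))
        pairs.append((t, best))
        available.remove(best)
    return pairs


def matched_effect(pairs, outcome):
    """Average outcome difference (treated minus comparison) across matched pairs."""
    return mean(outcome[t] - outcome[c] for t, c in pairs)


# Hypothetical data: prior-year crime counts (covariate) and post-policy counts (outcome).
prior_crime = {"A": 50, "B": 20, "X": 48, "Y": 22, "Z": 90}
post_crime = {"A": 40, "B": 15, "X": 46, "Y": 21, "Z": 88}

pairs = match_nearest(["A", "B"], ["X", "Y", "Z"], prior_crime)
effect = matched_effect(pairs, post_crime)
```

Unlike random assignment, matching can only balance the characteristics that were measured, which is one reason some researchers remain cautious about quasi-experimental estimates.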

The final set of questions is “What is the cost effectiveness of alternatives? And what is the evidence?” Once there is some demonstrable evidence on the effectiveness of a policy, it is also important to capture data on the costs of the policy and potential alternatives. This can be a very important consideration for policymakers, particularly if two alternatives are likely to achieve similar effects; the cheaper alternative may be the best option. To date, explicit standards for cost data are not well articulated or agreed upon. Cost-benefit analyses are demanding and often require researchers to make assumptions that are difficult to sustain and to estimate costs for phenomena such as “psychic harm”; their results are typically expressed as a return on investment, e.g., every $1.00 spent under the policy yields $1.50 in benefits. Cost-effectiveness analyses are generally easier to generate as they estimate costs per unit of improvement for a program. Data such as these permit researchers to compare economic data across interventions, thereby increasing the policy relevance of the research.
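The arithmetic behind these two kinds of economic summary is simple, and a small sketch may help keep them apart. The function names and all dollar figures below are hypothetical; in practice the hard part is estimating the inputs, not computing the ratios.

```python
def cost_effectiveness(total_cost, units_of_improvement):
    """Cost per unit of improvement, e.g., dollars per offense prevented."""
    if units_of_improvement <= 0:
        raise ValueError("units_of_improvement must be positive")
    return total_cost / units_of_improvement


def benefit_cost_ratio(total_benefit, total_cost):
    """Monetized benefits returned per dollar spent."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return total_benefit / total_cost


# Hypothetical programs: cost in dollars and offenses prevented.
program_a = cost_effectiveness(100_000, 50)   # dollars per offense prevented
program_b = cost_effectiveness(90_000, 30)

# Hypothetical monetized benefits for program A.
ratio_a = benefit_cost_ratio(150_000, 100_000)
```

With these made-up numbers, program B has the lower total cost but program A costs less per offense prevented, which is the kind of comparison across interventions that cost data make possible.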

How Strong A Connection Between Evidence And Policy?

In some sense, evidence-based policy is simply a new label for a goal to which applied researchers have long aspired: a greater role for science in decision-making. Substantial research has been done, for instance, on whether, and why or why not, dependable scientific evidence gets used in policy decision-making. The themes in this “knowledge utilization” literature are complex but indicate that the uses of research to make definitive decisions about policy, often referred to as “instrumental use,” are not common. Carol Weiss, a leading scholar on the uses of research, discussed a different role for evidence, which she calls “conceptual use.” Evidence may not be used to make clear-cut decisions about policies, for instance. But over time, in a context which she calls “the circuitry of enlightenment,” the research results provide new generalizations, ideas, or concepts that are useful for making better sense of the policy scene (Weiss et al. 2005).

The term evidence-based policy, however, indicates a stronger role for research and evaluation than conceptual use. Many writers in the evidence-based arena argue for an evidence-informed approach in which research and evaluation would, at the very least, “have a seat at the table” with other input criteria before a decision is made. Some writers have argued that decision-making should go beyond mere consideration of research (or being informed by studies) to actively using it in making choices about policies. In recent years, some have articulated a different role, in which policy makers implement a decision about a program and then seek evidence to post hoc “support” their decision. This has been referred to, with appropriate sarcasm, as policy-based evidence.

The Mechanisms For Evidence-Based Policy

Regardless of the exact nature of the connection, the current emphasis on evidence-based policy has created concern about the causal chain between research and evaluation evidence and the decisions and choices that are made about policy and practice in crime and justice. Enhancing the likelihood that the evidence will be used presents the challenges considered next.

First, the potential user of evidence must know of the existence of evidence. Evidence producers often assure that this happens by identifying potential users and developing a system that gets the information to them. Many organizations have been using passive mechanisms, such as having potential users self-subscribe to email newsletters and making specialized websites available. Active dissemination campaigns take time and money to develop properly even if one can identify the relevant target populations and the most appropriate vehicle for transmission of information to them. For example, the University of Maryland Report to the Congress on Crime Prevention (Sherman et al. 1997) was subsequently sent with summary and cover letter by the Jerry Lee Foundation to 500 leading US justice policymakers.

Another link in the evidence-based policy chain concerns the fact that the mere receipt of evidence does not assure understanding. How researchers communicate the evidence is one aspect of this. Another aspect is the ability of the intended users to understand the evidence they receive. For example, legislators and legislative staff vary in their ability to understand what a randomized controlled trial is and why it is important. Lum et al. (2011) have generated an Evidence-Based Policing Matrix that portrays, in brief prose, simple numbers, and graphs, the evidence for what works with what target populations and to what extent. New publications such as Translational Criminology aim to ensure that potential users can easily understand the evidence from dependable research. The US Department of Justice Office of Justice Programs has initiated a web-based effort with similar aims at http://crimesolutions.gov.

A major consideration is the organizational or political constraints on the use of evidence. The potential user may well be aware of the evidence and understand it (including its limitations), but there may be serious internal and external constraints on its use. For example, the use of rotating shifts among New York City Police Department (NYPD) officers was the subject of an evaluation conducted by the Vera Institute in the 1980s (Cosgrove and McElroy 1986). One set of precincts retained the rotating shift (where officers were rotated every few weeks to different shift hours, such as 8–4, 4–12, 12–8), and the treatment precincts were provided with steady shifts. Although the experiment found that rotating shifts harmed officer health, increased sick days, and had other negative effects, the NYPD kept rotating shifts because of its traditional concerns about the potential for officer corruption (i.e., rotating shifts make it harder to get to know the “wrong people”). Although some actors in the NYPD asked for the study and welcomed the news, the more powerful in the bureaucracy were reluctant to make any changes.

Finally, there must be incentives to use dependable scientific evidence. Not everyone will want to come to the party or have incentives to do so. Weiss and colleagues (2008) have identified one major incentive, however, that has surfaced since the late 1990s in the United States, the “mandated use” of evidence. Mandated use means that grant funding is tied to the use of programs or policies from an accepted list or registry of evidence that identifies evidence-based programs. Jurisdictions sometimes will receive money to implement programs selected from the list. Weiss et al. (2008) raised concerns about this approach, particularly as the criteria for making the lists can also be questioned and can be politicized.

Often, there are disincentives to evidence-based policy, as decision-makers may worry about the consequences of adopting evidence-based programs if it means admitting that the ones they had implemented earlier were the wrong choices. For instance, high-quality evaluations may be eschewed by risk-averse executives who do not want to have to answer questions about their having earlier adopted “bad policy.” Donald Campbell (Campbell 1969), one of the seminal writers on the evidence-based approach to policy decision-making, argued that policy makers should not be punished for using evidence when it showed their decision was wrong. Instead, they should be applauded – even rewarded – for following an approach of implementing, rigorously testing, and then modifying policy in light of this evidence.

A Brisk History Of Evidence-Based Policy

Researchers have long focused on and written about the relationship between scientific research evidence and policy, although they did not specifically use the phrase “evidence-based.” Indeed, in the seventeenth century, John Graunt, who produced the first serious statistical compendium of policy-related data for England, declared that one of his motives for doing so was “good, certain, and easy government.” More recently, Donald T. Campbell (1969), for whom the international Campbell Collaboration is named, called for the US and other modern governments to adopt an experimental approach to developing and testing public policy:

The United States and other modern nations should be ready for an experimental approach to social reform, an approach in which we try out new programs designed to cure specific social problems, in which we learn whether or not these programs are effective, and in which we retain, imitate, modify, or discard them on the basis of apparent effectiveness on the multiple imperfect criteria available. (p. 409)

Oakley (1998) called the period of the 1960s–1970s in the United States the “golden age of evaluation” because, for many government programs, rigorous experiments and quasi-experiments were influential in considerations about policy choices. As Oakley (1998) lamented, however, rigorous studies produced a number of null results, and the critics’ general response was that there was something inherent in experimentation that prevented it from detecting the positive impacts that such interventions were undoubtedly having.

Although randomized experiments and quantitative systematic reviews of such evidence, called meta-analyses, have become common approaches to evidence generation since the 1960s, the more modern view of “evidence-based” policy was formulated in medicine and in the United Kingdom. A government researcher named Archie Cochrane argued that many healthcare practices had already been shown to be ineffective in clinical trials but were still being used to the detriment of patients. Conversely, some medical procedures had been demonstrably effective in such rigorous studies but were being ignored in light of tradition, authority, education, clinical experience, and anecdote. Later, a group of researchers led by Iain Chalmers summarized the results of controlled trials in the area of childbirth and pregnancy, so as to identify what worked or did not based on rigorous studies. The success of that effort led Chalmers to begin working with other researchers to develop an international organization that would summarize trials in all other areas of health care. This was named the Cochrane Collaboration in honor of the man who inspired it (www.cochrane.org). Thus, an apparatus for summarizing research and getting that evidence into the hands of medical practitioners was born.

The early work summarizing the results of childbirth and pregnancy trials in Oxford and the success of the nascent Cochrane Collaboration led some to more closely connect prior evidence and current decision-making. A Canadian medical researcher, David Sackett, and his colleagues are often credited with articulating the first modern use of the term “evidence-based.” Sackett and others (1996) called evidence-based medicine:

...the conscientious, explicit and judicious use of current best evidence in making decisions about the care of the individual patient. It means integrating individual clinical expertise with the best available external clinical evidence from systematic research. (p. 71)

Since the early 1990s, the use of the term evidence-based has grown appreciably. In crime and justice research, Sherman’s (1998) evidence-based policing report invoked the phrase probably for the first time. Sherman argued that large-scale police databases would serve as information to identify problems in need of innovation (new policies), and randomized experiments would be conducted to test the impact of the innovation on the problem. Police departments would be learning organizations that would refine their policies based on evidence from these RCTs.

The close coupling of evidence-based policy and systematic reviews began in the late 1990s. During that time, Chalmers approached the authors and others about establishing an analog to the Cochrane Collaboration that would prepare, update, and disseminate high-quality reviews of research in other areas outside of health care, such as crime and justice, education, and social welfare. These early discussions led to the official inauguration of the international Campbell Collaboration (www.campbellcollaboration.org) in January 2000. The Campbell Crime and Justice Group has overseen the development of systematic reviews relevant to crime policy and has organized annual conferences funded by the Jerry Lee Foundation to disseminate the results of these reviews to federal decision-makers and others on Capitol Hill. Collections of these reviews have also been published by notable journals including the Annals of the American Academy of Political and Social Science and the Journal of Experimental Criminology.

The advent of the Campbell Collaboration (C2) coincided with other major, cross-field evidence-based policy efforts. The UK Economic and Social Research Council (ESRC) funded several centers (called nodes) on various aspects of the interplay between research and decision-making, and a new journal called Evidence & Policy was created, published by the Policy Press. A US-based group called the Coalition for Evidence-Based Policy (http://coalition4evidence.org/wordpress/) was created to advocate for federal government decisions to be closely tied to the results of rigorous evaluations, and it has had considerable influence in getting legislation to mandate randomized controlled trials as the design of choice to evaluate new policy initiatives (Wallace 2011). This has been mirrored in the UK by the creation of the Alliance for Evidence.

Evidence-based policy has now become a popular term throughout criminal justice, demonstrated by the creation of the Center for Evidence-Based Crime Policy at George Mason University in Virginia, USA (http://gemini.gmu.edu/cebcp/), directed by David Weisburd, and two new book series, on Evidence-based Crime Prevention and Translational Criminology, respectively, started by Springer Press.

Some By-Products Of The Evidence-Based Policy Movement

Regardless of the long-standing interest in criminal justice and other fields on generating and using research in government decision-making, the more recent emphasis on evidence-based policy has led to a number of important by-products. For one, it has led to even more discussion and debate over the nature and quality of evidence that should influence policy decisions. This is seen most convincingly in the area of determining what evidence should be relied upon to determine whether an intervention works. There seems to be a consensus among scientists and policy makers that randomized controlled trials provide the least equivocal evidence on the effects of a policy intervention. But this is, by no means, unanimous, and challenges have been periodically raised to whether experiments are the best design for generating evidence on what works (Boruch 1975; Pawson and Tilley 1994). Despite these “common contentions,” the emphasis on evidence-based policy has led to the recognition of the role of randomized experiments to evaluate justice policy when it is appropriate and possible to do so. For example, the Academy of Experimental Criminology was initiated in 2005 to recognize researchers who have conducted experiments as “Fellows” and to promote the use of experimental methods generally in the field of criminal justice (Weisburd et al. 2007). The Journal of Experimental Criminology was also initiated to provide a forum for rigorous evaluations and systematic reviews relevant to crime policy.

As mentioned above, because some policies cannot be evaluated using random assignment, such as when all of a population is receiving the intervention at the same time, the emphasis on evidence-based policy has led to proposals for using more sophisticated quasi-experimental approaches to evaluate crime policy (e.g., Henry 2009). Statistical impact evaluation designs such as regression discontinuity approaches and propensity score matching, which have been advocated and tried out in the social sciences (e.g., Campbell and Stanley 1966) and criminal justice (Berk and Rauma 1983; Minor et al. 1990) for several decades, are now more commonly utilized. In fact, workshops for researchers to become better acquainted with the latest techniques have been offered since 2010 by professional associations such as the American Society of Criminology.

Besides putting the light on evidence, and highlighting the generation and use of results from randomized controlled trials and certain classes of quasi-experiments, the evidence-based policy focus has also led to increased attention to how the results of prior studies that bear on a policy are synthesized. Techniques for analyzing separate but related studies have been around for many decades (e.g., Mosteller and Bush 1954). Further, quantitative approaches popularized by the term “meta-analysis” have been extensively used in the social sciences since the late 1970s (see Smith and Glass 1977). “Systematic reviews,” as they are now commonly referred to, have become the fulcrum of the evidence-based policy approach. This has also led to even more attention to how researchers locate relevant studies, how they can be pulled together and appraised for quality, and how they can be analyzed (Boruch and Petrosino 2010). For example, some studies are not published in peer reviewed journals, but if they meet quality standards, the evidence needs to be considered. The focus on evidence-based policy has brought with it attention on the rigorous and explicit methods needed to make sure that such evidence is located and included in syntheses designed for the decision-maker.
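One common way a meta-analysis combines separate studies is inverse-variance weighting, in which more precise studies count for more in the pooled estimate. The sketch below shows a fixed-effect version of that calculation; it is offered as one standard approach, not as the method used in any review cited here, and the effect sizes and standard errors in the example are made up.

```python
import math


def pooled_effect(effects, std_errors):
    """Fixed-effect, inverse-variance weighted average of study effect sizes.

    Each study is weighted by 1 / SE^2, so studies with smaller standard
    errors contribute more. Returns the pooled effect and its standard error.
    """
    if len(effects) != len(std_errors) or not effects:
        raise ValueError("need one standard error per effect, and at least one study")
    weights = [1.0 / se ** 2 for se in std_errors]
    total_weight = sum(weights)
    pooled = sum(w * e for w, e in zip(weights, effects)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    return pooled, pooled_se


# Hypothetical example: two equally precise studies with different effect sizes.
estimate, estimate_se = pooled_effect([0.2, 0.4], [0.1, 0.1])
```

A fixed-effect model assumes the studies estimate a single common effect; when programs and settings differ, reviewers often prefer a random-effects model, which is not shown here.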

Another important by-product of the focus on evidence-based policy has been the development of systems that get the evidence on policies into the hands of busy decision-makers. Many public agencies do not have staff who can spend the time necessary to do a systematic review, and they generally rely on external and trusted sources for evidence. The advent of electronic technology has meant that summaries of evidence from systematic reviews can be provided quickly so long as the intended user has access to the Internet and can download documents. Groups such as the Campbell Collaboration’s Crime and Justice Group not only prepare and update reviews of evidence but make them freely available to any intended user around the world.

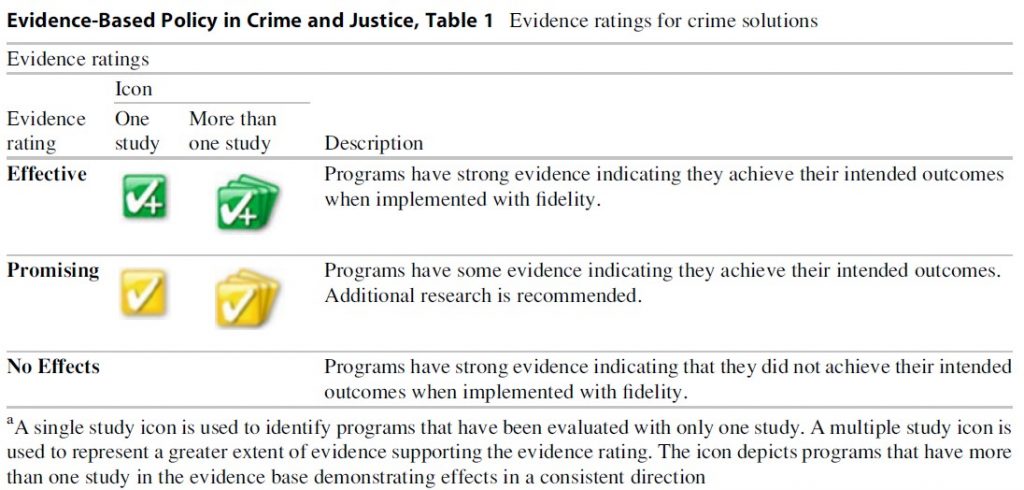

Evidence-Based Policy in Crime and Justice, Table 1 Evidence ratings for crime solutions

Campbell Collaboration reviews tend to be broad summaries of “what works” for a particular problem (e.g., gun violence) and classes of interventions (e.g., cognitive-behavioral programs). They are not usually focused on brand name programs or very specific, fine-grained definitions of an intervention.

Because decision-makers often need evidence on particular interventions, other approaches to providing more fine-grained evidence have been developed. Some of these approaches fall under the rubrics of “best practice lists” or “evidence-based registries.” For instance, such a registry might summarize the evaluation evidence for a particular brand name of a program (e.g., D.A.R.E., Drug Abuse Resistance Education). Although the number of such registries and lists relevant to justice has grown since the late 1990s (Petrosino 2005), the largest effort in the field, Crime Solutions (crimesolutions.gov), was initiated in 2010. This project compiles the available evidence on more or less branded programs like “Functional Family Therapy” and “Aggression Therapy,” rates how rigorous the evidence is according to a schema (which prioritizes results from randomized controlled trials), and then provides a summary based on rigor and amount of evidence. Table 1 provides the schema on how evidence for these programs and policies is rated (CrimeSolutions.gov 2012).

Limitations Of Evidence-Based Policy

Despite the gains in the development and synthesis of rigorous evidence and the methods for rapid transmission into the hands of policy makers (e.g., electronic distribution), there are some century-old barriers that limit and challenge those who wish to see evidence inform crime policy. As Lipton (1992) has written, the justice decision-maker at the policy level has many inputs into the choices made about funding, programs, allocations, and deployment. Research evidence is but one of the many “desiderata,” and although evidence-based policy places a premium on scientific research to guide decision-making, choices are also influenced by ethics, fairness, resources, politics, ideology, special interests, tradition, experience, training, and many other factors. Researchers in criminal justice want a seat at the table, but they might find that the table is very crowded and that their voice may not be heard in the din.

There are many areas in US policy in which emotions are so charged that it is difficult for evidence to make an impact. For example, gun control has zealous advocates both for and against it, and impassioned discussions about evidence and their role in policy are often difficult if not impossible to have (Webster et al. 1997). A high profile murder, particularly if it is sexual in nature and involves a child victim, can cause knee jerk policy reactions and overreactions that have no basis or even run counter to research evidence (Petrosino 2000). In such charged situations, it is difficult for evidence to get a fair hearing, if any hearing at all.

As Boruch and Lum (2012:4) have written, “sturdy indifference to new and dependable evidence are a fact of life.” Reluctance to trust evidence, even the most compelling, is true in the policy sector and other parts of society. As aforementioned, Campbell (1969) wrote about the reluctance of policy makers to embrace evaluation for fear that it will highlight failure of their decisions. Overcoming this to promote learning organizations that are not in fear of “upsets” has long been a challenge. Finckenauer and Gavin (1999) write that after the evaluation questioning the effect of California’s Scared Straight program (known as SQUIRES) came out in 1983, the reaction by policy makers was to get rid of evaluation and keep the program. Instead of studying the program further, they evaluated it with “testimonials” from letters written to and by participating prisoners.

“Evidence destruction techniques” are easy to mount and often difficult to contend with. For example, in the case of Drug Abuse Resistance Education, or D.A.R.E., a long litany of evaluations questioned whether a police-led drug prevention program in elementary school reduced self-reported drug use by D.A.R.E. youth. D.A.R.E.’s leadership group (D.A.R.E. America) responded to each new evaluation with the same criticism: “D.A.R.E. has already revised its curriculum, the evaluation was of the old curriculum, therefore, the evaluation has no merit” (Petrosino 2005). Even minor tweaks of the curriculum were touted as major revisions in an attempt to nullify evaluation evidence by making it appear obsolete and irrelevant. Vested interests often lead to the use of such techniques. A supporter of the “Campaign for Dark Skies” (Marchant 2004) criticized the methods in Farrington and Welsh’s (2002) systematic review of research on the effects of street lighting, motivated by fear of how its positive findings would affect the ability of professional and amateur astronomers to see the stars and planets in the UK.

Mears (2007) has highlighted some other challenges to evidence-based crime policy, including little questioning about whether the policies are needed in the first place, the pursuit of silver bullets or panaceas instead of a rational and holistic crime policy, the difficulties of implementing ideal evidence-based policy in the real world, the lack and misinterpretation of rigorous studies, and the challenge of getting good data on costs so that decision-makers can make rational choices.

Weiss and colleagues (2008) also make the point that in a democratic society, multiple inputs are expected and may be necessary. A strict adoption of evidence-based crime policy could translate into one small group (influential members of the scientific community) exerting too much influence on a process that, in a democratic society, should be representative of the majority of citizens.

Bibliography:

- Berk RA, Rauma D (1983) Capitalizing on nonrandom assignment to treatments: a regression discontinuity evaluation of a crime control program. J Am Stat Assoc 78(381):21–27

- Boruch R (1975) On common contentions about randomized field experiments. In: Boruch, Reicken (eds) Experimental testing of public policy: the proceedings of the 1974 Social Sciences Research Council conference on social experimentation. Westview Press, Boulder, pp 107–142

- Boruch RR, Lum C (2012) Eight lessons about evidence-based crime policy. Transl Criminol 2011. 1(Summer):4–5

- Boruch RF, Petrosino A (2010) Meta-analyses, systematic reviews and evaluation syntheses. In: Wholey J et al (eds) Handbook of practical program evaluation, 3rd edn. Jossey-Bass, New York, pp 531–553

- Campbell DT (1969) Reforms as experiments. Am Psychol 24:409–429

- Campbell DT, Stanley JC (1966) Experimental and quasiexperimental designs for research. Rand McNally, Skokie

- Cosgrove CA, McElroy JE (1986) The fixed tour experiment in the 115th precinct: its effects on police officer stress, community perceptions, and precinct management. Executive summary. Vera Institute of Justice, New York

- CrimeSolutions.gov (2012) About CrimeSolutions.gov. www.crimesolutions.gov/about.aspx

- Dershowitz A (1996) Reasonable doubts: the criminal justice system and the O.J. Simpson case. Simon & Schuster, New York

- Farrington DP, Welsh BC (2002) Improved street lighting and crime prevention. Justice Q 19:313–342

- Finckenauer JO, Gavin PW (1999) Scared straight: the panacea phenomenon revisited. Waveland Press, Prospect Heights

- Henry GT (2009) Estimating and extrapolating causal effects for crime prevention policy and program evaluation. In: Knutsson J, Tilley N (eds) Evaluating crime prevention initiatives. Criminal Justice Press, Monsey, pp 147–174

- Lipton DS (1992) How to maximize utilization of evaluation research by policymakers. Ann Am Acad Polit Soc Sci 52(1):175–188

- Lum C, Koper CS, Telep CW (2011) The evidence based policy matrix. J Exp Criminol 7:3–26

- Marchant PR (2004) A demonstration that the claim that brighter lighting reduces crime is unfounded. Br J Criminol 44:441–447

- Mears DP (2007) Towards rational and evidence-based crime policy. J Crim Justice 35(6):667–682

- Minor KT, Hartmann DJ, Davis SF (1990) Preserving internal validity in correctional evaluation research: the biased assignment design as an alternative to randomized design. J Contemp Crim Justice 6(4):215–225

- Mosteller F, Bush RR (1954) Selected quantitative techniques. In: Lindzey G (ed) Handbook of social psychology: vol. 1. Theory and method. Addison-Wesley, Cambridge, MA, pp 289–334

- Oakley A (1998) Experimentation and social interventions: a forgotten but important history. BMJ 317(7167):1239–1242

- Pawson R, Tilley N (1994) What works in evaluation research. Br J Criminol 34(2):291–306

- Petrosino AJ (2000) How can we respond effectively to juvenile crime? Pediatrics 105(3 Pt 1):635–637

- Petrosino A (2005) D.A.R.E. and scientific evidence: a 20-year history. Jpn J Sociol Criminol 30:72–88

- Sackett DL, Rosenberg WMC, Gray JAM, et al (1996) Evidence based medicine: what it is and what it isn’t. BMJ 312:71–72

- Sherman LW (1998) Evidence-based policing. Ideas in American policing series. Police Foundation, Washington, DC

- Sherman LW, Farrington DP (2002) In: Brandon W, Doris MK (eds) Evidence-based crime prevention. Routledge, London

- Sherman LW, Gottfredson D, MacKenzie DL, Eck JE, Reuter P, Bushway S (1997) Preventing crime: what works, what doesn’t, what’s promising – a report to the attorney general of the United States. United States Department of Justice, Office of Justice Programs, Washington, DC

- Smith ML, Glass GV (1977) Meta-analysis of psychotherapy outcome studies. Am Psychol 32:752–780

- Tilley N, Laycock G (2000) Joining up research, policy and practice about crime. Policy Stud 21(3):213–227

- Wallace JD (2011) Review of the coalition for evidence-based policy. Coalition for Evidence-based Policy, Washington, DC, http://coalition4evidence.org/wordpress/wp-content/uploads/Report-on-theCoalition-for-Evidence-Based-Policy-March-2011.pdf. Accessed 5 Oct 2012

- Webster DW, Vernick JS, Ludwig J, Lester KJ (1997) Flawed gun policy research could endanger public safety. Am J Public Health 87(6):918–921

- Weisburd D, Mazerolle L, Petrosino A (2007) The academy of experimental criminology: advancing randomized trials in crime and justice. Criminologist 32:1–7

- Weiss C, Murphy-Graham E, Birkeland S (2005) An alternate route to policy influence: evidence from a study of the Drug Abuse Resistance Education (D.A.R.E.) program. Am J Eval 26(1):12–31

- Weiss C, Murphy-Graham E, Gandhi A, Petrosino A (2008) The fairy godmother and her warts – making the dream of evidence-based policy come true. Am J Eval 29(1):29–47
