This sample School-Based Interventions For Aggressive And Disruptive Behavior Research Paper is published for educational and informational purposes only.

Schools are an important location for interventions to prevent or reduce aggressive behavior. They are a setting in which much interpersonal aggression among children occurs and the only setting with almost universal access to children. There are many prevention strategies from which school administrators can and do choose, including surveillance (e.g., metal detectors, security guards), deterrence (e.g., disciplinary rules, zero tolerance policies), and psychosocial programs. Indeed, over 75 % of schools in one national sample reported using one or more of these prevention strategies to deal with behavior problems (Gottfredson et al. 2000). Other reports similarly indicate that more than three-fourths of schools offer mental health, social service, and prevention service options for students and their families (Brener et al. 2001). Among psychosocial prevention strategies, a broad array of programs can be implemented in schools. These include packaged curricula and homegrown programs for use school-wide and others that target selected children already showing behavior problems or deemed at risk for such problems. Most psychosocial prevention programs address a range of social and emotional factors assumed to cause aggressive behavior or to be instrumental in controlling it (e.g., social skills or emotional self-regulation), and it is these psychosocial programs that are the focus of this meta-analysis.

Key Issues

Various resources are available to help schools identify programs with proven effectiveness. Among these resources are the Blueprints for Violence Prevention; the Collaborative for Academic, Social, and Emotional Learning (CASEL); and the National Registry of Evidence-based Programs and Practices (NREPP) administered by the Substance Abuse and Mental Health Services Administration (SAMHSA). There is, however, little indication that the evidence-based programs promoted to schools through such sources have been widely adopted or that, when adopted, they are implemented with fidelity (Gottfredson and Gottfredson 2002).

While lists of evidence-based programs can provide useful guidance to schools about interventions likely to be effective in their settings, they are limited by their orientation to distinct program models and the relatively few studies typically available for each such program. A meta-analysis, by contrast, can encompass virtually all credible studies of such interventions and yield evidence about generic intervention approaches as well as distinct program models. Perhaps most important, it can illuminate the features that characterize the most effective programs and the kinds of students who benefit most. Since many schools already have prevention programs in place, a meta-analysis that identifies characteristics of successful prevention programs can inform schools about ways they might improve those programs or better direct them to the students for whom they are likely to be most effective. Thus, the purpose of the meta-analysis reported here is to investigate which program and student characteristics are associated with the most effective treatments.

In 2003, we published a meta-analysis on the effects of school-based psychosocial interventions for reducing aggressive and disruptive behavior aimed at identifying the characteristics of the most effective programs (Wilson et al. 2003). That meta-analysis included 172 experimental and quasi-experimental studies of intervention programs, most of which were conducted as research or demonstration projects with significant researcher involvement in program implementation. Though not necessarily representative of routine practice in schools, those programs showed significant potential for reducing aggressive and disruptive behavior, especially for students whose baseline levels of antisocial behavior were already high. Different intervention approaches appeared equally effective, but significantly larger reductions in aggressive and disruptive behavior were produced by those programs with better implementation, that is, more complete delivery of the intended intervention to the intended recipients. In 2007, we published an updated version of that meta-analysis that included 249 studies of school-based prevention programs (Wilson and Lipsey 2007). For that analysis, we separated the programs into four mutually exclusive groups, characterized by the general service format and target population of the programs. The four program groups were universal programs, pullout programs for high-risk populations, comprehensive programs, and special school programs. In addition to being distinctive in terms of service format, programs in each of the groups had a number of methodological, subject, and dosage differences that made it unwise to combine them in a single analysis. Furthermore, school decision-makers typically make choices from within a format category, rather than across them. Thus, there was little utility in lumping all the school-based prevention programs into one larger set.

Results of the 2007 meta-analysis found positive overall intervention effects on aggressive and disruptive behavior and other relevant outcomes. The most common and most effective approaches were universal programs and pullout programs targeted for high-risk children. Comprehensive programs did not show significant effects and those for special schools or classrooms were marginal. Different program approaches (e.g., behavioral, cognitive, social skills) produced largely similar effects. Effects were larger for better implemented programs and for those involving students at higher risk for aggressive behavior.

Since the publication of our earlier work, the analytic methods for handling dependent effect size estimates in meta-analysis have improved. Multiple effect size estimates from a single study sample can now be included in a meta-analysis using robust standard errors that account for the statistical dependencies present when multiple effect size estimates are used (Hedges et al. 2010). This research paper provides an opportunity to take the school-based prevention programs included in the 2003 and 2007 papers and reanalyze their results using these new statistical methods.

Method

Criteria For Including Studies In The Meta-Analysis

Studies were selected for this meta-analysis based on a set of detailed criteria, summarized as follows:

- The study was reported in English no earlier than 1950 and involved a school-based program for children attending any grade, prekindergarten through 12th grade.

- The study assessed intervention effects on at least one outcome variable that represented either (a) aggressive or violent behavior (e.g., fighting, bullying, person crimes), (b) disruptive behavior (e.g., classroom disruption, conduct disorder, acting out), or (c) both aggressive and disruptive behavior.

- The study used an experimental or quasi-experimental design that compared students exposed to one or more identifiable intervention conditions with one or more comparison conditions on at least one qualifying outcome variable. To qualify as an experimental or quasi-experimental design, a study was required to meet at least one of the following criteria:

  - Students or classrooms were randomly assigned to conditions.

  - Students in the intervention and comparison conditions were matched and the matching variables included a pretest for at least one qualifying outcome variable or a close proxy.

  - If students or classrooms were not randomly assigned or matched, the study reported both pretest and posttest values on at least one qualifying outcome variable or sufficient demographic information to describe the initial equivalence of the intervention and comparison groups.

Search And Retrieval Of Studies

An attempt was made to identify and retrieve the entire population of published and unpublished studies that met the inclusion criteria summarized above. The primary source of studies was a comprehensive search of bibliographic databases, including PsycINFO, Dissertation Abstracts International, ERIC (Education Resources Information Center), US Government Printing Office publications, National Criminal Justice Reference Service, and MEDLINE. Second, the bibliographies of meta-analyses and literature reviews on similar topics were reviewed for eligible studies. Finally, the bibliographies of retrieved studies were themselves examined for candidate studies. Identified studies were retrieved from the library, obtained via interlibrary loan, or requested directly from the author. We obtained and screened more than 95 % of the reports identified as potentially eligible through these sources. Note that a new search was not performed for this research paper and that the literature reviewed here is current through 2004.

Coding Of Study Reports

Study findings were coded to represent the mean difference in aggressive behavior between experimental conditions at the posttest measurement. The effect size statistic used for these purposes was the standardized mean difference, defined as the difference between the treatment and control group means on an outcome variable divided by their pooled standard deviation (Lipsey and Wilson 2001). In addition to effect size values, information was coded for each study that described the methods and procedures, the intervention, and the student samples. Coding reliability was determined from a sample of approximately 10 % of the studies that were randomly selected and recoded by a different coder. For categorical items, intercoder agreement ranged from 73 % to 100 %. For continuous items, the intercoder correlations ranged from 0.76 to 0.99. A copy of the full coding protocol is available from the author.
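As a minimal sketch of the standardized mean difference described above, the Python function below divides the difference between group means by the pooled standard deviation. The function name and the `lower_is_better` flag are illustrative assumptions: the flag orients the sign so that positive values indicate less aggressive behavior in the program group, which is how positive effects are interpreted in the results below. The example ratings are hypothetical.

```python
import math

def smd(mean_tx, sd_tx, n_tx, mean_ctl, sd_ctl, n_ctl, lower_is_better=True):
    """Standardized mean difference: group mean difference / pooled SD."""
    # Pooled SD weights each group's variance by its degrees of freedom.
    pooled_sd = math.sqrt(((n_tx - 1) * sd_tx ** 2 + (n_ctl - 1) * sd_ctl ** 2)
                          / (n_tx + n_ctl - 2))
    diff = mean_tx - mean_ctl
    # For aggression measures a lower treatment mean is the desired result,
    # so the sign is flipped to make positive values favor the program.
    if lower_is_better:
        diff = -diff
    return diff / pooled_sd

# Hypothetical posttest aggression ratings (lower = less aggressive)
print(smd(10.0, 4.0, 30, 12.0, 4.0, 30))
```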

General Analytic Procedures

All effect sizes were multiplied by the small-sample correction factor 1 - 3/(4n - 9), where n is the total sample size for the study, and each effect size was weighted by its inverse variance in all computations (Lipsey and Wilson 2001). The inverse variance weights were computed using the subject-level sample size for each effect size. Because many of the studies used groups (e.g., classrooms, schools) as the unit of assignment to intervention and control conditions, they involved a design effect associated with the clustering of students within classrooms or schools that reduces the effective sample size. The study reports provided no basis for estimating those design effects or adjusting the inverse variance weights for them, so cluster adjustments were not made in the analyses reported here. This should not greatly affect the effect size estimates or the magnitude of their relationships to moderator variables, but it does assign them somewhat smaller standard errors and, hence, larger inverse variance weights than is technically correct. A dummy code identifying these cases was included in the analyses to reveal any differences in findings from these studies relative to those using students as the unit of assignment. The effect sizes for cluster-assigned studies were not statistically different from those for student-assigned studies in any analysis.
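The small-sample correction and inverse variance weighting can be sketched as follows. The variance expression is the usual large-sample approximation for a standardized mean difference given in Lipsey and Wilson (2001); the function names are hypothetical, and, as in the analyses described here, the weights use student-level sample sizes with no cluster adjustment.

```python
def hedges_correction(d, n_total):
    """Apply the small-sample correction factor 1 - 3/(4n - 9), n = total sample size."""
    return d * (1 - 3 / (4 * n_total - 9))

def smd_variance(g, n_tx, n_ctl):
    """Approximate sampling variance of a standardized mean difference."""
    n = n_tx + n_ctl
    return n / (n_tx * n_ctl) + g ** 2 / (2 * n)

def inverse_variance_weight(g, n_tx, n_ctl):
    # Student-level sample sizes; no design-effect adjustment for clustering.
    return 1.0 / smd_variance(g, n_tx, n_ctl)

# Hypothetical study: uncorrected d = 0.5 with 30 students per group
g = hedges_correction(0.5, 60)
print(round(g, 4), inverse_variance_weight(g, 30, 30))
```

Because the weight grows roughly in proportion to sample size, a handful of very large studies can dominate a weighted analysis, which is the motivation for Winsorizing the sample size distribution.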

Examination of the effect size distributions for each of the four program format groups identified a small number of outliers with potential to distort the analysis. Outliers were defined as values that fell more than three interquartile ranges (IQR) above the 75th percentile or below the 25th percentile of the effect size distribution.

Outliers identified using this procedure were Winsorized to the next closest value. In addition, several studies used unusually large samples. Because the inverse variance weights chiefly reflect sample size, those few studies would dominate any analysis in which they were included. Therefore, the extreme tail of the sample size distribution was Winsorized using the procedure described above, and the inverse variance weights were recomputed for those effect sizes. These adjustments allowed us to retain outliers in the analysis, but with less extreme values that would not exercise undue influence on the analysis results.
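A minimal sketch of the outlier rule, assuming quartiles computed with Python's `statistics.quantiles` using `method="inclusive"` (other quartile definitions can shift the fences slightly). Values more than three IQRs beyond the quartiles are pulled in to the nearest remaining value; the same function could be applied to the sample size distribution before the weights are recomputed. The function name and effect sizes are hypothetical.

```python
import statistics

def winsorize_outliers(values, k=3.0):
    """Pull values lying more than k IQRs beyond the quartiles in to the
    closest non-outlying value; all other values are returned unchanged."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low_fence, high_fence = q1 - k * iqr, q3 + k * iqr
    inliers = [v for v in values if low_fence <= v <= high_fence]
    lowest, highest = min(inliers), max(inliers)
    # Clamp to the range spanned by the non-outlying values.
    return [min(max(v, lowest), highest) for v in values]

# Hypothetical effect sizes with one extreme value
print(winsorize_outliers([0.1, 0.2, 0.15, 0.3, 0.25, 0.05, 3.5]))
```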

In the original publications (Wilson et al. 2003; Wilson and Lipsey 2007), only one effect size from each subject sample was used in the analyses to maintain independence of the effect size estimates. When more than one was available, the effect size from the measurement source most frequently represented across all studies (e.g., teachers’ reports, self-reports) was selected. Because we wanted to retain informant as a variable for analysis, we did not average across effect sizes from different informants; if there was more than one effect size from the same informant or source, however, their mean value was used. Techniques for computing robust standard errors for dependent effect sizes have since been developed (Hedges et al. 2010) and are employed in the analyses reported below. Thus, when a study reported intervention effects for more than one aggressive or disruptive behavior outcome, all effect sizes were used in the analyses. Dummy codes indicating the type of aggressive behavior measured and/or the informant or source of the outcome measure were examined for their influence on the effect sizes.
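The robust standard error approach of Hedges et al. (2010) can be illustrated for the simplest case, an intercept-only model (the overall mean). This sketch assumes approximate "correlated effects" weights, 1/[k_j(v̄_j + τ²)], where k_j is the number of effect sizes from study j and v̄_j their mean sampling variance, and it omits the small-sample corrections often added in practice. The function name, the τ² value, and the data are hypothetical.

```python
import math
from collections import defaultdict

def robust_mean(effects, tau2=0.0):
    """Weighted mean and robust (sandwich) standard error for dependent effect sizes.

    effects: (study_id, effect_size, variance) tuples; one study may contribute
    several rows. tau2: between-study variance, assumed estimated elsewhere.
    """
    by_study = defaultdict(list)
    for study, es, var in effects:
        by_study[study].append((es, var))

    # Approximate correlated-effects weights: a study's total weight is
    # 1 / (mean variance + tau2), split evenly over its k_j effect sizes.
    weights = {}
    for study, rows in by_study.items():
        k = len(rows)
        mean_var = sum(v for _, v in rows) / k
        weights[study] = 1.0 / (k * (mean_var + tau2))

    total_w = sum(weights[s] * len(rows) for s, rows in by_study.items())
    mean = sum(weights[s] * es
               for s, rows in by_study.items() for es, _ in rows) / total_w

    # Sandwich estimator: weighted residuals are summed within each study
    # before squaring, so within-study correlations need not be known.
    meat = sum((weights[s] * sum(es - mean for es, _ in rows)) ** 2
               for s, rows in by_study.items())
    return mean, math.sqrt(meat) / total_w

# Hypothetical data: study "A" reports two outcomes, study "C" reports three.
data = [("A", 0.30, 0.04), ("A", 0.20, 0.04), ("B", 0.10, 0.05),
        ("C", 0.45, 0.03), ("C", 0.35, 0.03), ("C", 0.40, 0.03)]
print(robust_mean(data, tau2=0.01))
```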

Finally, many studies provided data sufficient for calculating mean difference effect sizes on the outcome variables at the pretest. In such cases, we adjusted the posttest effect size by subtracting the pretest effect size value. This information was included in the analyses presented below to test whether there were systematic differences between effect sizes adjusted in this way and those that were not.
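The pretest adjustment is a simple subtraction. The sketch below also returns a flag recording whether an adjustment was possible, since that indicator was itself examined in the analyses. The function name and values are illustrative.

```python
def pretest_adjusted(posttest_es, pretest_es=None):
    """Return (effect size, adjusted_flag).

    Subtracts the pretest effect size when one is available; the flag can serve
    as the dummy code used to compare adjusted and unadjusted effect sizes.
    """
    if pretest_es is None:
        return posttest_es, False
    return posttest_es - pretest_es, True

# Hypothetical: program group already slightly better off at pretest
print(pretest_adjusted(0.35, 0.10))
print(pretest_adjusted(0.35))
```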

A small number of studies were missing data on the method, participant, or program variables used in the final analyses; missing values were imputed using an expectation-maximization (EM) algorithm in SPSS.

Analysis of the effect sizes was conducted separately for each program format (described below) and done in several stages. We first produced the random effects mean effect size for each group of effect sizes and examined the homogeneity of the effect size distributions using the Q-statistic (Hedges and Olkin 1985). Moderator analyses were then performed to identify the characteristics of the most effective programs using weighted mixed effects multiple regression with the aggressive/disruptive behavior effect size as the dependent variable. When the numbers of effect sizes and studies were sufficient, three models were estimated to examine the added contribution of different sets of variables. In the first stage of these analyses, we examined the influence of study methods on the effect sizes. Influential method variables were carried forward as control variables for the next stage of analysis, which examined the relationships between student characteristics and effect size. The third stage added important treatment characteristics to the models. Because our coding manual yielded a large number of potential moderators, moderators were selected based on the size of their weighted zero-order correlations with the effect size, with attention to collinearity among the moderator variables. That is, if two potential moderators were highly correlated with each other and with effect size, the one with the larger correlation with effect size was selected for the meta-regression models. Random effects analysis was used throughout but, in light of the modest number of studies in some categories and the large effect size variance, statistical significance was reported at the alpha = 0.10 level as well as the conventional 0.05 level.
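The random effects mean, its confidence interval, and the Q homogeneity statistic can be sketched as below, assuming the DerSimonian-Laird form of the method-of-moments estimator for the between-study variance τ². This simple version treats every effect size as independent, so by itself it does not address the dependency issue discussed above. The function name and inputs are hypothetical.

```python
import math

def random_effects_mean(effects, variances, z=1.96):
    """Random effects mean with a method-of-moments (DerSimonian-Laird) tau^2.

    effects: effect sizes; variances: their sampling variances.
    Treats every effect size as independent.
    """
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    fixed_mean = sum(wi * es for wi, es in zip(w, effects)) / sum_w

    # Homogeneity statistic Q: weighted squared deviations from the fixed-effect mean.
    q = sum(wi * (es - fixed_mean) ** 2 for wi, es in zip(w, effects))
    df = len(effects) - 1

    # Method-of-moments estimate of between-study variance, truncated at zero.
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c)

    # Random effects weights add tau^2 to each sampling variance.
    w_re = [1.0 / (v + tau2) for v in variances]
    mean = sum(wi * es for wi, es in zip(w_re, effects)) / sum(w_re)
    se = math.sqrt(1.0 / sum(w_re))
    return {"mean": mean, "ci": (mean - z * se, mean + z * se),
            "Q": q, "df": df, "tau2": tau2}

# Hypothetical effect sizes and sampling variances
print(random_effects_mean([0.05, 0.20, 0.35, 0.10], [0.02, 0.01, 0.03, 0.015]))
```

Q is referred to a chi-square distribution with df degrees of freedom; a value well above df indicates more variability than sampling error alone would produce.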

Results

Program Format And Treatment Modality

The literature search and coding process yielded data from 283 independent study samples. Note that many studies reported results separately for different subgroups of students (most commonly, results for boys and girls were reported separately). We treated each subgroup as a separate study sample. The 283 study samples participated in a variety of prevention and intervention programs. For purposes of analyzing their effects on student aggressive/disruptive behavior, we divided the programs into four groups according to their general service format. Programs differ across these groups on a number of methodological, participant, and intervention characteristics that made it unwise to combine them in a single analysis. The four intervention formats are as follows:

- Universal programs. These programs are delivered in classroom settings to all the students in the classroom; that is, the children are not selected individually for treatment but, rather, receive it simply because they are in a program classroom. However, the schools with such programs are often in low-socioeconomic-status and/or high-crime neighborhoods, and thus the children in these universal programs may be considered at risk by virtue of their socioeconomic background or neighborhood context.

- Pullout programs for high-risk students. These programs are provided to students who are specifically selected to receive treatment because of conduct problems or some risk factor (typically identified by teachers for social problems or classroom disruptiveness). Most of these programs are delivered to the selected children outside of their regular classrooms (either individually or in groups), although some are used in the regular classrooms but targeted on the selected children.

- Special schools or classes. These programs involve special schools or classrooms that serve as the usual educational setting for the students involved. Children are placed in these special schools or classrooms because of behavioral or academic difficulties that schools do not want to address in the context of mainstream classrooms. Included in this category are special education classrooms for behavior disordered children, alternative high schools, and schools-within-schools programs.

- Comprehensive/multimodal programs. These programs involve multiple distinct intervention elements (e.g., a social skills program for students and parenting skills training) and/or a mix of different intervention formats. They may also involve programs for parents or capacity building for school administrators and teachers in addition to the programming provided to the students. Within the comprehensive service format, some programs are delivered universally while others are targeted toward high-risk groups. All but one of the programs in this category include services for both students and their parents.

General Study Characteristics

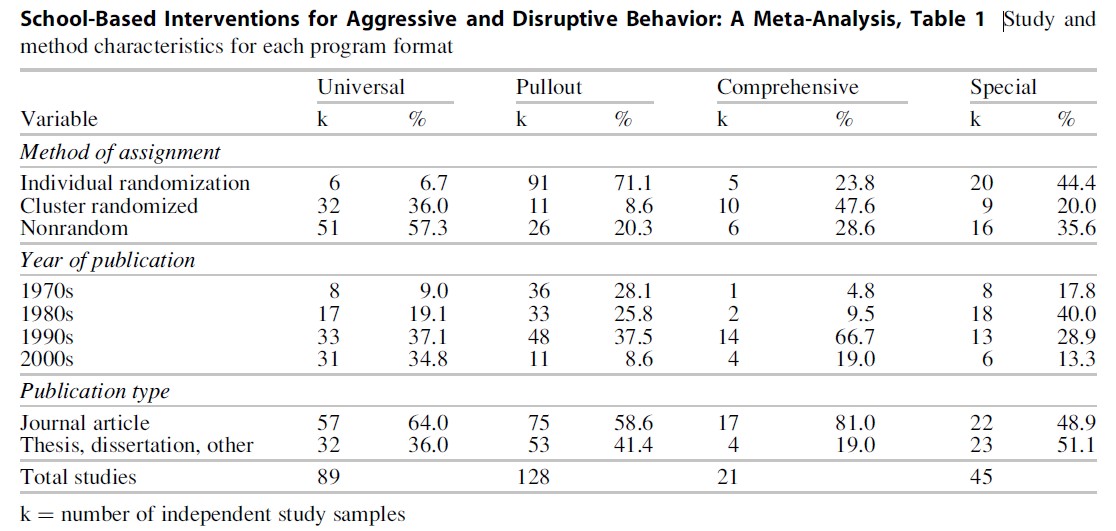

Nearly 90 % of the studies were conducted in the United States with over 75 % run by researchers in psychology or education. Table 1 shows additional characteristics of the studies, broken down by program format. Among universal programs, nonrandomized designs were most common. However, cluster randomization, in which schools or classrooms were randomly assigned to treatment conditions, was utilized with some frequency for both the universal and comprehensive programs, as would be expected given how participants were typically recruited for such studies. Individual random assignment was the norm for the pullout programs and common for the special school programs, though cluster randomization and nonrandomized designs were also present for these formats. Overall, fewer than 20 % of the studies were conducted prior to 1980 and most were published in peer-reviewed journals (60 %), with the remainder reported as dissertations, theses, conference papers, and technical reports.

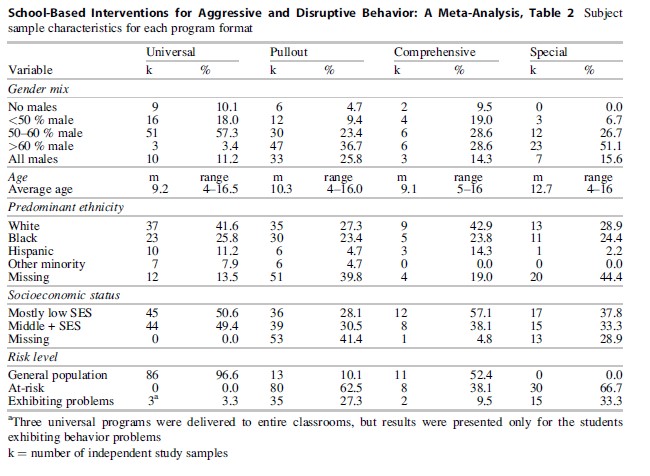

The student samples reflect the diversity in American schools (see Table 2). Most comprised a mix of boys and girls, but there were some all-boy samples (19 %) and a few all-girl samples (6 %). Formats targeting higher-risk children (i.e., pullout and special school programs) tended to have larger proportions of boys. Minority children were well represented, with over a third of the studies having primarily minority youth; nearly 30 % of the included studies, however, did not report ethnicity information, making it difficult to examine differential program effects for different ethnic groups. Interestingly, missing information on ethnicity was more common among the special school and pullout programs, both of which tended to serve higher-risk groups of students. All school ages were included, from preschool through high school; the average age was around 9–10 for universal, pullout, and comprehensive programs and slightly older (about 13) for special school programs. A range of risk levels was also present, from generally low-risk students to those with serious behavior problems, and different risk levels were associated with the different program formats. Socioeconomic status was not widely reported, but a range of socioeconomic levels was represented among the studies that did report it.

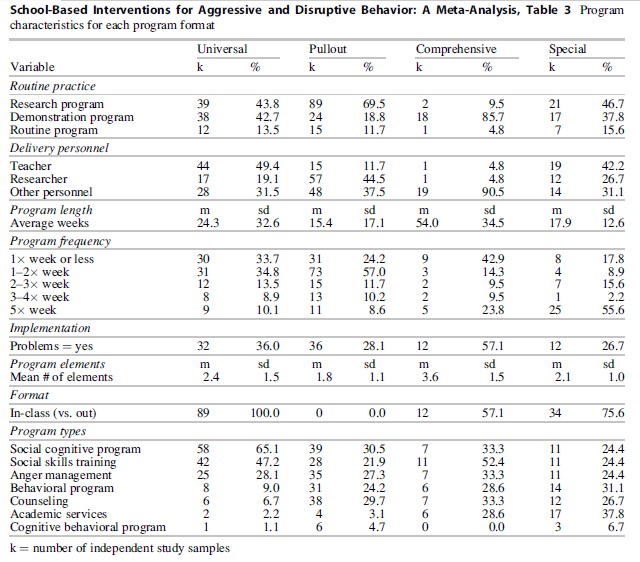

Characteristics of the delivery and dosage of the programs, as well as the different program types, are shown in Table 3. Most studies were conducted as research or demonstration projects with relatively high levels of researcher involvement. Routine practice programs implemented by typical service personnel with less researcher involvement were less common. Program length varied by format, with comprehensive programs the longest. Pullout and special school programs were the shortest, averaging 15 and 18 weeks, respectively. Universal programs averaged about 24 weeks. Service frequency also varied, with comprehensive and special school programs having larger proportions of daily contacts. Programs were generally delivered by teachers or the researchers themselves, though comprehensive programs tended to involve multiple types of other delivery personnel. About 35 % of the reports mentioned some difficulties with the implementation of the program. When reported, this information described a great variety of relatively idiosyncratic problems, for example, attendance at sessions, dropouts from the program, turnover among delivery personnel, problems scheduling all sessions or delivering them as intended, wide variation between program settings or providers, and results from implementation fidelity measures. This variety necessitated a rather broad coding scheme that distinguished three levels: no problems indicated, possible problems (some suggestion of difficulties but little explicit information), and definite problems with implementation.

The treatment modalities used in the four program formats varied. However, cognitive approaches and social skills training were common across all four service formats. Social cognitive strategies were cognitively oriented and focused on changing thinking patterns (e.g., hostile attributions) and developing social problem-solving skills (e.g., I Can Problem Solve; Shure and Spivack 1980). Anger management programs were also cognitively oriented but tended to focus on changing thinking patterns around anger and developing strategies for controlling angry impulses or coping with frustration (e.g., Coping Power; Lochman and Wells 2002). Social skills training focused on learning constructive behavior for interpersonal interactions, including communication skills and conflict management (Gresham and Nagle 1980). Also relatively common were behavioral strategies that manipulated rewards and incentives (e.g., the Good Behavior Game; Dolan et al. 1993). Traditional counseling for individuals, groups, or families was also represented and tended to use a variety of therapeutic techniques (Nafpaktitis and Perlmutter 1998). The treatment coding was not mutually exclusive for any of the four program formats because many programs involved more than one strategy. The comprehensive programs, however, tended to have more strategies on average than the other three formats, and their selection of components was distinctive. They tended to have multiple distinct components (e.g., a school-based cognitive component and a family-based component) and often had non-school-based elements, while programs in the other three format categories that had multiple components tended to combine similar elements, like social cognitive and anger management components, and to be solely school-based.

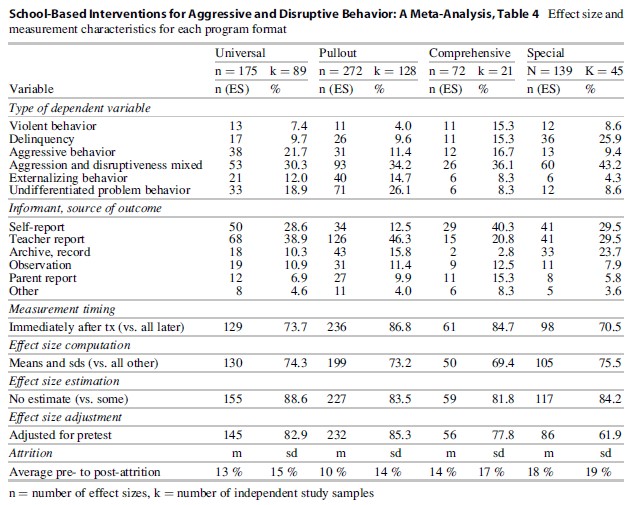

The analyses reported below allow the 238 study samples to contribute multiple effect sizes with different characteristics, which are shown in Table 4. The aggressive behavior outcomes were grouped into six categories. Violence outcomes involved clear-cut violent behavior, including hitting. Delinquency outcomes were generally not aggressive or violent and included arrests, police contacts, and self-reported criminal behavior. Aggressive behavior outcomes were those that assessed clearly physical aggression; these measures were similar to those for violence, but generally less serious. Ideally, we would have liked to examine program effects on relatively distinct groups of outcome constructs. However, very few of the measures that called themselves aggressive behavior focused solely on physically aggressive interpersonal behavior. Many included disruptiveness, acting out, and other forms of behavior problems that are negative, but not necessarily aggressive. These measures were placed in the aggression and disruption mixed category. Externalizing measures generally involved disruptive and acting-out behaviors, but did not include physical aggression. Finally, the undifferentiated problem behavior category comprised measures that included both internalizing and externalizing behaviors in the same score (e.g., the Child Behavior Checklist). Self-reports and teacher reports were the most common sources of information about aggressive and disruptive behavior, but observations, archival information, and parent reports were also used. Treatment effects were typically measured immediately after treatment, but some studies had longer follow-up periods. Effect sizes were generally computed directly from means and standard deviations, with other statistics used in about 25 % of the cases; counting mathematically equivalent statistics, direct computation was possible in over 80 % of the cases. Over 80 % of effect sizes also had pretest effect sizes that allowed for adjustment. Attrition was moderate and averaged 10–18 % across the four program formats.

Overall Effects For School-Based Prevention Programs

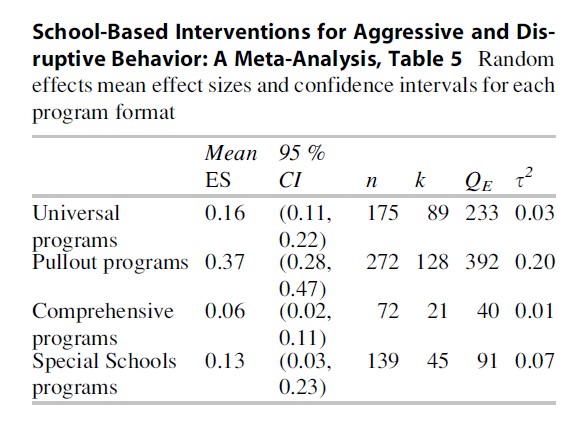

The random effects weighted mean effect sizes and confidence intervals for the four program formats are shown in Table 5. The mean effect sizes for the universal, pullout, comprehensive, and special school programs are all positive and statistically significant, indicating that the participants in the school-based programs represented here had significantly lower levels of aggressive and disruptive behavior after the programs than students in comparison groups. The pullout programs evidenced the largest mean effect size of the four groups. The comprehensive programs had the smallest mean effect size at 0.06. The homogeneity statistics for the four groups of programs suggest that there is greater variability in the distributions of effect sizes than would be expected from sampling error. This variation was expected to be associated with the nature of the interventions, students, and methods used in these studies. The next step, then, is to examine some of the method, subject, and program characteristics that may be associated with the variability in treatment effects using meta-regression models.

Results For Universal Programs

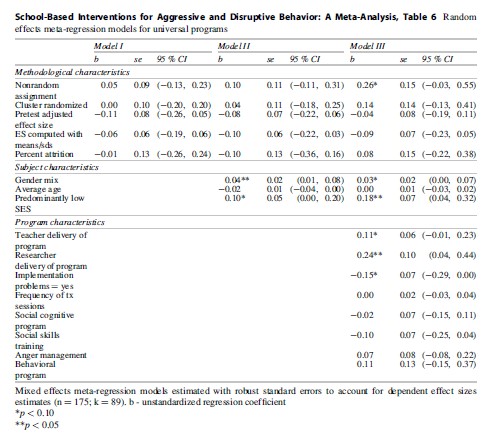

There were 89 studies of universal programs in the database, all delivered in classroom settings to entire classes of students. Many studies tested program effects on more than one relevant outcome. Thus, the universal programs contributed 175 effect size estimates to the analysis. The random effects weighted mean effect size for universal programs was 0.16 (p < 0.05) and the distribution of effect sizes evidenced heterogeneity. The moderator analysis focused first on the relationship between study methods and the intervention effects using random effects inverse variance weights estimated via the method of moments. As mentioned above, moderator variables were selected based on their weighted zero-order correlations with effect size. This analysis is shown as Model I in Table 6.
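The moderator screening described under General Analytic Procedures (rank candidates by their weighted zero-order correlation with effect size, and pass over a candidate that is highly correlated with one already chosen) might be implemented roughly as follows. The 0.7 collinearity cutoff, the greedy ordering, and all names are assumptions for illustration; the text does not state a numeric threshold.

```python
import math

def weighted_corr(x, y, w):
    """Weighted Pearson correlation (assumes x and y are not constant)."""
    sw = sum(w)
    mx = sum(wi * xi for wi, xi in zip(w, x)) / sw
    my = sum(wi * yi for wi, yi in zip(w, y)) / sw
    sxy = sum(wi * (xi - mx) * (yi - my) for wi, xi, yi in zip(w, x, y))
    sxx = sum(wi * (xi - mx) ** 2 for wi, xi in zip(w, x))
    syy = sum(wi * (yi - my) ** 2 for wi, yi in zip(w, y))
    return sxy / math.sqrt(sxx * syy)

def select_moderators(candidates, effect_sizes, weights, collinear_cutoff=0.7):
    """candidates: dict of moderator name -> coded values, one per effect size."""
    # Strongest weighted correlation with effect size first.
    ranked = sorted(candidates,
                    key=lambda name: abs(weighted_corr(candidates[name], effect_sizes, weights)),
                    reverse=True)
    chosen = []
    for name in ranked:
        # Keep a candidate only if it is not highly collinear with one already chosen.
        if all(abs(weighted_corr(candidates[name], candidates[c], weights)) < collinear_cutoff
               for c in chosen):
            chosen.append(name)
    return chosen

# Hypothetical moderators: "x2" nearly duplicates "x1" but tracks effect size less closely.
es = [0.1, 0.2, 0.3, 0.4]
cands = {"x1": [1, 2, 3, 4], "x2": [1, 2, 3, 5], "x3": [1, 0, 1, 0]}
print(select_moderators(cands, es, [1, 1, 1, 1]))
```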

Neither informant nor type of outcome measure was related to the effect size, so these variables were not included in the final models. Most notable is the lack of significant relationships between the study design variables and effect size. Method of assignment, pretest adjustments and computations, and attrition were not significantly related to effect size.

Our next step was to identify student characteristics that were associated with effect size while controlling for method variables. The results of this analysis are presented as Model II in the table. Only two student variables were significantly associated with effect size: gender mix and socioeconomic status. Samples with larger proportions of boys showed larger effects from universal programming than those with larger proportions of girls. Since boys are more likely to exhibit aggressive, disruptive, or acting-out behavior, this result is not surprising. Students with low socioeconomic status achieved significantly greater reductions in aggressive and disruptive behavior from universal programs than middle-class students (p < 0.10).

Model III in Table 6 shows the addition of the program characteristics to the models. Again, moderators were selected based on their correlations with effect size. In Model III, nonrandom assignment becomes statistically significant once the program variables are included, indicating that some of the variability remaining after controlling for subject and program characteristics was associated with method of assignment. Gender mix and socioeconomic status remained significantly associated with effect size. Several other variables in this analysis were also significant. Delivery personnel had a significant relationship with effect size: programs delivered by teachers or researchers had larger effects than those delivered by other personnel. In general, the other personnel were not school affiliated and included laypeople, social workers, and counselors. Well-implemented programs showed significantly larger effect sizes than those experiencing implementation problems. Dummy codes for the most common program types were also included in the model, and none of them was statistically significant. The different program types all produced positive effects on the outcomes and did not differ appreciably from one another in effectiveness.
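For a single moderator, weighted mixed effects regression reduces to weighted least squares with weights 1/(v + τ²), with τ² treated as known. The sketch below, using hypothetical inputs and an illustrative dummy code (1 = no implementation problems), shows that for a binary moderator the slope is simply the difference between the two groups' weighted mean effect sizes. Multi-moderator models such as Models I to III require the full matrix form.

```python
import math

def single_moderator_regression(effect_sizes, variances, x, tau2):
    """Weighted least squares for one moderator with random effects weights.

    Returns (intercept, slope, model-based standard error of the slope).
    """
    w = [1.0 / (v + tau2) for v in variances]
    sw = sum(w)
    mx = sum(wi * xi for wi, xi in zip(w, x)) / sw
    my = sum(wi * yi for wi, yi in zip(w, effect_sizes)) / sw
    sxx = sum(wi * (xi - mx) ** 2 for wi, xi in zip(w, x))
    sxy = sum(wi * (xi - mx) * (yi - my) for wi, xi, yi in zip(w, x, effect_sizes))
    slope = sxy / sxx
    intercept = my - slope * mx
    # With weights equal to inverse total variances, Var(slope) = 1 / Sxx.
    se_slope = math.sqrt(1.0 / sxx)
    return intercept, slope, se_slope

# Hypothetical: two studies with implementation problems (x = 0), two without (x = 1)
print(single_moderator_regression([0.1, 0.2, 0.4, 0.5], [0.04] * 4, [0, 0, 1, 1], tau2=0.01))
```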

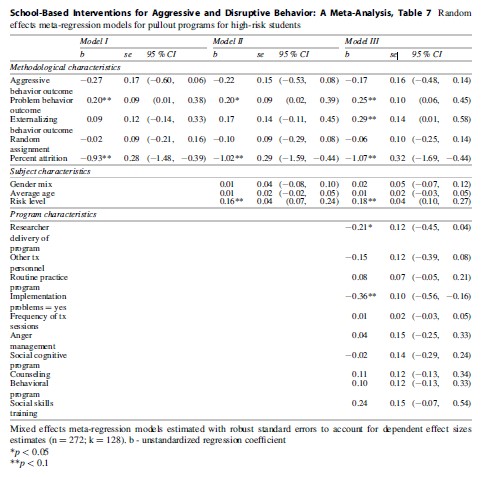

Results For Pullout Programs For High-Risk Students

There were 128 studies of pullout programs that targeted interventions to individually identified children. These studies contributed 272 effect sizes. The overall random effects mean effect size for these programs was 0.37 (p < 0.05), more than twice as large as the average effect size for universal programs. The homogeneity test showed significant variability in effect sizes across studies, and our analysis of the relationships between effect size and the methodological and substantive characteristics of the studies proceeded much as for the universal programs (see Table 7). First, Model I examined the influence of each method variable on the aggressive/disruptive behavior effect sizes. Here also the study design was not associated with effect size: random assignment studies did not show appreciably smaller or larger effects than nonrandomized studies. Note that for the pullout programs, the design contrast was primarily between individual-level randomization and nonrandomization; there were only 11 cluster randomized studies. The two method variables that did show significant relationships with effect size were the undifferentiated problem behavior outcome category and attrition. Outcome measures that included both internalizing and externalizing problem behavior tended to produce larger effect sizes than the other outcomes (all of which were more closely associated with aggressive behavior). Higher attrition was associated with smaller effect sizes.

Model II in Table 7 shows the addition of the subject characteristics to the analysis. One student characteristic had a significant relationship with effect size in this model. Higher-risk subjects showed larger effect sizes than lower-risk subjects, though very few low-risk children were involved in the pullout programs. The distinction here is mainly between indicated students who are already exhibiting behavior problems and selected students who have risk factors that may lead to later problems. Nevertheless, programs with the highest-risk students tended to have larger effects.

Model III includes the characteristics of the intervention programs. In contrast to the universal programs, researchers and other non-teacher personnel as treatment delivery agents were less effective than teachers. Programs with higher-quality implementation were associated with larger effects. None of the different program types was significantly better or worse than any other.

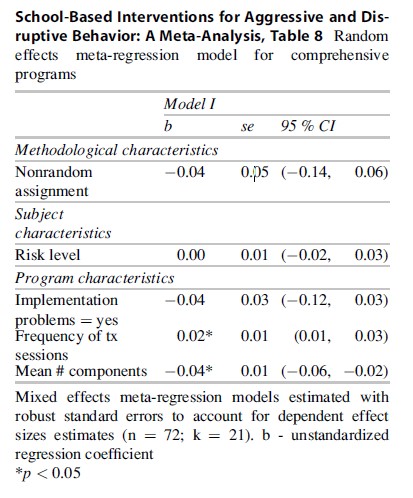

Results For Comprehensive Or Multimodal Programs

There were only 21 studies of comprehensive programs in the database, distinguished by their multiple treatment components and formats. These programs contributed 72 effect sizes indexing treatment effects on aggressive and disruptive behavior. The average number of distinct treatment components for these programs was nearly four, whereas the universal and pullout programs typically employed one or two treatment components. The studies of comprehensive programs tended to involve larger samples of students than the other program formats and a larger proportion of cluster randomizations as well. Because students randomized in intact clusters are not independent observations, standard errors computed at the student level are too small; thus, the statistical significance of the mean effect size of 0.06 is likely overstated. Comprehensive programs were generally longer than the universal and pullout programs. The modal program covered an entire school year, and almost half of the programs were longer than 1 year. In contrast, the average program lengths for universal and pullout programs were 24 and 15 weeks, respectively.

Students who participated in comprehensive programs were slightly better off than students who did not, though the effect size was small. The Q test has relatively low statistical power when the number of studies is small; therefore, despite the relative homogeneity of effect sizes compared with the other program formats, we ran a single meta-regression model to examine the influence of some key study characteristics. This model is shown in Table 8. Because of the limited number of studies available in this category, only a few variables were tested. None of the method characteristics had correlations with effect size above 0.20, so none were tested. We also examined student risk level and found that it was not associated with effect size. Overlapping with the risk level variable was the variable we tested in the 2007 paper, which compared universally delivered programs to those targeting higher-risk groups in a pullout format. Like the risk variable, this variable was not associated with effect size. Programs with implementation problems tended to have smaller effects than those without such problems, but the relationship was not significant. However, the frequency of treatment and the number of different treatment components were both associated with effect size. More frequent treatments produced larger effects on aggressive and disruptive behavior, whereas comprehensive programs with more distinct components tended to be less effective than those with fewer components.

Results For Special Schools Or Classes

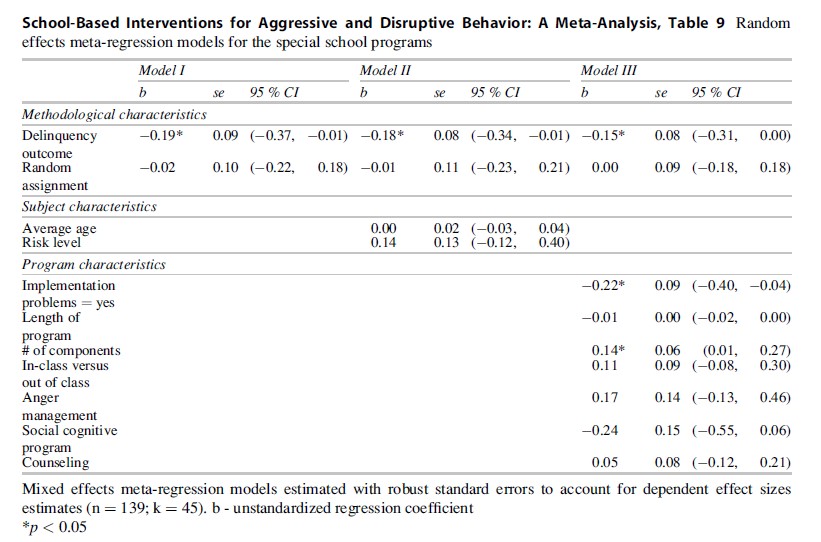

There were 45 studies of programs delivered in special schools or classrooms. These programs generally involved an academic curriculum plus programming for social or aggressive behavior. The students typically had behavioral (and often academic) difficulties that resulted in their placement outside of mainstream classrooms. The mean aggressive/disruptive behavior effect size for these programs was 0.13 (p < 0.05). The Q-statistic (Qe = 91) indicates that the distribution of effect sizes was heterogeneous. About 20 % of the studies of special programs assigned students to intervention and control conditions at the classroom level. As a result, there may be a design effect associated with the clustering of students within classrooms that overstates the significance, though the overall effect size and the regression coefficients presented below should not be greatly affected.
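The clustering concern can be made concrete with the usual design-effect approximation, DEFF = 1 + (m - 1)ρ, where m is the average number of students per classroom and ρ is the intraclass correlation. The point estimate is left unchanged; only its standard error grows by a factor of √DEFF, which is why clustering mainly affects significance tests rather than the effect size itself. This is a common approximation, not necessarily the adjustment any particular study used, and the cluster size, ρ, and naive standard error below are hypothetical values chosen for illustration, not figures from the studies reviewed.

```python
import math


def design_effect(mean_cluster_size, icc):
    """Variance inflation from assigning intact classrooms instead of students."""
    return 1.0 + (mean_cluster_size - 1.0) * icc


def cluster_adjusted_se(se_naive, mean_cluster_size, icc):
    """Scale a student-level standard error by the square root of the design effect."""
    return se_naive * math.sqrt(design_effect(mean_cluster_size, icc))


# Hypothetical inputs: effect size 0.13, naive SE 0.04, 25 students per class, ICC 0.05
effect, se_naive = 0.13, 0.04
se_adj = cluster_adjusted_se(se_naive, 25, 0.05)
print(f"naive z = {effect / se_naive:.2f}, adjusted z = {effect / se_adj:.2f}")
```

With these made-up inputs the design effect is 2.2, and the z statistic falls from about 3.25 to about 2.19 while the effect size stays at 0.13.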

The limited number of studies available for this program format necessitated that we examine fewer moderator variables in the meta-regression models (see Table 9). The two method characteristics with the highest weighted zero-order correlations with effect size were random assignment and delinquency outcomes. Studies employing individual randomization did not produce effect sizes that were smaller or larger than studies that did not use random assignment. When program effects were assessed on delinquency outcomes for the special school programs, effect sizes were significantly smaller than when any other outcome variable was used. Note that all of the other outcomes contained some form of aggressive, violent, or acting-out/disruptive behavior, while the delinquency outcomes included arrests, police contacts, and self-reported crime.

In Model II, two subject characteristics were tested: average age and risk level. Neither was associated with effect size. These subject characteristics were dropped from Model III so that more treatment characteristics could be tested. The length of the program did not have a significant relationship to effect size. In one form of the special school programs, students were assigned to special education classes or schools and the program was delivered entirely in the classroom setting. In the other form, students in special education classrooms were pulled out of class for additional small-group treatment. The programs delivered in classroom settings did not produce different effects than the pullout programs. As in the other analyses, better-implemented programs showed larger effects. Three treatment modalities were tested in this model: anger management, social cognitive programs, and counseling. None was significantly more effective than the others.

Summary And Conclusions

The primary issue addressed in this research paper is the effectiveness of programs for preventing or reducing such aggressive and disruptive behaviors as aggression, fighting, bullying, name-calling, intimidation, acting out, and disruptive behaviors occurring in school settings. The main finding is that, overall, the school-based programs that have been studied by researchers (and often developed and implemented by them as well) generally have positive effects for this purpose. A secondary purpose of this research paper was to use new analytic techniques that allow multiple dependent effect sizes from the same study to be included in an analysis (Hedges et al. 2010). Several differences from our original work are noteworthy. The mean effect size using the new techniques for universal programs was 0.16, while it was 0.21 in Wilson and Lipsey (2007). The mean effect size for the pullout programs was 0.37, considerably larger than the 0.29 published in Wilson and Lipsey (2007). It is well known that selecting a single effect size from each study is not ideal in meta-analysis; this reanalysis dramatizes how much difference such selections can make to results and conclusions.
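The key idea behind robust variance estimation (Hedges et al. 2010) is that every effect size from a study can enter the analysis, with the dependence among them handled by the variance estimator rather than by discarding effect sizes. The sketch below shows the intercept-only case with the "correlated effects" weights described in that paper: each effect in study j receives weight 1/(k_j(v̄_j + τ²)), and the standard error comes from a sandwich estimator that sums weighted residuals within each study before squaring. It is a simplified illustration, not the authors' code: τ² is passed in rather than estimated, the small-sample corrections are omitted, and the function name and toy data are hypothetical.

```python
import math
from collections import defaultdict


def rve_intercept(study_ids, effects, variances, tau2=0.0):
    """Intercept-only robust variance estimation with correlated-effects weights.

    Every effect size in study j gets weight w_j = 1 / (k_j * (mean_v_j + tau2)),
    where k_j is the number of effect sizes in the study and mean_v_j is their
    average sampling variance. Returns (mean, robust_se).
    """
    groups = defaultdict(list)
    for sid, y, v in zip(study_ids, effects, variances):
        groups[sid].append((y, v))

    weights = {}
    for sid, rows in groups.items():
        k = len(rows)
        mean_v = sum(v for _, v in rows) / k
        weights[sid] = 1.0 / (k * (mean_v + tau2))

    total_w = sum(weights[sid] * len(rows) for sid, rows in groups.items())
    mean = sum(weights[sid] * y for sid, rows in groups.items() for y, _ in rows) / total_w

    # Sandwich estimator: residuals are summed within each study before squaring,
    # so correlated effect sizes from the same study are not treated as independent.
    meat = sum(
        (weights[sid] * sum(y - mean for y, _ in rows)) ** 2
        for sid, rows in groups.items()
    )
    return mean, math.sqrt(meat) / total_w


# Hypothetical toy data: study "A" reports two outcomes, study "B" reports one
mean, se = rve_intercept(["A", "A", "B"], [0.2, 0.4, 0.1], [0.04, 0.04, 0.02])
print(f"mean={mean:.3f}, robust SE={se:.3f}")
```

Because each study's weight is split across its k_j effect sizes, a study reporting many outcomes does not dominate the mean simply by contributing more rows.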

The most common and most effective approaches are universal programs delivered to all the students in a classroom or school and pullout programs targeted for at-risk children who participate in the programs outside of their regular classrooms. The universal programs that were included in the analysis mainly used social cognitive approaches, anger management, and social skills training, and, as in the original analysis, all strategies appeared about equally effective. In the 2007 meta-regression model for the universal programs, only SES was significant. The new analyses found that implementation quality and the type of delivery personnel were also important predictors of treatment effects.

Cognitively oriented approaches (both social cognitive programs and anger management) and social skills training were also the most frequent among the pullout programs, but many used behavioral or counseling treatment modalities as well. In the original analysis, behavioral strategies were associated with larger treatment effects. In the new analysis, however, variation in treatment modality within the pullout format was not associated with differential effects. This suggests that the pullout program format itself may be what matters most, though it does not rule out the possibility that the treatment modalities used with that format are especially effective ones. It is also possible that the larger effect size for the pullout programs was due to the generally higher-risk students participating in them. Higher-risk students generally show larger gains from psychosocial programming than lower-risk students, so the larger effect size for the more targeted pullout programs may be partly due to students with more serious behavior problems having greater room for improvement.

The mean effect sizes of 0.16 and 0.37 for the universal and pullout programs, respectively, represent a decrease in aggressive/disruptive behavior that is not only statistically significant but likely to be of practical significance to schools as well. Suppose, for example, that approximately 20 % of students are involved in some version of such behavior during a typical school year. This is a plausible assumption according to the Indicators of School Crime and Safety: 2004, which reports that 13 % of students age 12–18 were in a fight on school property, 12 % had been the targets of hate-related words, and 7 % had been bullied (DeVoe et al. 2004). Effect sizes of 0.16 and 0.37 represent reductions from a base rate prevalence of 20 % to about 14 % and 8 %, respectively, that is, reductions of about 30 % and 60 % in aggressive and disruptive behavior. The programs of above average effectiveness, of course, produce even larger decreases.
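The text does not say how effect sizes were translated into prevalence, but the 14 % and 8 % figures agree with an arcsine (Cohen's h) conversion, in which the effect size is subtracted from 2·arcsin(√p) for the base rate and the result is transformed back to a proportion. The sketch below assumes that conversion and treats the standardized mean difference as if it were h, which is an approximation rather than an exact equivalence; the function name is hypothetical.

```python
import math


def prevalence_after_effect(base_rate, h):
    """Prevalence implied by lowering a base rate by an arcsine effect size h.

    Uses Cohen's h scale: phi = 2 * asin(sqrt(p)); the treated group is
    assumed to sit h units below the control group on that scale.
    """
    phi_control = 2.0 * math.asin(math.sqrt(base_rate))
    phi_treated = phi_control - h
    if phi_treated < 0:
        raise ValueError("effect size too large for this base rate")
    return math.sin(phi_treated / 2.0) ** 2


for es in (0.16, 0.37):
    p = prevalence_after_effect(0.20, es)
    print(f"ES={es}: {p:.3f} ({1 - p / 0.20:.0%} reduction)")
```

With a 20 % base rate this prints 0.140 (30% reduction) for 0.16 and 0.076 (62% reduction) for 0.37.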

The substantial similarity in effects across the different types of programs within the universal and pullout formats suggests that schools may choose from a range of such programs with some confidence that whatever they pick will be about as effective as any other choice. In the absence of evidence that one modality is significantly more effective at reducing aggressive and disruptive behavior than another, schools might benefit most by considering ease of implementation, compatibility with school culture, and teacher and administrator training and preferences when selecting programs, and by focusing on implementation quality once programs are in place. Our coding of implementation quality, albeit crude, was associated with larger effect sizes in all four treatment formats, although the relationship was not statistically significant for the comprehensive programs. A very high proportion of the studies in this meta-analysis, however, were research or demonstration projects in which the researchers had a relatively large direct influence on the service delivery. Schools adopting these programs without such engagement may have difficulty attaining comparable program fidelity, a concern reinforced by evidence of frequent weak implementation in actual practice (Gottfredson and Gottfredson 2002). The best choice of a universal or pullout program for a school, therefore, may be the one its staff are most confident they can implement well. In addition, for the universal programs, teachers and researchers as delivery personnel were generally more effective than nonschool personnel. Selecting programs that fit with teachers’ interests, training, and existing curricula may help to keep implementation fidelity as high as possible.

Another significant factor that cut across the universal and targeted pullout programs was the relationship of student characteristics to program effects. Larger treatment effects were achieved with higher-risk students, even among the already higher-risk samples in the targeted pullout programs. For the universal programs, the greatest benefits appeared for students from economically disadvantaged backgrounds and for groups with larger proportions of boys. Universal programs did not specifically select students with individual risk factors or behavior problems, though many students were of low socioeconomic status and there were most likely some behavior problem students in the classrooms that received universal interventions. These findings reinforce the fact that a program cannot have large effects unless there is sufficient problem behavior, or risk for such behavior, to allow for significant improvement.

The programs in the category we called comprehensive, in contrast to the universal and pullout programs, were surprisingly ineffective.

On the face of it, combinations of universal and pullout treatment elements and multiple intervention strategies would be expected to be at least as effective as less multifaceted programs, if not more so. The small mean effect size for the comprehensive programs raises questions about their value. It should be noted, however, that there were few programs in this category and that most of these were long-term school-wide programs. It may be that this broad scope dilutes the intensity and focus of the programs, so that students engage with them less than with the programs in the universal and pullout categories. The comprehensive programs also suffered from implementation difficulties more frequently than the other formats (see Table 3), although implementation was not significantly associated with effect size in either the reanalysis or the original analysis. However, the reanalysis found that the comprehensive programs with the largest number of treatment components were the least effective in this category, a finding that may itself indicate implementation problems. This is an area that clearly warrants further study.

The most distinctive programs in our collection were those for students in atypical school settings. The mean effect size for these programs was modest, though statistically significant. As in the original analysis, implementation was important. This modest effect is somewhat anomalous. One of the signal characteristics of students in these settings is a relatively high level of behavior problems or risk for such problems; thus, there should be ample room for improvement. On the other hand, the special school settings in which they are placed can be expected to already have some programming in place to deal with such problems. The control conditions in these studies would thus reflect the effects of that practice-as-usual situation, leaving less value to be added by additional programming of the sort examined in the studies included here. Alternatively, the add-on programs studied in these cases may have been weaker or less focused than those found in the pullout format, or the more serious behavior problems of students in these settings may be more resistant to change. These issues, too, warrant further study.

A particular concern of our original meta-analysis (Wilson et al. 2003) was the smaller effects of routine practice programs in comparison to those of the more heavily represented research and demonstration programs. Routine practice programs are those implemented in a school on an ongoing routine basis and evaluated by a researcher with no direct role in developing or implementing the program. Research and demonstration programs are mounted by a researcher for research or demonstration purposes with the researcher often being the program developer and heavily involved in the implementation of the program, though somewhat less so for demonstration programs. In the present meta-analysis, somewhat more studies of routine programs were included, and it is reassuring that their mean effect sizes were not significantly smaller. The zero-order correlation between routine practice and effect sizes was only large enough to be included in the meta-regression models for the pullout programs, and it did not turn out to have a significant relationship with effect size in the model in which it was tested. However, only 35 of the 283 studies in this meta-analysis examined routine practice programs. This number dramatizes how little evidence exists about the actual effectiveness, in everyday real-world practice, of the kinds of school-based programs for aggressive/disruptive behavior represented in this review.

Bibliography:

- Brener ND, Martindale J, Weist MD (2001) Mental health and social services: results from the school health policies and programs study. J Sch Health 71:305–312

- DeVoe JF, Peter K, Kaufman P et al (2004) Indicators of school crime and safety: 2004 (NCES 2005-002/NCJ 205290). U.S. Departments of Education and Justice, Washington, DC

- Dolan LJ, Kellam SG, Brown CH, Werthamer-Larsson L, Rebok GW et al (1993) The short-term impact of two classroom-based preventive interventions on aggressive and shy behaviors and poor achievement. J Appl Dev Psychol 14:317–345

- Gottfredson DC, Gottfredson GD (2002) Quality of school-based prevention programs: results from a national survey. J Res Crime Delinquency 39:3–35

- Gottfredson GD, Gottfredson DC, Czeh ER, Cantor D, Crosse S, Hantman I (2000) National study of delinquency prevention in schools. Final Report, Grant No. 96-MU-MU-0008. Gottfredson Associates, Inc., Ellicott City, MD. Available from: www.gottfredson.com

- Gresham FM, Nagle RJ (1980) Social skills training with children: responsiveness to modeling and coaching as a function of peer orientation. J Consult Clin Psychol 48:718–729

- Hedges LV, Olkin I (1985) Statistical methods for meta-analysis. Academic Press, San Diego

- Hedges LV, Tipton E, Johnson MC (2010) Robust variance estimation in meta-regression with dependent effect size estimates. Res Synth Methods 1:39–65

- Lipsey MW, Wilson DB (2001) Practical meta-analysis. Sage, Thousand Oaks

- Lochman JE, Wells KC (2002) The coping power program at the middle-school transition: universal and indicated prevention effects. Psychol Addict Behav 16(4S):S40–S54

- Nafpaktitis M, Perlmutter BF (1998) School-based early mental health intervention with at-risk students. Sch Psychol Rev 27:420–432

- Shure MB, Spivack G (1980) Interpersonal problem solving as a mediator of behavioral adjustment in preschool and kindergarten children. J Appl Dev Psychol 1:29–44

- Wilson SJ, Lipsey MW (2007) School-based interventions for aggressive and disruptive behavior: update of a meta-analysis. Am J Prev Med 33(2S):S130–S143

- Wilson SJ, Lipsey MW, Derzon JH (2003) The effects of school-based intervention programs on aggressive and disruptive behavior: a meta-analysis. J Consult Clin Psychol 71:136–149
