This sample Thinking and Problem Solving Research Paper is published for educational and informational purposes only.

Thinking is a broad term applied to the representation of concepts and procedures and the manipulation of information; acts such as reasoning, decision making, and problem solving are part of thinking. This research paper focuses on problem solving as one of the most complex intellectual acts. Humans must solve many problems each day. For example, the relatively simple act of driving to school or work requires us to solve problems related to operating a vehicle and determining the best route to our destination.

Researchers have studied the nature of problem solving for many years. This research paper covers the major theories and research findings generated over the last 100 years of problem-solving research. It summarizes the methods of inquiry in this area in three parts: (a) behaviorist methods, (b) introspective methods, and (c) methods using computer modeling. Applications of these findings are discussed in the context of the development of expertise and the use of expert problem-solving strategies. Finally, the paper concludes with a discussion of future directions for research on human problem solving.

Definition of Problem Solving

Simply defined, problem solving is the act of determining and executing how to proceed from a given state to a desired goal state. Consider the common task of driving to work or school. To get from the given state, sitting in your dorm room, apartment, or home, to the desired goal state, sitting in class or in your office, requires you to solve problems ranging from the location of your keys to the physical execution of backing your car out of its parking space, observing traffic laws, choosing a route, negotiating traffic, and arriving at your destination in a timely manner. The first problem, locating your keys, can be broken down further into several cognitive acts: (a) perceiving that you need your keys to start the car, (b) generating ideas for where to search for your keys, (c) conducting a visual search for your keys, and (d) recognizing your keys when you see them. You can divide this relatively simple problem further into subproblems related to the retrieval of information from memory, the recognition of visual stimuli, and so forth. Thus, it is evident why psychologists consider problem solving one of the most complex intellectual acts. Although we solve many problems per day, the difficult and unfamiliar problems, such as how to complete the algebra homework assigned by a mathematics professor, make us notice how we solve problems; we tend to overlook the more mundane problem solving that makes up most of our day.

The following paragraphs present a historical overview of the theories that researchers have applied to the study of human problem solving. When reading these theories, notice that the theorists make different assumptions about human problem solving and use different techniques to isolate the most basic processes involved in this activity. I discuss their methodologies in more detail later.

Theory and Modeling

Gestalt Theory

Early in the 20th century, a group of German psychologists, most notably Max Wertheimer, Kurt Koffka, and Wolfgang Köhler, noted that our perception of environmental stimuli is marked by a tendency to organize what we see in predictable ways. Although the word “Gestalt” has no direct English translation, it is loosely translated as “form,” “shape,” or “whole.” The Gestalt theorists believed, and demonstrated, that humans tend to perceive stimuli as integrated, complete wholes. From this belief followed their assertion that the whole of an object is greater than the sum of its parts; something new or different is created when all the parts of a perception are assembled.

Perceptual Tendencies

Building on the basic premise that the whole is greater than the sum of its parts and the notion that we perceive visual stimuli in predictable ways, the Gestalt theorists proposed several perceptual tendencies that determine how we group the parts of a stimulus. These tendencies are (a) proximity: elements tend to be grouped together according to their nearness; (b) similarity: items similar in some respect or characteristic tend to be grouped together; (c) closure: items are grouped together if they tend to complete some known entity (e.g., a cube or a face); and (d) simplicity: items are organized into simple figures according to symmetry, regularity, and smoothness. These perceptual tendencies, or principles of organization as the Gestalt theorists called them, relate to problem solving in that the perception of an individual stimulus or of an entire problem is greater than its individual components. That is why, when someone considers the task of driving to work or school, the problem as a whole differs from its individual parts, down to the perception of a small pile of metal as one’s car keys. Essentially, the mind moves beyond the discrete parts of a problem to recognize the problem as a whole.

Insight

In addition to proposing the various perceptual tendencies that affect problem solving, research on animal problem solving conducted by Wolfgang Köhler provided support for the notion of insight in problem solving. In one of his most famous demonstrations of animal problem solving, Köhler (1927) studied the problem-solving ability of a chimpanzee named Sultan. From his cage, Sultan used a long pole to reach through the bars to grab and drag a bunch of bananas to him. When faced with a more difficult problem, two poles that individually were too short to reach the bananas outside of the cage, Sultan sulked and then suddenly put the poles together by placing one pole with a smaller diameter inside the other pole with a larger diameter. The resulting combined pole was long enough to reach the bananas. Köhler argued that Sultan’s ability to solve this problem demonstrated insight, a sudden solution to a problem as the result of some type of discovery. True to Gestalt principles of organization, Köhler further argued that animals notice relations among objects, not necessarily the individual objects.

In summary, the principles of organization proposed by the Gestalt theorists provide some information about how animals and humans perceive problems and their component parts. The work of the Gestalt theorists, however, marked only the beginning of almost 100 years of research on problem solving. A more recent theory, problem space theory, defines what a problem is and proposes a simple framework for considering the act of problem solving.

Problem Space Theory

Similar to the Gestalt psychologists, Allen Newell and Herbert Simon (1972) emphasized the importance of the whole problem, which they called the problem space. The problem space, according to Newell and Simon, represents all possible configurations a problem can take. Problem solving begins with an initial state, the given situation and resources at hand, and ends with the goal state, a desired state of affairs generally associated with an optimal solution to a problem. To get from the initial state to the goal state, a problem solver must use a set of operations, tools or actions that change the person’s current state and move it closer to the goal state. Conceptualized in this way, problem solving seems straightforward. However, Newell and Simon proposed that this relatively simple process has limitations, called path constraints. Path constraints essentially rule out some of the operators and methods that a problem solver might otherwise use to reach a solution.

Based on Newell and Simon’s (1972) description of the problem space, we can describe problem solving as a search for the set of operations that moves a person most efficiently from the initial state to the goal state. This search is the heart of problem solving. When a problem is well defined, the problem space is clearly identified and the search for the best set of operations is relatively straightforward. However, many of the problems we solve are ill defined; we do not recognize, or cannot retrieve from memory, the initial state, the goal state, or the set of operations. Ill-defined problems make the search for the set of operations challenging. Researchers have proposed several methods of selecting operators, including conducting an exhaustive search and using various problem-solving heuristics.

Exhaustive Search

One logical way to find the best set of operations is to engage in a systematic and exhaustive search of all possible solutions. Theorists call this type of search a brute force search. When considering how to drive to work or school from your home or apartment, you have a number of options for making your way out of your neighborhood, presumably to a thoroughfare that takes you closer to your destination. Some of those options are more direct routes to the thoroughfare than others; regardless, they are all options. A brute force search assumes that the problem solver considers every possible option. Thus, although a brute force search eventually allows the problem solver to find the best solution, it is easy to see how it can be a time-consuming process.
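To make the idea of an exhaustive search concrete, the following Python sketch enumerates every route through a small, hypothetical road network and only then picks the shortest one. The intersection names and travel times in `roads` are invented for illustration, and treating "best" as "fewest minutes" is an assumption; the point is simply that a brute force search examines every option before choosing.

```python
# A minimal brute force (exhaustive) search over a hypothetical road network.
# Each key is an intersection; each value maps neighboring intersections to
# travel time in minutes (invented numbers, for illustration only).
roads = {
    "home": {"elm": 2, "oak": 4},
    "elm": {"home": 2, "oak": 1, "main": 7},
    "oak": {"home": 4, "elm": 1, "main": 3},
    "main": {"elm": 7, "oak": 3, "work": 5},
    "work": {"main": 5},
}


def all_routes(graph, start, goal, path=None):
    """Yield every route from start to goal that never revisits an intersection."""
    path = (path or []) + [start]
    if start == goal:
        yield path
        return
    for nxt in graph[start]:
        if nxt not in path:
            yield from all_routes(graph, nxt, goal, path)


def route_time(graph, route):
    """Total travel time along a route."""
    return sum(graph[a][b] for a, b in zip(route, route[1:]))


def brute_force_best(graph, start, goal):
    """Consider every route, then return the fastest one and how many were examined."""
    routes = list(all_routes(graph, start, goal))
    best = min(routes, key=lambda r: route_time(graph, r))
    return best, len(routes)


best, examined = brute_force_best(roads, "home", "work")
print(best, route_time(roads, best), examined)
# ['home', 'elm', 'oak', 'main', 'work'] 11 4
```

Even in this tiny network the search has to check four complete routes before it can be sure it has the fastest one. Because the number of possible routes can grow very quickly as intersections are added, the sketch also shows why a brute force search soon becomes time-consuming.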

Heuristics

Heuristics offer another way to identify the set of operations and most efficient path through a problem space. Theorists loosely define heuristics as basic strategies, or rules of thumb, that often lead a problem solver to the correct solution. As you will see in the following paragraphs, some general problem-solving heuristics include the hill-climbing strategy, means-ends analysis, and working backward.

The hill-climbing strategy is what the name implies. Consider standing at the bottom of a hill; your goal state is to make it to the top of the hill. To ascend the hill, you look for the combination of footsteps (and ultimately a path) that takes you to the top of the hill in the most direct manner. Each step should move you closer to the goal. This last point also marks the most important constraint of this strategy. That is, to solve some problems, it is sometimes necessary to backtrack or to choose an operation that moves you away from the goal state. In such cases, you cannot use the hill-climbing strategy.

Students of psychology often compare the hill-climbing strategy to the problem of earning a college degree. A student begins at an initial state with no college credits. The goal, of course, is to complete all of the required credits for a particular degree program. Each semester or quarter, students complete one or more courses among those required for their degree programs, thereby making forward progress toward the goal state and graduation. Of course, one must acknowledge that some students have not applied the hill-climbing strategy consistently to this particular problem. Changing majors, failing courses, and taking unnecessary electives are all examples of operations that do not meet the forward-progress criterion of the hill-climbing strategy.
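The main constraint of hill climbing can be sketched in a few lines of Python. In this toy version, which is an illustrative assumption rather than a model from the research literature, each position on a one-dimensional "hill" has a height, and the climber only ever steps to a higher neighbor. Because it refuses any step downhill, it can stop on a smaller peak even though a higher one exists farther along.

```python
def hill_climb(heights, start):
    """Greedy hill climbing on a 1-D landscape.

    From the current position, step to whichever neighbor is higher; stop as
    soon as no neighbor is higher than where we stand. Returns the visited path.
    """
    path = [start]
    current = start
    while True:
        neighbors = [i for i in (current - 1, current + 1) if 0 <= i < len(heights)]
        if not neighbors:
            return path
        best = max(neighbors, key=lambda i: heights[i])
        if heights[best] <= heights[current]:
            # No available step moves closer to the goal, so the strategy stops,
            # even if a higher peak lies beyond a dip.
            return path
        current = best
        path.append(current)


# Hypothetical landscape: a small peak (height 5) and a taller one (height 9).
landscape = [1, 3, 5, 4, 2, 6, 9, 7]
print(hill_climb(landscape, 0))  # [0, 1, 2]  (stuck on the small peak)
print(hill_climb(landscape, 4))  # [4, 5, 6]  (reaches the tallest peak)
```

Reaching the taller peak from position 0 would require walking downhill through positions 3 and 4 first, which is exactly the kind of backtracking the hill-climbing strategy cannot accommodate.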

A second strategy that offers more flexibility in terms of executing the set of operations is known as means-ends analysis. Researchers characterize means-ends analysis as the evaluation of the problem space by comparing the initial state and goal state and attempting to identify ways to reduce the difference between these two states. When evaluating the differences between the initial state and the goal state, the problem solver often breaks the overall problem down into subproblems with subgoals. Solving the subproblems and meeting the subgoals allows problem solvers to reduce the overall difference between the current state and the goal state until the two states are the same.

We can apply means-ends analysis to the problem of earning a college degree. Most college degrees consist of a series of requirements that are grouped into courses that meet general education requirements, courses that meet the major requirements, courses that meet minor requirements, and courses that serve as electives. By breaking the college degree problem down into a series of subgoals, a student may choose to take courses to satisfy the requirements of each grouping in turn. For example, it is not uncommon for college students to take all courses for the general education requirements before beginning courses required for the major or minor. In summary, the assumptions of means-ends analysis allow this strategy to accommodate the need for a choice or an operation that appears to be a lateral move or a move away from the goal state, but one that eventually helps the problem solver meet a subgoal of the overall problem.
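The difference-reduction logic of means-ends analysis can be sketched in Python, loosely in the spirit of Newell and Simon's General Problem Solver but much simplified and not their program. States are sets of facts; each hypothetical operator lists the facts it requires (preconditions), adds, and removes. To remove a difference between the current state and the goal, the sketch picks an operator that would produce the missing fact and, if that operator cannot be applied yet, treats its preconditions as a subgoal. The operator names, the facts, and the depth limit are all assumptions made for this example, which uses the driving-to-work problem rather than the college degree.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Operator:
    """A hypothetical operator: what it requires, adds, and removes."""
    name: str
    pre: frozenset
    add: frozenset
    delete: frozenset = frozenset()


def achieve_all(state, goals, ops, depth=0, max_depth=10):
    """Reduce each difference between state and goals; return (state, plan) or None."""
    plan = []
    for goal in sorted(goals):          # fixed order keeps the output deterministic
        if goal in state:
            continue                    # no difference to reduce
        result = achieve_one(state, goal, ops, depth, max_depth)
        if result is None:
            return None
        state, steps = result
        plan += steps
    # A later step may have undone an earlier subgoal, so check again.
    return (state, plan) if goals <= state else None


def achieve_one(state, goal, ops, depth, max_depth):
    """Find an operator that produces `goal`, first achieving its preconditions."""
    if depth > max_depth:
        return None                     # give up rather than recurse forever
    for op in ops:
        if goal not in op.add:
            continue                    # this operator does not reduce the difference
        result = achieve_all(state, op.pre, ops, depth + 1, max_depth)  # subgoal
        if result is None:
            continue
        new_state, steps = result
        new_state = (new_state - op.delete) | op.add
        return new_state, steps + [op.name]
    return None


ops = [
    Operator("find_keys", frozenset({"at_home"}), frozenset({"have_keys"})),
    Operator("get_in_car", frozenset({"at_home", "have_keys"}), frozenset({"in_car"})),
    Operator("drive_to_work", frozenset({"in_car"}), frozenset({"at_work"}),
             frozenset({"at_home"})),
]

final_state, plan = achieve_all(frozenset({"at_home"}), frozenset({"at_work"}), ops)
print(plan)  # ['find_keys', 'get_in_car', 'drive_to_work']
```

Finding the keys is not itself the goal; it becomes worth doing only because it removes an obstacle to a subgoal (getting into the car). This mirrors how means-ends analysis accepts steps that do not look like direct progress toward the goal state.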

A third heuristic is known as working backward. Like the hill-climbing strategy, working backward is self-explanatory. A person solves a problem by working backward from the goal state to the initial state. Problem solvers use this heuristic most often when they know the goal state but not the initial state. An example of when to use such a strategy is when you check your bank balance at the end of the day. If you know all of the transactions that have posted during the day, you can work backward from your ending account balance to determine your account’s beginning balance for the day.
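The bank-balance example is simple arithmetic: starting from the known ending balance, undo each posted transaction in reverse order until you arrive at the beginning balance. The amounts below are invented for illustration, and storing money as integer cents is a design choice to avoid floating-point rounding.

```python
def beginning_balance(ending_cents, transactions_cents):
    """Work backward from the ending balance by undoing each transaction.

    Deposits are positive and withdrawals negative; undoing a transaction
    means subtracting it. Processing in reverse mirrors working backward.
    """
    balance = ending_cents
    for amount in reversed(transactions_cents):
        balance -= amount
    return balance


# Hypothetical day: a $500.00 deposit, then $45.99 and $120.00 withdrawals.
transactions = [50_000, -4_599, -12_000]
start = beginning_balance(125_000, transactions)  # ending balance: $1,250.00
print(f"${start / 100:,.2f}")  # $915.99
```

Running the day forward from $915.99 through the same three transactions returns $1,250.00, which confirms the backward solution.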

Assuming that we can define problems in terms of a space with an initial state, a goal state, and a set of operations, a critical aspect of problem solving involves the search for the set of operations. Individuals search for the optimal set of operations to solve problems using a variety of methods. The choice between an exhaustive, brute force search and one of the heuristic strategies is constrained by the characteristics of the problem. However, there is another source of information that individuals bring to bear upon the problem-solving process: background knowledge. Research on problem solving has proposed several ways that individuals use what they already know to devise solutions to problems. The following paragraphs describe how we use analogies to solve problems.

Theories of Analogical Reasoning

Occasionally when solving a problem, some aspect of the problem reminds us of another problem we have solved before. The previously solved problem can provide us with a model for how to solve the problem in front of us. That is, we draw an analogy between one problem and another. For example, you may solve the problem of driving to work or school regularly. When faced with the problem of driving to the grocery store, you may draw an analogy to that familiar problem and rely on the strategies you used to get from home to work or school to decide how to get from home to the grocery store.

Using analogies to solve problems seems easy. However, most people fail to use them with any regularity. Research by Mary Gick and Keith Holyoak (1980) has suggested that analogies can assist problem solving, but that few individuals use them unless they are prompted to do so. Gick and Holyoak (1980) used Duncker’s (1945) tumor problem to understand how individuals use analogies to solve problems:

Suppose you are a doctor faced with a patient who has a malignant tumor in his stomach. To operate on the patient is impossible, but unless the tumor is destroyed, the patient will die. A kind of ray, at a sufficiently high intensity, can destroy the tumor. Unfortunately, at this intensity the healthy tissue that the rays pass through on the way to the tumor will also be destroyed. At lower intensities the rays are harmless to healthy tissue, but will not affect the tumor. How can the rays be used to destroy the tumor without injuring the healthy tissue?

The solution to the problem is to divide the ray into several low-intensity rays so that no single ray will destroy healthy tissue. The rays can then be placed at different points around the body and focused on the tumor. Their combined effect will destroy the tumor without destroying the healthy tissue. Before reading the tumor problem, however, Gick and Holyoak asked one group of their participants to read a different problem, known as the attack dispersion story:

A dictator ruled a small country from a fortress. The fortress was situated in the middle of the country and many roads radiated outward from it, like spokes on a wheel. A great general vowed to capture the fortress and free the country of the dictator. The general knew that if his entire army could attack the fortress at once it could be captured. But a spy reported that the dictator had planted mines on each of the roads. The mines were set so that small bodies of men could pass over them safely, since the dictator needed to be able to move troops and workers about; however, any large force would detonate the mines. Not only would this blow up the road, but the dictator would destroy many villages in retaliation. A full-scale direct attack on the fortress therefore seemed impossible.

The general, however, was undaunted. He divided his army up into small groups and dispatched each group to the head of a different road. When all was ready he gave the signal, and each group charged down a different road. All of the small groups passed safely over the mines, and the army then attacked the fortress in full strength. In this way, the general was able to capture the fortress.

Gick and Holyoak found that roughly 76 percent of participants who read the attack dispersion story before reading Duncker’s tumor problem drew the appropriate analogy and solved the tumor problem. When the researchers gave participants a hint that the attack dispersion solution might help them solve the tumor problem, 92 percent produced the correct solution. Because a simple hint still raised the success rate, it seems that individuals may not use analogies to solve problems as readily as we might assume.

Researchers have proposed several theories to explain analogical reasoning. The best-known theory, structure mapping theory, proposes that the use of analogies depends on mapping elements from a source to a target (Gentner, 1983). The mapping process focuses not only on elements (objects) but also on the relations among elements. According to structure mapping theory, individuals must search their background knowledge for sources that are similar to the target. Then, the individual must determine whether there is a good match between what is retrieved and the target. Problem solving by analogy, therefore, requires us to retrieve an old solution from memory and to recognize whether it fits the current problem.
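A toy illustration may help clarify what it means to map relations, not just objects, from a source to a target. The Python sketch below is a deliberately simplified brute-force matcher, not Gentner's own computational model: it describes each story as a set of (relation, object, object) triples, tries every one-to-one correspondence between source and target objects, and keeps the correspondence under which the most source relations reappear in the target. The relation names are hypothetical simplifications of the attack dispersion story and the tumor problem.

```python
from itertools import permutations

# Hypothetical, simplified relational descriptions of the two problems.
fortress_story = {
    ("divides", "general", "army"),
    ("converges_on", "army", "fortress"),
    ("limits", "mines", "army"),
}
tumor_problem = {
    ("divides", "doctor", "rays"),
    ("converges_on", "rays", "tumor"),
    ("limits", "healthy_tissue", "rays"),
}


def objects(relations):
    """All objects that appear as arguments in a set of relations."""
    return sorted({x for _, a, b in relations for x in (a, b)})


def best_mapping(source, target):
    """Try every one-to-one object mapping; score by how many relations carry over."""
    src, tgt = objects(source), objects(target)
    best, best_score = None, -1
    for perm in permutations(tgt, len(src)):
        mapping = dict(zip(src, perm))
        score = sum((rel, mapping[a], mapping[b]) in target for rel, a, b in source)
        if score > best_score:
            best, best_score = mapping, score
    return best, best_score


mapping, score = best_mapping(fortress_story, tumor_problem)
print(score)  # 3
print(mapping)
```

Because the score counts shared relations rather than shared surface features, the army ends up corresponding to the rays even though the two have nothing physically in common, which is the core intuition of structure mapping. Trying every correspondence is feasible only for tiny descriptions; it is used here for transparency.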

Keith Holyoak and Paul Thagard (1989) offered a different view of analogical reasoning. They proposed multiconstraint theory, which works within the framework of structure mapping theory and describes the factors that limit the analogies that problem solvers construct. Multiconstraint theory proposes three such factors: the ease with which an individual uses an analogy to solve a problem depends upon similarities in structure, meaning, and purpose between the source problem and the target problem. Structural similarity refers to the extent to which the source problem and target problem possess a similar structure. Similar structures allow problem solvers to map elements, and relations among elements, from the source problem to the target problem easily. Similarity in meaning further eases a problem solver’s ability to perceive how the solution for one problem may apply to another. In Gick and Holyoak’s (1980) research, for example, the fortress and troops were similar in meaning to the tumor and rays. Finally, similarity of purpose refers to the extent to which an individual recognizes the similarities between the goals of a source problem and a target problem. When you use an analogous solution from driving to work or school to solve the problem of driving to the grocery store, the two problems are highly similar in purpose, structure, and meaning.

In summary, researchers have proposed and applied several theories to the act of problem solving. The theories discussed here are not necessarily mutually exclusive. Rather, each can be applied to a different aspect of problem solving. Problem solving begins with the perception of the problem. According to the Gestalt theorists, we perceive problems like objects, as a whole. This tendency may affect our ability to represent accurately the initial state, goal state, and the set of operations needed to solve a problem. Our perceptual tendencies may further constrain our ability to choose a heuristic or recognize an appropriate analogous solution. Research in the area of problem solving has focused on discovering the conditions under which the assumptions of the theories presented here apply.

I describe the methodological traditions that researchers have used in the following section.

Methods

Studying problem solving is challenging. When an individual generates a solution to a problem, it is sometimes easy to observe evidence of that success. For example, we can be sure that people have solved the problem of driving to work or school if they arrive at their destination in a timely manner. However, what happens between the initial state and the goal state is what has interested researchers, and it has proved more difficult to study. Accordingly, researchers have used several methods for observing problem-solving processes (e.g., planning, retrieving problem-specific information from memory, choosing operators) that may otherwise be unobservable.

As the discussion of Gestalt theory illustrates, early studies of problem solving focused on animal learning and problem solving. Although findings from animals may not always generalize to humans, researchers took notice of the structure of the experiments, the problems used, and the observations made. Because most real-life problem solving is quite complex and involves numerous subproblems, researchers sought to simplify problems so that they could isolate and observe various aspects of the problem-solving process.

Building on the methods used in animal research, a large portion of the problem-solving literature uses behaviorist research methods. Specifically, investigators provide research participants with structured problems and then observe their problem-solving behaviors, record the number of steps they use to solve a problem, and determine whether they generate the correct solution.

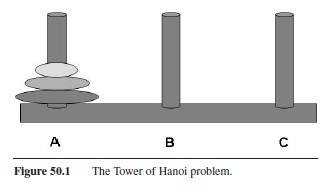

Investigators have often used the Tower of Hanoi problem to study problem solving. The Tower of Hanoi problem was invented by French mathematician Edouard Lucas in 1883. The most common version of this problem, the three-disk problem, requires the problem solver to move the disks from peg 1 (the initial state) to peg 3 (the goal state) given two conditions: (a) only one disk may be moved at a time, and (b) a larger disk may never be placed on top of a smaller disk (see Figure 50.1).

Figure 50.1 The Tower of Hanoi problem.

Figure 50.1 The Tower of Hanoi problem.
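Because the Tower of Hanoi is precisely defined, its optimal solution can be stated as a short recursive procedure: move the top n - 1 disks out of the way onto the spare peg, move the largest disk to the goal peg, then move the n - 1 disks back on top of it. The Python sketch below uses this standard textbook algorithm, not a model of how research participants actually approach the puzzle, to generate the moves and to check them against the two rules stated above. It shows why the three-disk version needs at least 7 moves and the four-disk version at least 15 (in general, 2^n - 1).

```python
def hanoi(n, source=1, target=3, spare=2):
    """Return the shortest list of (from_peg, to_peg) moves for n disks."""
    if n == 0:
        return []
    return (hanoi(n - 1, source, spare, target)     # clear the smaller disks away
            + [(source, target)]                    # move the largest disk
            + hanoi(n - 1, spare, target, source))  # restack the smaller disks on it


def follows_rules(n, moves):
    """Replay moves on pegs 1-3 and confirm both rules hold and the goal is reached."""
    pegs = {1: list(range(n, 0, -1)), 2: [], 3: []}  # disk n at the bottom of peg 1
    for a, b in moves:
        if not pegs[a]:
            return False                               # nothing to move
        if pegs[b] and pegs[b][-1] < pegs[a][-1]:
            return False                               # larger disk onto a smaller one
        pegs[b].append(pegs[a].pop())                  # one disk at a time
    return pegs[3] == list(range(n, 0, -1))


three = hanoi(3)
print(three)          # [(1, 3), (1, 2), (3, 2), (1, 3), (2, 1), (2, 3), (1, 3)]
print(len(hanoi(4)))  # 15
```

The recursion captures the subgoal structure of the puzzle: to move the whole tower, you must first solve the smaller problem of moving the tower that sits on top of the largest disk.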

In one interesting study using the Tower of Hanoi, Fireman and Kose (2002) observed how successfully six- to eight-year-old children solved three-disk and four-disk versions of the Tower of Hanoi problem. Fireman and Kose divided their sample into three training groups and one control group. One training group practiced solving the three-disk problem, a second group received training in the correct solution, and a third group watched videotapes of their own previous attempts to solve the problem. Fireman and Kose observed the children’s ability to solve the three-disk and four-disk problems immediately after training and one week later. The results showed that children who practiced independently and those who watched videotapes of their prior attempts made the most progress in their ability to solve the problems correctly. Additionally, their gains were maintained after one week. The methodologies of this study and of the Gick and Holyoak (1980) study described earlier are characteristic of most behavior-based studies of human problem solving in that the methods and outcomes of a participant’s problem-solving efforts are recorded as evidence of unseen cognitive processes.

It is difficult to deny that problem solving is a cognitive act. Accordingly, some researchers have argued that to understand problem solving, you have to know what the individual is thinking while executing the behaviors that lead to a solution. To get this information, some of the research in problem solving has relied on introspection, or verbal “think-aloud” protocols generated by individuals while solving problems. The earliest use of introspection in psychology is attributed to Wilhelm Wundt in 1879. Later, Edward Titchener advocated the use of verbal protocols. More recently, Herbert Simon and Allen Newell defined the “think-aloud” protocol as a research methodology in which a research participant thinks aloud while engaging in some kind of cognitive activity.

The analysis of the think-aloud protocol is known as verbal protocol analysis. The primary advantage of this method is that it is the only way to know what information is in working memory during the problem-solving process. This advantage also relates to the primary limitation of this methodology. That is, individuals verbalize only thoughts and processes of which they are consciously aware. Automatic processes, which are executed outside of conscious awareness, may not be articulated in verbal protocols. Despite this limitation, the use of think-aloud protocols continues, particularly in the field of education. Instructors often ask learners to verbalize what they are thinking as they solve mathematics problems or as they read texts.

A final research methodology used in the study of problem solving involves the use of computers to model the problem-solving behavior of humans. Researchers build computer programs that mimic the strategies that human problem solvers often use to solve a problem. Then, the researchers compare the computer’s attempts to solve the problem to the existing data on how humans solve the same problem. The primary benefit of this particular research methodology is that building a computer model that fits data from human problem solvers requires the researcher to think carefully and to define every step in the problem-solving process explicitly.

The best-known example of such a computer model is Newell and Simon’s (1972) General Problem Solver. Newell and Simon compared the verbal protocols of humans who attempted to solve abstract logic problems with the steps generated by the General Problem Solver and found a high degree of similarity between the humans’ self-reported problem-solving actions and the steps of the program. Additionally, their research noted that both the humans and the computer model relied on means-ends analysis to solve the logic problems: both created subgoals to reduce the difference between the initial state and goal state of the problem space. Since Newell and Simon’s work, researchers have used computer modeling to study how people solve everyday problems such as reading single words, playing games, making medical diagnoses, and even reasoning about a particular law’s application to a case.

In conclusion, research on problem solving has relied on three methodologies: direct observation of problem-solving behaviors, think-aloud protocols, and computer modeling. For theory building, computer modeling seems to be the most promising methodology. As already stated, a computer model that effectively accounts for the behavioral or think-aloud data requires the researcher to state the specific assumptions and mechanisms required to solve various problems. This level of specificity creates opportunities for researchers to test the assumptions of theories of human problem solving generally and within specific domains (e.g., mathematics, reading).

Applications

Problem solving is an everyday occurrence. Thus, the opportunities for applying the concepts presented in this research paper are numerous. This section discusses the practical applications of what psychologists have learned from research on human and animal problem solving. Specifically, it presents strategies that research suggests an individual may use to avoid common mistakes in problem solving. Finally, it describes how experts solve problems in their domain of expertise.

Five Rules for Problem Solving

Henry Ellis (1978) identified five basic rules a person can apply to any problem-solving situation. Consider how each of these rules relates to the theory and research considered in this research paper, and try to generate your own example of when to apply each rule.

- Understand the Problem

The first step to solving any problem is to understand it. As discussed earlier in this research paper, most real-life problems are ill defined, meaning that the initial state, the goal state, or the set of operations (or possibly all three) are unknown or unclear. Successful problem solving therefore starts with a clear understanding of the problem space, so it is best to begin with a thorough analysis of the problem.

- Remember the Problem

A second step to solving problems successfully is to remember the problem and the goal throughout the problem-solving process. Often, we lose sight of the goal and instead solve a different problem, not the problem before us. A common example is forgetting a question someone asks you and instead answering a completely different question.

- Identify Alternative Hypotheses

When solving problems, it is good practice to generate multiple hypotheses, or guesses about which set of operations offers the best strategy or solution. We often make mistakes when we settle on a solution before considering all relevant and reasonable alternatives.

- Acquire Coping Strategies

Because life presents many challenges, the problems we solve daily can be difficult. Frustration is a barrier to problem-solving success, as are functional fixedness and response set. Functional fixedness is a tendency to be somewhat rigid in how we think about an object’s function. This rigidity often prevents us from recognizing novel uses of objects that may help us solve a problem. For example, our failure to recognize a butter knife’s ability to do the job of a flat-head screwdriver is an example of functional fixedness. Response set, in contrast, relates to inflexibility in the problem-solving process. Response set is our tendency to apply the same set of operations to problems that have similar initial states and goal states. However, this strategy breaks down when some aspect of the problem space changes, making the set of operations that was successful for previous problems the wrong choice. Essentially, we become set in our response to a problem. This tendency prevents us from noticing slight differences in problems that may suggest a simpler or different solution process. Thus, it is important to be aware of these common barriers to problem solving and to take steps to avoid them.

- Evaluate the Final Hypothesis

In everyday problem solving, it is important to evaluate the practicality of your solutions. For example, the shortest route from your home or apartment to work or school may also be the route that is most likely to be congested with traffic when you will be traveling. Thus, the more practical solution may be a less direct route that has less traffic. The point is that you should evaluate your final hypothesis for a solution to determine whether it is the best or only solution to the problem, given the constraints of the problem space.

Expert Problem Solving

In addition to applying any or all of the five rules above, try approaching a problem the way an expert does. An expert is someone who is highly knowledgeable about a particular domain and is very good at solving problems in that domain. Consider Tiger Woods, an expert golfer. He knows more about golf than the average person does, and he is very good at solving the most obvious golf-related problem: how to get the ball from the tee into the hole in the fewest shots. What has research uncovered about expert problem solvers?

First, experts simply know more about their domain of expertise than novices do. Researchers generally recognize the 10-year rule, the idea that individuals need roughly 10 years of experience, or 10,000 hours of practice, in a domain to achieve expertise (for a review of research on expertise, see Ericsson, Charness, Hoffman, & Feltovich, 2006). With this much experience, an expert has had more opportunities to learn about his or her area of expertise and to store that information in memory. Research supports this idea. William Chase and Herbert Simon (1973) reported that chess masters, compared with chess novices, have superior memory for the positions of pieces in midgame board configurations, even after seeing the board only briefly. When the pieces are placed randomly on the board, however, chess masters show no advantage over novices in remembering the pieces’ positions. These findings suggest that, through experience, chess masters have stored many familiar board configurations in memory. Thus, experts have more experience in their domain and know more about it than novices do.

Second, experts organize information in memory differently from novices. Because of their additional knowledge and practice in a domain, experts have rich, interconnected representations of information in their area of expertise. Micheline Chi and her colleagues have compared physics experts with physics novices to observe differences in how the two groups represent problems. Chi, Feltovich, and Glaser (1981) found that when novices and experts were asked to sort a variety of physics problems, they grouped the problems differently. Novices tended to group problems by surface features, for example, placing all problems involving inclined planes together. Experts, however, grouped problems according to the underlying physical principles (e.g., conservation of energy) related to each problem. These and related findings suggest that experts understand their content domain at a deeper level and therefore organize information differently.

Finally, experts move through the problem space differently from novices. When solving problems in their domain of expertise, experts are less likely to use the working-backward strategy; instead, they work forward from the initial state to the goal state (Larkin, McDermott, D. Simon, & H. Simon, 1980). Novices, however, tend to work backward from the goal to the initial state. Jose Arocha and Vimla Patel (1995) compared the diagnostic reasoning of beginning, intermediate, and advanced medical students. Rather than working forward from the symptoms to a diagnosis, the students tended to search backward from a diagnosis to the symptoms. In this study, the initial case information suggested a common disease, and the least experienced students ignored subsequent case information that should have led them to revise their diagnostic hypotheses. Taken together, these findings suggest that experts use their well-organized domain knowledge to recognize a problem and solve it by moving steadily forward toward a solution.
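To make the difference between the two search directions concrete, here is a minimal Python sketch borrowed from textbook AI search rather than from the studies cited above. The problem space is hypothetical: states are positive integers, and the only operators are "add 3" and "double". `search_forward` starts from the initial state and applies operators until it reaches the goal; `search_backward` starts from the goal and asks which earlier states could have produced it. The function names and the toy task are illustrative assumptions, and neither function is meant as a model of how experts or novices actually reason.

```python
from collections import deque

# Hypothetical toy problem space: states are positive integers, and two
# operators ("add 3" and "double") move from one state to the next.
OPERATORS = {
    "add 3": lambda s: s + 3,
    "double": lambda s: s * 2,
}


def predecessors(state):
    """Invert the operators: which states could have led to `state`?"""
    preds = [("add 3", state - 3)]
    if state % 2 == 0:
        preds.append(("double", state // 2))
    return preds


def search_forward(start, goal, limit=1000):
    """Breadth-first search that works forward from the initial state."""
    frontier = deque([(start, [])])
    seen = {start}
    while frontier:
        state, path = frontier.popleft()
        if state == goal:
            return path
        for name, op in OPERATORS.items():
            nxt = op(state)
            # `limit` keeps the search finite when the goal is unreachable.
            if nxt <= limit and nxt not in seen:
                seen.add(nxt)
                frontier.append((nxt, path + [name]))
    return None


def search_backward(start, goal):
    """Breadth-first search that works backward from the goal state."""
    frontier = deque([(goal, [])])
    seen = {goal}
    while frontier:
        state, path = frontier.popleft()
        if state == start:
            return path  # already in forward (start-to-goal) order
        for name, prev in predecessors(state):
            # Operators never decrease a positive state, so anything below
            # `start` cannot lie on a path from `start`.
            if prev >= start and prev not in seen:
                seen.add(prev)
                frontier.append((prev, [name] + path))
    return None


def apply_path(start, path):
    """Replay a sequence of operator names from the initial state."""
    state = start
    for name in path:
        state = OPERATORS[name](state)
    return state


if __name__ == "__main__":
    print(search_forward(2, 11))
    print(search_backward(2, 11))
```

For a start state of 2 and a goal of 11, both searches return the same three-step solution (add 3 three times); what differs is only where the search begins. Breadth-first search is used here for simplicity, so each direction finds a shortest path in this toy space.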

Future Directions

Although problem solving, and expert problem solving in particular, has been researched extensively over the past 30 years, related theories remain to be tested and other measurement techniques remain to be applied. John Anderson’s (1983, 1990, 1993) ACT* (adaptive control of thought) model, and its successor ACT-R (adaptive control of thought-rational), is a model of knowledge representation that proposes a cognitive architecture for basic cognitive operations. ACT-R describes human knowledge as consisting of two kinds of memory: declarative memory, which consists of an interconnected network of nodes representing information, and procedural (or production) memory, which comprises productions, or if-then pairs that link particular conditions to their associated actions. Both declarative and procedural memory interact with working memory. In Anderson’s model, working memory receives information from the outside world and stores it in declarative memory, or retrieves old information from declarative memory for current use. Working memory also interacts with procedural memory: when the contents of working memory match the if condition of a production, that production’s then action can be executed. Although Anderson’s model is more complex than this summary suggests, its relevance to problem solving is clear. According to this model, problem solving likely takes place in two stages, an interpretative stage and a procedural stage. When individuals encounter a problem, they first retrieve problem-solving examples from declarative memory; this is the interpretative stage. The procedural stage follows and involves evaluating and executing the relevant if-then (condition-action) pairs. Research on Anderson’s model suggests that problem solving proceeds in a manner similar to means-ends analysis: the problem space is constantly reevaluated, and steps are executed to reduce the difference between the current state and the goal state.

In summary, Anderson’s cognitive architecture holds promise for further study of human problem solving. For example, researchers have begun to use the ACT-R model to predict patterns in brain-imaging measurements of human problem-solving performance.
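The match-and-execute cycle and the means-ends pattern described in the ACT-R discussion can be illustrated with a toy production system. The following Python sketch is not Anderson’s ACT-R software and omits nearly all of its machinery; it assumes a hypothetical task (moving a number toward a goal value), a dictionary standing in for declarative memory, and invented production names. On each cycle, every production whose if condition matches working memory is a candidate, one is fired, and its then action changes working memory, shrinking the difference between the current state and the goal.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical declarative memory: stored facts (here, step sizes) that
# productions retrieve when they act.
DECLARATIVE_MEMORY: Dict[str, int] = {"large step": 3, "small step": 1}


@dataclass
class Production:
    """A condition-action (if-then) pair."""
    name: str
    condition: Callable[[dict], bool]  # "if" part, tested against working memory
    action: Callable[[dict], None]     # "then" part, which updates working memory


def difference(wm: dict) -> int:
    """Distance between the current state and the goal state."""
    return wm["goal"] - wm["current"]


def move(size_key: str, sign: int = 1) -> Callable[[dict], None]:
    """Build an action that retrieves a step size from declarative memory and applies it."""
    def action(wm: dict) -> None:
        wm["current"] += sign * DECLARATIVE_MEMORY[size_key]
    return action


def mark_done(wm: dict) -> None:
    wm["done"] = True


LARGE = DECLARATIVE_MEMORY["large step"]

# Each production reduces the current-goal difference in a different situation.
PRODUCTIONS: List[Production] = [
    Production("goal-reached",
               lambda wm: not wm["done"] and difference(wm) == 0, mark_done),
    Production("take-large-step",
               lambda wm: not wm["done"] and difference(wm) >= LARGE, move("large step")),
    Production("take-small-step",
               lambda wm: not wm["done"] and 0 < difference(wm) < LARGE, move("small step")),
    Production("step-back",
               lambda wm: not wm["done"] and difference(wm) < 0, move("small step", sign=-1)),
]


def run(productions: List[Production], wm: dict, max_cycles: int = 100) -> List[str]:
    """Match-execute cycle: fire one matching production per cycle until none match."""
    trace = []
    for _ in range(max_cycles):
        matches = [p for p in productions if p.condition(wm)]
        if not matches:
            break
        chosen = matches[0]  # simplistic conflict resolution: first match wins
        chosen.action(wm)
        trace.append(chosen.name)
    return trace


if __name__ == "__main__":
    working_memory = {"current": 2, "goal": 10, "done": False}
    print(run(PRODUCTIONS, working_memory))
```

Starting from 2 with a goal of 10, the trace is two large steps, two small steps, and then goal-reached, because each cycle re-evaluates the remaining difference before choosing an operator. Picking the first matching production is a deliberate simplification; ACT-R itself resolves conflicts among matching productions by their estimated utility.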

Although researchers generally study human cognition by observing behaviors that are indicative of various cognitive acts (e.g., word recall as evidence of memory), they have recently turned to brain-imaging technologies to better understand what happens in the brain when we try to recognize a face, retrieve a word, make a decision, or solve a problem. Functional magnetic resonance imaging (fMRI) is one relatively new technique for measuring brain activity. Simply put, fMRI measures changes in blood flow and blood oxygenation within the brain that occur in response to neural activity. Research using fMRI indicates that blood flow in several distinct brain areas, including the left dorsolateral prefrontal cortex (DLPFC) and the anterior cingulate cortex (ACC), appears to be related to human problem solving (see Cazalis et al., 2006). In a study of problem solving, Anderson, Albert, and Fincham (2005) used fMRI to track the patterns of brain activity predicted by the ACT-R cognitive architecture. In this study, fMRI confirmed activity in the parietal region related to planning moves to solve the Tower of Hanoi problem. Thus, Anderson et al.’s study provides evidence that investigators can use fMRI both to examine human problem solving and to test the assumptions of cognitive theories and models.

Summary

This research paper has summarized the major theories, research findings, and applications of research in the field of human problem solving. Because problem solving is one of the most complex acts of human cognition, research on it will continue, likely with greater emphasis on developing computer-based models that predict both behavioral data and data from imaging studies of neural activity within the brain.

References:

- Anderson, J. R. (1983). The architecture of cognition. Cambridge, MA: Harvard University Press.

- Anderson, J. R. (1990). The adaptive character of thought. Hillsdale, NJ: Erlbaum.

- Anderson, J. R. (1993). Problem solving and learning. American Psychologist, 48(1), 35-44.

- Anderson, J. R., Albert, M. V., & Fincham, J. M. (2005). Tracing problem solving in real time: fMRI analysis of the subject-paced Tower of Hanoi. Journal of Cognitive Neuroscience, 17(8), 1261-1274.

- Arocha, J. F., & Patel, V. L. (1995). Novice diagnostic reasoning in medicine: Accounting for clinical evidence. Journal of the Learning Sciences, 4, 355-384.

- Cazalis, F., Feydy, A., Valabregue, R., Pelegrini-Issac, M., Pierot, L., & Azouvi, P. (2006). fMRI study of problem-solving after severe traumatic brain injury. Brain Injury, 20(10), 1019-1028.

- Chase, W. G., & Simon, H. A. (1973). Perception in chess. Cognitive Psychology, 4(1), 55-81.

- Chi, M. T. H., Feltovich, P. J., & Glaser, R. (1981). Categorization of physics problems by experts and novices. Cognitive Science, 5, 121-152.

- Duncker, K. (1945). On problem-solving. Psychological Monographs: General and Applied, 58(5, Whole No. 270).

- Ellis, H. C. (1978). Fundamentals of human learning, memory, and cognition. Dubuque, IA: Wm. C. Brown.

- Ericsson, K. A., Charness, N., Hoffman, R. R., & Feltovich, P. J. (Eds.). (2006). The Cambridge handbook of expertise and expert performance. Cambridge, UK: Cambridge University Press.

- Fireman, G., & Kose, G. (2002). The effect of self-observation on children’s problem solving. Journal of Genetic Psychology, 163(4), 410-423.

- Gentner, D. (1983). Structure-mapping: A theoretical framework for analogy. Cognitive Science, 7, 115-170.

- Gick, M. L., & Holyoak, K. J. (1980). Analogical problem solving. Cognitive Psychology, 12, 306-355.

- Hayes, J. (1985). Three problems in teaching general skills. In S. Chipman, J. Segal, & R. Glaser (Eds.), Thinking and learning skills (pp. 391-406). Hillsdale, NJ: Erlbaum.

- Holyoak, K. J., & Thagard, P. (1989). Analogical mapping by constraint satisfaction. Cognitive Science, 13, 295-355.

- Köhler, W. (1927). The mentality of apes. New York: Harcourt Brace.

- Larkin, J., McDermott, J., Simon, D., & Simon, H. (1980). Expert and novice performance in solving physics problems. Science, 208, 1335-1342.

- Newell, A., & Simon, H. A. (1972). Human problem solving. Englewood Cliffs, NJ: Prentice Hall.

- Plaut, D. C. (1999). Computational modeling of word reading, acquired dyslexia, and remediation. In R. M. Klein & P. A. McMullen (Eds.), Converging methods in reading and dyslexia (pp. 339-372). Cambridge, MA: MIT Press.
