
Before social scientists can study the feelings, thoughts, behaviors, and performance of individuals, and before practitioners (such as therapists, case managers, or school staff) can respond to clients’ problems in those areas, they must be able to measure the phenomena in question. Measurement tools include measures, instruments, scales, indices, questionnaires, and surveys. Complex social constructs such as “depression,” “worker satisfaction,” or “reading achievement” cannot be assessed with one question. A depression scale, for example, requires multiple questions (or items) to fully capture depression’s affective, cognitive, and physical dimensions. An individual’s responses to multiple items on a scale are typically combined (e.g., averaged or summed) to give one composite score. Measurement validation is the process of demonstrating the quality of a measure, the scores obtained with the measure, or the interpretation of those scores. Validation is necessary because scores from measures may be inaccurate. Respondents may misunderstand items, deliberately provide inaccurate responses, or simply lack the knowledge or ability to provide accurate responses. Specific items or the scale as a whole may not accurately reflect the target construct.
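The combining step described above can be sketched in a few lines of Python. This is a minimal illustration, not a scoring procedure from the sources cited here. It assumes every item is already keyed in the same direction on a shared numeric response scale and that no answers are missing. The function name `composite_score` and the five response values are hypothetical.

```python
from statistics import fmean
from typing import Sequence


def composite_score(responses: Sequence[float], method: str = "mean") -> float:
    """Combine one respondent's item responses into a single composite score.

    Hypothetical helper: assumes all items share the same response scale,
    are keyed in the same direction, and have no missing values.
    """
    if not responses:
        raise ValueError("at least one item response is required")
    if method == "sum":
        return float(sum(responses))
    if method == "mean":
        return fmean(responses)
    raise ValueError(f"unknown method: {method!r}")


# Hypothetical answers to a five-item scale rated 0 (never) to 3 (always).
answers = [2, 1, 3, 0, 2]
print(composite_score(answers, "sum"))   # 8.0
print(composite_score(answers, "mean"))  # 1.6
```

When every item is answered, summing and averaging rank respondents identically, because the sum is always the number of items times the mean. Averaging has the practical advantage of keeping the composite on the original response metric.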

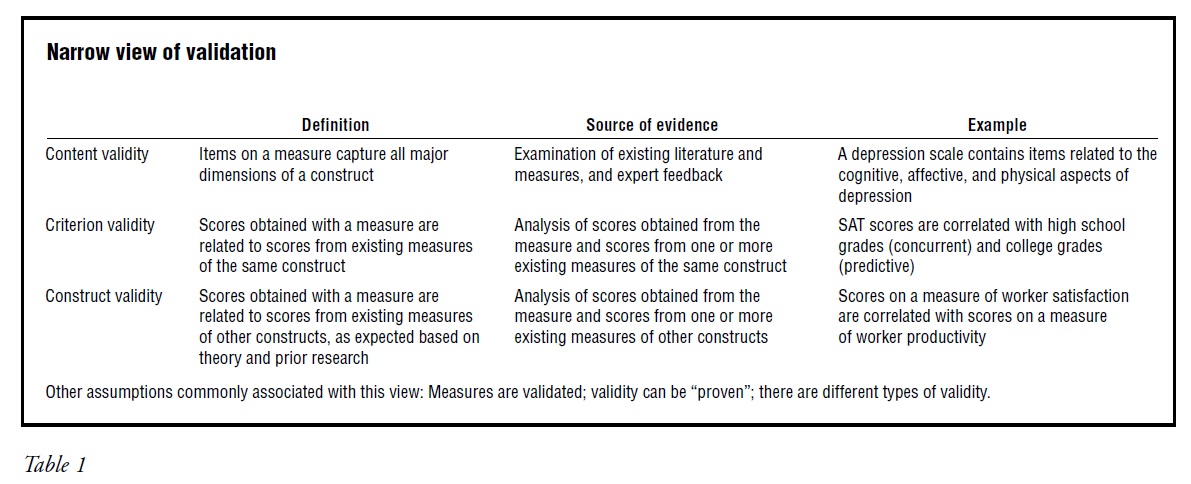

A common textbook definition of validity focuses on how accurately and completely a measure captures its target construct. From this perspective, a measure can be sufficiently validated with evidence of content validity, criterion validity, and/or construct validity (see Table 1). Validity is treated as a characteristic of the measure itself and as a goal that can be demonstrated. For example, a scale developer might claim that a new worker-satisfaction scale is valid after presenting the results of content and criterion validity analyses.

As commonly applied, this approach to validation has several shortcomings. First, it focuses narrowly on item content and score performance. Second, as Kenneth A. Bollen (1989) elaborates, it ignores potential problems with traditional correlational analyses, including the possibly erroneous assumption that scores from the existing measures used for comparison are themselves valid. Third, it relies on indirect methods of assessing whether respondents interpreted items and response options as intended.
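Criterion-related evidence usually comes down to a correlation between scores on the new measure and scores on an existing criterion measure. The Python sketch below uses simulated data to illustrate one way the assumption questioned above can fail. When the criterion is itself measured with error, the same new-scale scores produce a noticeably lower correlation, so the coefficient depends on the quality of the criterion and not only on the new scale. The true-score-plus-error model, the error sizes, the sample size, and the function names are all illustrative assumptions, not a method taken from the sources cited here.

```python
import math
import random


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def criterion_correlation(criterion_error_sd, n=5000, seed=1):
    """Correlate a simulated new scale with a simulated criterion measure.

    Assumed toy model: both measures equal a respondent's true construct
    level plus independent normal error. The new scale's error SD is fixed
    at 0.5; only the criterion's error SD varies between calls. Using the
    same seed keeps the true levels and new-scale scores identical.
    """
    rng = random.Random(seed)
    true_levels = [rng.gauss(0.0, 1.0) for _ in range(n)]
    new_scale = [t + rng.gauss(0.0, 0.5) for t in true_levels]
    criterion = [t + rng.gauss(0.0, criterion_error_sd) for t in true_levels]
    return pearson_r(new_scale, criterion)


# Same new-scale scores, compared against an error-free and an error-laden criterion.
print(round(criterion_correlation(0.0), 3))
print(round(criterion_correlation(1.0), 3))
```

Under this toy model the population correlations are 1/√1.25 ≈ 0.89 for the error-free criterion and 1/√2.5 ≈ 0.63 for the noisy one. The simulated values should land close to these figures, even though the new scale is identical in both comparisons.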

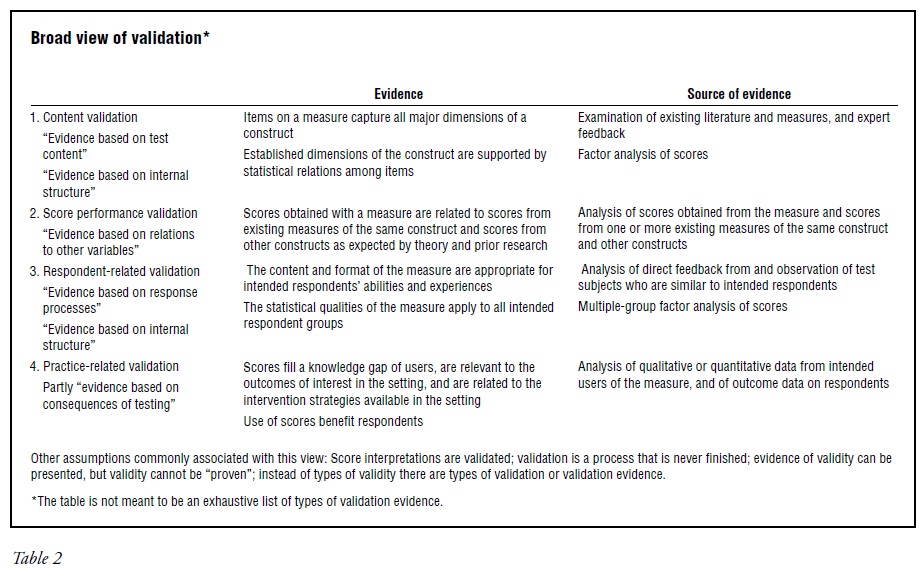

A broader view of validation defines it as an ongoing process of building a case that (1) scores obtained with a measure accurately reflect the target construct and (2) those scores can be interpreted and used as intended. This view implies that validation is an unending, multifaceted process and that it applies to interpretations of scores obtained with a measure, not to the measure itself. It also makes evaluating how scores are interpreted and used an essential part of the validation process, which in turn gives values and ethics a legitimate role in the evaluation of measures. Elements of this broader view have been advanced by measurement scholars from numerous social science disciplines, for example Robert Adcock and David Collier (2001), Kenneth A. Bollen (1989), Lee J. Cronbach (1988), Samuel Messick (1988), and Howard Wainer and Henry I. Braun (1988), as well as by three social science professional organizations: the American Educational Research Association, the American Psychological Association, and the National Council on Measurement in Education (American Educational Research Association et al. 1999). From this broader perspective, the approach to validation presented in Table 1 is inadequate.

Table 2 presents components of a broad view of measurement validation. The components of Table 1 are present (in rows 1 and 2), but other evidence is considered necessary to validate the interpretation and use of scores. Additional statistical procedures that are often used in scale development but less often presented as evidence of validity are also included (in rows 1 and 3). Corresponding categories of validation evidence described in the Standards for Educational and Psychological Testing (1999) are listed under the terms in the first column. It is unlikely that any validation process will include all elements in the table, but the more sources and methods used, the stronger the case for validation will be.

Table 1. Narrow view of validation

Other assumptions commonly associated with this view: measures are validated; validity can be “proven”; there are different types of validity.

Table 2. Broad view of validation*

Other assumptions commonly associated with this view: score interpretations are validated; validation is a process that is never finished; evidence of validity can be presented, but validity cannot be “proven”; instead of types of validity, there are types of validation or validation evidence.

*The table is not meant to be an exhaustive list of types of validation evidence.

Respondent-Related Validation

A direct method of assessing whether a scale measures what it is intended to measure is to interview pilot test subjects about their interpretation of items. Gordon B. Willis (2005) and Stanley Presser et al. (2004) provide detail on cognitive interviewing techniques. Data collected can be analyzed (usually qualitatively) to identify problem words or concepts and evidence that items or response options were misunderstood. Establishing that respondents interpret original or revised items as intended can contribute significantly to the validation case for a measure’s score interpretations.
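The analysis is mainly qualitative, but once interviewers have coded their notes, a simple tally can show which items drew problems from many respondents and deserve a closer look. The Python sketch below assumes a hypothetical coding scheme (one record per respondent, item, and problem code) and an arbitrary 20 percent flagging threshold. The function name, item labels, and problem codes are illustrative, and the tally supplements, rather than replaces, a careful reading of the interview data.

```python
from collections import defaultdict


def flag_problem_items(coded_notes, n_respondents, threshold=0.2):
    """Summarize coded cognitive-interview notes by item.

    coded_notes: iterable of (respondent_id, item_id, problem_code) tuples,
    one per problem an interviewer recorded. Returns the items for which the
    share of respondents showing any problem is at least `threshold`,
    together with the distinct problem codes observed for each item.
    """
    respondents_with_problem = defaultdict(set)
    codes_seen = defaultdict(set)
    for respondent, item, code in coded_notes:
        respondents_with_problem[item].add(respondent)
        codes_seen[item].add(code)

    flagged = {}
    for item, respondents in respondents_with_problem.items():
        share = len(respondents) / n_respondents
        if share >= threshold:
            flagged[item] = (share, sorted(codes_seen[item]))
    return flagged


# Hypothetical coded notes from 10 pilot interviews.
notes = [
    ("R01", "item3", "misread word"),
    ("R04", "item3", "misread word"),
    ("R07", "item3", "unclear response options"),
    ("R02", "item5", "unclear response options"),
]
print(flag_problem_items(notes, n_respondents=10))
# {'item3': (0.3, ['misread word', 'unclear response options'])}
```

Counting respondents rather than raw problem records keeps one talkative interviewee from inflating an item's share.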

Demonstrating that respondents understand, interpret, and respond to items as intended is evidence of what Kevin Corcoran (1995, p. 1946) has called the “suitability and acceptability” of a measure for its intended population. More specifically, it may constitute evidence of developmental validity (the content and format of a scale are appropriate for the cognitive, attentional, and other abilities of individuals). Natasha K. Bowen, Gary L. Bowen, and Michael E. Woolley (2004) and Michael E. Woolley, Gary L. Bowen, and Natasha K. Bowen (2004) discuss the concept of developmental validity and a sequence of scale-development steps that promote it. The process may also generate evidence of cultural validity (the content and format of a scale are appropriate given respondents’ experiences, which may vary with language, nationality, race/ethnicity, economic status, education level, religion, and other characteristics). Although these examples are presented as types of validity, they are easily reframed as validation evidence that supports confidence in the interpretation of scores obtained from respondents with different characteristics.

Certain statistical analyses of scores obtained with a measure, such as multiple group factor analysis, can also support respondent-related validation. The analyses may provide statistical evidence that scores obtained from members of different groups (e.g., males, females; members of different cultural groups) can be interpreted the same way.
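A full multiple group factor analysis is normally run in structural equation modeling software and is too involved for a short example. As a much simpler, swapped-in descriptive check, the Python sketch below computes Tucker's congruence coefficient, which compares the loading pattern of a factor estimated separately in two groups. Values near 1 indicate similar patterns, and cutoffs around 0.95 are sometimes suggested as rules of thumb. The loadings are hypothetical, and this check does not test measurement invariance the way multiple group factor analysis does.

```python
import math


def congruence(loadings_a, loadings_b):
    """Tucker's congruence coefficient between two factor-loading vectors.

    Each vector holds the loadings of the same items, in the same order,
    on one factor estimated separately in a different group.
    """
    if len(loadings_a) != len(loadings_b):
        raise ValueError("loading vectors must cover the same items")
    numerator = sum(a * b for a, b in zip(loadings_a, loadings_b))
    denominator = math.sqrt(
        sum(a * a for a in loadings_a) * sum(b * b for b in loadings_b)
    )
    return numerator / denominator


# Hypothetical one-factor loadings for a four-item scale in two groups.
group_1 = [0.72, 0.68, 0.75, 0.61]
group_2 = [0.70, 0.65, 0.78, 0.30]
print(round(congruence(group_1, group_1), 3))
print(round(congruence(group_1, group_2), 3))
```

With these made-up numbers the coefficient stays near 0.98 even though the fourth item's loading is roughly halved in the second group. This insensitivity to a single divergent item is one reason congruence works better as a quick screen than as a substitute for formal multiple group analysis.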

Practice-Related Validation

Some measurement scholars, such as Cronbach (1988) and Messick (1988), stress that the uses and consequences of scores must be considered in the validation process. Messick states: “The key validity issues are the interpretability, relevance, and utility of scores, the import or value implications of scores as a basis for action, and the functional worth of scores in terms of social consequences of their use” (p. 33). Practice-related validation requires researchers to examine the context in which a measure is to be used—the setting, the users, and the intended uses of the measure. As demonstrated by Natasha K. Bowen and Joelle D. Powers (2005), practice-related validation of a school-based assessment might include evidence that the construct measured is related to achievement, evidence that school staff who will use the scores currently lack (and want) the information provided by the measure, and evidence that resources exist at the school for addressing the threats to achievement revealed in the obtained scores.

As pointed out by Cronbach (1988), evaluation of the context and consequences of score interpretations necessarily involves a consideration of values. The consequences of decisions based on scores may determine “who gets what in society” (p. 5). Standardized school test scores, college entrance exam scores, and mental health screening scores, for example, may be used to determine, respectively, who gets what instructional resources, who goes to college, and who receives mental health services. Given how the use of scores from social science measures affects the distribution of resources and the opportunities of individuals to succeed, a broad, thorough, ongoing approach to the validation process is an ethical necessity for social scientists.

Bibliography:

- Adcock, Robert, and David Collier. 2001. Measurement Validity: A Shared Standard for Qualitative and Quantitative Research. American Political Science Review 95 (3): 529–546.

- American Educational Research Association, American Psychological Association, and National Council on Measurement in Education. 1999. Standards for Educational and Psychological Testing. Washington, DC: American Educational Research Association.

- Bollen, Kenneth A. 1989. Structural Equations with Latent Variables. New York: John Wiley & Sons.

- Bowen, Natasha K., Gary L. Bowen, and Michael E. Woolley. 2004. Constructing and Validating Assessment Tools for School-Based Practitioners: The Elementary School Success Profile. In Evidence-Based Practice Manual: Research and Outcome Measures in Health and Human Services, eds. Albert R. Roberts and Kenneth R. Yeager, 509–517. New York: Oxford University Press.

- Bowen, Natasha K., and Joelle D. Powers. 2005. Knowledge Gaps among School Staff and the Role of High Quality Ecological Assessments in Schools. Research on Social Work Practice 15 (6): 491–500.

- Corcoran, Kevin. 1995. Psychometrics. In The Encyclopedia of Social Work, 19th ed., ed. Richard L. Edwards, 1942–1947. Washington, DC: NASW.

- Cronbach, Lee J. 1988. Five Perspectives on the Validity Argument. In Test Validity, eds. Howard Wainer and Henry I. Braun, 3–17. Hillsdale, NJ: Lawrence Erlbaum.

- Messick, Samuel. 1988. The Once and Future Issues of Validity: Assessing the Meaning and Consequences of Measurement. In Test Validity, eds. Howard Wainer and Henry I. Braun, 33–45. Hillsdale, NJ: Lawrence Erlbaum.

- Presser, Stanley, Jennifer M. Rothgeb, Mick P. Couper, et al., eds. 2004. Methods for Testing and Evaluating Survey Questionnaires. Hoboken, NJ: John Wiley & Sons.

- Wainer, Howard, and Henry I. Braun. 1988. Introduction. In Test Validity, eds. Howard Wainer and Henry I. Braun, xvii–xx. Hillsdale, NJ: Lawrence Erlbaum.

- Willis, Gordon B. 2005. Cognitive Interviewing: A Tool for Improving Questionnaire Design. Thousand Oaks, CA: Sage.

- Woolley, Michael E., Gary L. Bowen, and Natasha K. Bowen. 2004. Cognitive Pretesting and the Developmental Validity of Child Self-Report Instruments: Theory and Applications. Research on Social Work Practice 14 (3): 191–200.
